June 13, 2013 feature

What is the best way to measure a researcher's scientific impact?

(Phys.org) —From a qualitative perspective, it's relatively easy to define a good researcher as one who publishes many good papers. But measuring those papers quantitatively is more complicated, since they can be evaluated in several different ways. In the past few years, a number of metrics have been proposed that gauge an individual's scientific caliber from the quantity and quality of their peer-reviewed publications. However, most of these metrics assume that all authors of a multi-author paper contribute equally. In a new study, researchers argue that this assumption biases these metrics, and they propose a new metric that accounts for the relative contributions of all coauthors, giving a rational way to capture a researcher's scientific impact.

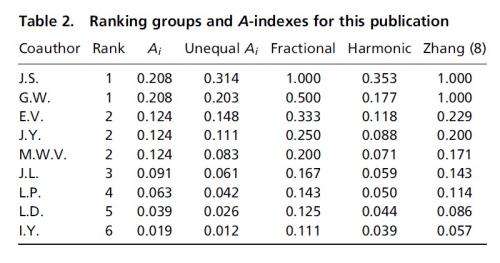

The researchers, Jonathan Stallings, et al., have published their paper "Determining scientific impact using a collaboration index" in a recent issue of PNAS.

"Since we all have credit cards, it goes without saying that measuring credit is important in daily life," corresponding author Ge Wang, the Clark & Crossan Endowed Chair Professor in the Department of Biomedical Engineering at Rensselaer Polytechnic Institute in Troy, New York, told Phys.org. "How to measure intellectual credit is a hot topic, but a way has been missing to individualize scientific impact rigorously for teamwork such as a joint peer-reviewed publication. Our recent PNAS paper provides an axiomatic answer to this fundamental question."

Currently, one of the most common measures of an individual's scientific impact is the H-index, which reflects both a researcher's number of publications and the number of citations per publication (a proxy for each publication's quality). Specifically, a scientist has index h if h of their papers have at least h citations each and the remaining papers have no more than h citations each. The H-index does not account for the possibility that some collaborators contributed more than others to a paper, and it falls short in other situations as well. For example, when a researcher has only a few publications that are highly cited, the researcher's h value can never exceed the number of publications, no matter how often those papers are cited.
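The H-index definition above can be computed directly from a list of citation counts. The following Python sketch is for illustration only; the function name `h_index` and the sample citation counts are hypothetical, not taken from the study.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h papers have at least h citations each."""
    # Sort citation counts from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    # The paper at 1-based rank i supports h = i only if it has >= i citations.
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation records, invented for illustration only.
print(h_index([10, 8, 5, 4, 3]))   # 4
print(h_index([1000, 900, 800]))   # 3: capped by the number of papers
```

The second call shows the limitation described above: three papers with hundreds of citations each still yield h = 3.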

The scientist who originally proposed the H-index, Jorge E. Hirsch, noted that the index is best used when comparing researchers of similar scientific age and that highly collaborative researchers may have inflated values. He suggested normalizing the H-index based on the average number of coauthors. However, the researchers in the new study want to account for the coauthors' relative contributions axiomatically in order to minimize bias.

"Any quantitative measure of scientific productivity and impact is necessarily biased because intellect is the most complicated wonder that should not be absolutely measurable," Wang said. "Any measurement will miss something, which makes research interesting. When we have to measure a paper for multiple reasons, our axiomatic bibliometric approach is the best choice one can hope for."

The new measure of scientific impact is based on a set of axioms that define the space of possible coauthor credits, together with the most likely probability distribution of what the researchers call a credit vector, which specifies the relative credit of each coauthor of a given paper. Because the method is derived from these axioms, it is called the A-index.

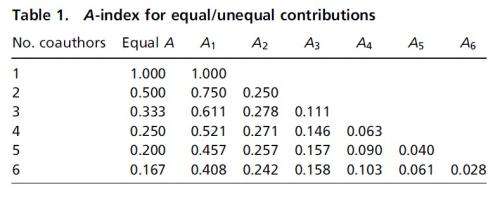

In the A-index, coauthors are ranked by contribution and assigned to groups. For a publication with just one author, that author always has an A-index of 1. Coauthors who contribute equally to a publication are placed in the same group and split that group's credit equally; for example, four coauthors who contribute equally would each have an A-index of 0.25. But if each coauthor contributes a different amount, each forms a separate group, and the credit is distributed in a weighted fashion. For example, four coauthors with decreasing contributions would have A-indexes of 0.521, 0.271, 0.146, and 0.063, respectively.
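The article quotes the A-index values but not the formula behind them. The sketch below assumes that the credit vector is the average (centroid) of the ordered credit vectors allowed by the axioms, which gives a member of the k-th of c groups a credit of (1/c) * sum over j >= k of 1/(n_1 + ... + n_j), where n_i is the size of group i and groups are ordered from largest to smallest contribution. This assumed formula reproduces the numbers above (1, 0.25, and roughly 0.521, 0.271, 0.146, and 0.063), but the function `a_index` and its group-size input are hypothetical illustrations, not the authors' code.

```python
from fractions import Fraction


def a_index(group_sizes: list[int]) -> list[float]:
    """Credit share for one member of each coauthor group.

    group_sizes[k] is the number of coauthors in the k-th group, with groups
    ordered from largest to smallest contribution. ASSUMPTION: a member of
    group k receives (1/c) * sum_{j >= k} 1/(n_1 + ... + n_j), where c is the
    number of groups.
    """
    if not group_sizes or any(n < 1 for n in group_sizes):
        raise ValueError("need at least one group, each with >= 1 coauthor")
    c = len(group_sizes)
    # Cumulative author counts n_1, n_1 + n_2, ..., n_1 + ... + n_c.
    cumulative = []
    total = 0
    for n in group_sizes:
        total += n
        cumulative.append(total)
    credits = []
    for k in range(c):
        # Exact rational arithmetic; convert to float only at the end.
        share = sum(Fraction(1, cumulative[j]) for j in range(k, c)) / c
        credits.append(float(share))
    return credits


print(a_index([1]))            # [1.0]  single author
print(a_index([4]))            # [0.25] four equal coauthors, one group
print(a_index([1, 1, 1, 1]))   # approx. 0.521, 0.271, 0.146, 0.0625
```

Because every coauthor in a group receives the same share, the shares across all coauthors sum to 1; for example, groups of sizes 1 and 2 give 2/3 to the lead author and 1/6 to each of the other two. The exact last value for four distinct coauthors is 0.0625, which the article reports rounded to 0.063.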

The sum of a researcher's A-indexes over all of their papers, called the C-index, gives a weighted count of publications based on that researcher's relative contributions. The A-index (a single-paper metric) can also be used to weight an individual's share of a publication's quality, whether quality is defined by the journal's impact factor or by the number of citations the publication has received. Summing these weighted values over a researcher's papers gives the productivity index, or P-index.
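The C-index and P-index are simple sums once each paper's A-index is known. A minimal sketch, assuming a hypothetical `Paper` record that stores the researcher's A-index and a quality score (impact factor or citation count, whichever is chosen):

```python
from dataclasses import dataclass


@dataclass
class Paper:
    """One publication from a single researcher's point of view (hypothetical record)."""
    a_index: float   # this researcher's credit share on the paper
    quality: float   # e.g. journal impact factor or citation count


def c_index(papers: list[Paper]) -> float:
    """Contribution-weighted publication count: the sum of the A-indexes."""
    return sum(p.a_index for p in papers)


def p_index(papers: list[Paper]) -> float:
    """Contribution-weighted quality: the sum of A-index times paper quality."""
    return sum(p.a_index * p.quality for p in papers)


# Hypothetical record: a sole-author paper with 40 citations, plus a four-author
# paper with 100 citations on which this researcher had an equal 0.25 share.
record = [Paper(a_index=1.0, quality=40), Paper(a_index=0.25, quality=100)]
print(c_index(record))  # 1.25
print(p_index(record))  # 65.0
```

Swapping citation counts for journal impact factors only changes the `quality` values; the sums themselves stay the same.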

When testing the C-index and P-index on 186 biomedical engineering researchers and in simulation tests, the researchers found that these metrics provide a fairer and more balanced way of measuring scientific impact than the N-index (simply the number of a researcher's publications) and the H-index.

One important point of comparison is that, while a high H-index requires a large number of publications, a researcher can achieve a high P-index with just a few publications if they appear in high-impact-factor journals or are heavily cited. A researcher can also achieve a high P-index by publishing many moderately important papers. In this way, the P-index balances quantity and quality, weighting each paper by the researcher's relative contribution rather than relying only on the total number of publications. This makes the P-index useful for evaluating young researchers and for comparing researchers with different collaborative tendencies.
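To make the comparison concrete, the sketch below scores two invented researchers, using citation counts as the quality measure in the P-index. The data, names, and helper functions are hypothetical; the point is only that the two indexes can rank the same people in opposite orders.

```python
def h_index(citations: list[int]) -> int:
    # With counts sorted high to low, c >= rank holds exactly for the top h papers.
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


def p_index(papers: list[tuple[float, int]]) -> float:
    # Each paper is (this researcher's A-index, citation count); quality = citations.
    return sum(a * cites for a, cites in papers)


# Two hypothetical researchers, invented for illustration only.
few_but_cited = [(1.0, 500)] * 3   # 3 sole-author papers, 500 citations each
many_moderate = [(0.2, 20)] * 20   # 20 papers, 5 equal coauthors, 20 citations each

for name, papers in [("few_but_cited", few_but_cited), ("many_moderate", many_moderate)]:
    h = h_index([cites for _, cites in papers])
    p = p_index(papers)
    print(f"{name}: h = {h}, P = {p:.1f}")
# few_but_cited: h = 3, P = 1500.0
# many_moderate: h = 20, P = 80.0
```

The few heavily cited sole-author papers produce a far higher P-index despite a much lower H-index, which is the behavior described above.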

"Our axiomatic framework is a fair and sensitive playground," Wang said. "It should encourage smoother and greater collaboration instead of discouraging it, because it is well known that 1+1>2 in many cases and especially so for increasingly important interdisciplinary projects."

The researchers point out that a main criticism of the new metrics is the lack of a well-defined system for ranking coauthors, a problem shared by all collaboration metrics. They emphasize that developing such a ranking system is necessary for realizing the full potential of these metrics.

The researchers also note that the A-index can be used to weight other metrics of scientific impact, such as the H-index, and they hope to investigate these possibilities further in the future.

Publication details

Jonathan Stallings, et al. "Determining scientific impact using a collaboration index." PNAS Early Edition. DOI: 10.1073/pnas.1220184110

Journal information: Proceedings of the National Academy of Sciences

© 2013 Phys.org. All rights reserved.