August 19, 2010 weblog

Computer chip that computes probabilities and not logic

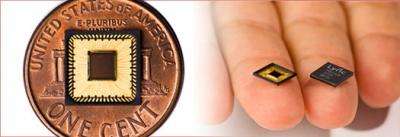

(PhysOrg.com) -- Lyric Semiconductor has unveiled a new type of chip that uses probabilities as inputs and outputs instead of the conventional 1's and 0's used in today's logic chips. For many of today's computing tasks, crunching probabilities is a much better fit than binary logic.

Ben Vigoda, CEO and founder of Lyric Semiconductor, has been working aggressively on this technology since 2006, with partial funding from the U.S. Defense Advanced Research Projects Agency (DARPA). DARPA is interested in using the technology in defense applications that involve information that is not clear-cut, where probability calculations can help reach a conclusion.

Because probability calculations are used in so many products, there are many potential applications. Ben Vigoda stated: "To take one example, Amazon's recommendations to you are based on probability. Any time you buy from them, the fraud check on your credit card is also probability based, and when they e-mail your confirmation, it passes through a spam filter that also uses probability."

Conventional chips have transistors arranged in digital NAND gates, which are used to implement digital logic functions using 1's and 0's. In a probability processor, transistors are used instead to build Bayesian NAND gates. Bayesian probability is a field of mathematics named after the eighteenth-century English statistician Thomas Bayes.
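To make the idea of a gate that works on probabilities more concrete, here is a minimal Python sketch of one way a NAND gate can be generalized so that its inputs and output are probabilities rather than bits. It assumes the two inputs are statistically independent, and the function name `soft_nand` is hypothetical. It illustrates the math only and is not a description of Lyric's actual circuit.

```python
# Hypothetical sketch: a "soft" NAND gate that maps input probabilities to an
# output probability, assuming the two inputs are statistically independent.
# Illustration only; not Lyric Semiconductor's circuit design.


def soft_nand(p_a: float, p_b: float) -> float:
    """Return P(NAND(a, b) = 1) given P(a = 1) = p_a and P(b = 1) = p_b.

    NAND outputs 0 only when both inputs are 1, so with independent inputs
    P(out = 1) = 1 - P(a = 1) * P(b = 1).
    """
    for p in (p_a, p_b):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return 1.0 - p_a * p_b


if __name__ == "__main__":
    # With certain inputs (exactly 0 or 1) the gate behaves like an ordinary NAND.
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, soft_nand(a, b))
    # With uncertain inputs the output is a probability, not a bit.
    print(soft_nand(0.9, 0.8))  # about 0.28
```

When every input is exactly 0 or 1, the sketch reproduces the ordinary NAND truth table, which is the sense in which a probability gate can be said to generalize a logic gate.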

Lyric Semiconductor plans to have prototypes of its general-purpose probability chips operational within three years. A smaller error-correcting chip for flash memory, based on the same probability technology, becomes available for licensing this week. The company plans to have its flash memory chips in portable devices such as tablets and smartphones within two years.
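The article does not say which error-correcting code or decoding algorithm the flash chip uses. As a hedged illustration of how probabilities (rather than hard 1's and 0's) can drive error correction, the Python sketch below shows one step used in soft-decision decoders for codes such as LDPC codes: working out how likely a bit is to be 1 when a parity check over several uncertain bits must hold. The function names and the example probabilities are hypothetical.

```python
# Hypothetical sketch of one probabilistic step in soft-decision error
# correction. Decoders for codes such as LDPC codes repeat updates of this
# kind; the article does not say which code or algorithm Lyric's chip uses.
from math import prod
from typing import Sequence


def parity_one_probability(bit_probs: Sequence[float]) -> float:
    """Return P(b_1 XOR ... XOR b_n = 1), where bit_probs[i] = P(b_i = 1).

    Uses the identity 1 - 2 * P(parity = 1) = prod(1 - 2 * p_i),
    which holds when the bits are independent.
    """
    if any(not 0.0 <= p <= 1.0 for p in bit_probs):
        raise ValueError("probabilities must lie in [0, 1]")
    return (1.0 - prod(1.0 - 2.0 * p for p in bit_probs)) / 2.0


def check_to_bit_message(bit_probs: Sequence[float], target: int) -> float:
    """P(bit `target` = 1) implied by an even-parity check on all the bits.

    For the check to be satisfied, the target bit must equal the XOR of the
    other bits, so only the other bits' probabilities are used.
    """
    others = [p for i, p in enumerate(bit_probs) if i != target]
    return parity_one_probability(others)


def combine(p1: float, p2: float) -> float:
    """Fuse two independent estimates of P(bit = 1) via Bayes' rule,
    assuming a uniform prior on the bit."""
    num = p1 * p2
    den = num + (1.0 - p1) * (1.0 - p2)
    if den == 0.0:
        raise ValueError("estimates are contradictory certainties")
    return num / den


if __name__ == "__main__":
    # Three bits read from flash: two look confidently like 1, one is unclear.
    reads = [0.9, 0.95, 0.6]
    msg = check_to_bit_message(reads, target=2)  # about 0.14: check favors 0
    print(msg, combine(reads[2], msg))           # fused estimate about 0.196
```

Here the parity check, together with the two confident reads, pulls the ambiguous third bit toward 0, correcting a weak read without ever forcing it to a hard 1 or 0 first.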

More information:

Lyric Semiconductor

Via: Technology Review

© 2010 PhysOrg.com