IBM Scientists Demonstrate World's Fastest Graphene Transistor

(PhysOrg.com) -- In a paper just published in the journal Science, IBM researchers demonstrated a radio-frequency graphene transistor with a cut-off frequency of 100 gigahertz (GHz), or 100 billion cycles per second. This is the highest cut-off frequency achieved so far by any graphene device.

This accomplishment is a key milestone for the Carbon Electronics for RF Applications (CERA) program, which DARPA funds to develop next-generation communication devices.

The record was achieved with wafer-scale, epitaxially grown graphene, using processing technology compatible with that used in advanced silicon device fabrication.

"A key advantage of graphene lies in the very high speeds in which electrons propagate, which is essential for achieving high-speed, high-performance next generation transistors," said Dr. T.C. Chen, vice president, Science and Technology, IBM Research. "The breakthrough we are announcing demonstrates clearly that graphene can be utilized to produce high performance devices and integrated circuits."

Graphene is a single atom-thick layer of carbon atoms bonded in a hexagonal honeycomb-like arrangement. This two-dimensional form of carbon has unique electrical, optical, mechanical and thermal properties and its technological applications are being explored intensely.

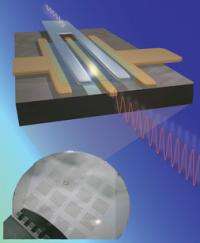

Uniform, high-quality graphene wafers were synthesized by thermal decomposition of a silicon carbide (SiC) substrate. The transistor itself used a metal top-gate architecture and a novel gate insulator stack combining a polymer with a high-dielectric-constant (high-k) oxide. Its gate length was a relatively long 240 nanometers, leaving plenty of room to improve performance further by scaling the gate length down.
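The point about gate length can be made concrete with a standard first-order textbook estimate. This is our assumption, not the analysis in the Science paper. The intrinsic cut-off frequency $f_T$ is set by the transconductance $g_m$ and the gate capacitance $C_g$. Equivalently, it is set by the transit time $\tau$ a carrier needs to cross a channel of length $L_g$ at an effective velocity $v_{\mathrm{eff}}$. The estimate ignores parasitic capacitance and contact resistance, which matter in real devices.

$$
f_T \approx \frac{g_m}{2\pi C_g} \approx \frac{1}{2\pi\tau} = \frac{v_{\mathrm{eff}}}{2\pi L_g}
$$

Plugging in the reported values under this simple model, $f_T = 100$ GHz at $L_g = 240$ nm implies $v_{\mathrm{eff}} = 2\pi L_g f_T \approx 2\pi\,(240\times10^{-9}\,\mathrm{m})(10^{11}\,\mathrm{Hz}) \approx 1.5\times10^{5}$ m/s. Because $f_T \propto 1/L_g$ in this picture, halving the gate length would, to first order, roughly double the cut-off frequency.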

Notably, the graphene device's cut-off frequency already exceeds that of state-of-the-art silicon transistors with the same gate length (about 40 GHz). Devices made from graphene obtained from natural graphite performed similarly, showing that graphene of different origins can deliver high performance. The team had previously demonstrated graphene transistors with a cut-off frequency of 26 GHz using graphene flakes extracted from natural graphite.

More information: Carbon Based Chips May One Day Replace Silicon Transistors: www.physorg.com/news184420861.html

Provided by IBM