Smartphone app illuminates power consumption

(PhysOrg.com) -- A new application for the Android smartphone shows users and software developers how much power their applications are consuming. PowerTutor was developed by doctoral students and professors at the University of Michigan.

Battery-powered cell phones now serve as hand-held computers and much more. We run power-hungry applications on them, yet we also depend on them to be available in emergencies.

"Today, we expect our phones to realize more and more functions, and we also expect their batteries to last," said Lide Zhang, a doctoral student in the Department of Electrical Engineering and Computer Science and one of the application's developers. "PowerTutor will help make that possible."

PowerTutor will enable software developers to build more energy-efficient products, said Birjodh Tiwana, a doctoral student in the Department of Electrical Engineering and Computer Science and another of the program's developers. Tiwana said PowerTutor will let users compare the power consumption of different applications and choose the leanest one that performs the desired task. Users can also watch how their actions affect the phone's battery life.

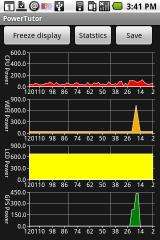

PowerTutor shows in real time how much power four different phone components consume: the screen, the network interface, the processor, and the Global Positioning System (GPS) receiver.

To create the application, the researchers disassembled their phones and installed electrical current meters. They then determined the relationship between each phone's internal state (how bright the screen is, for example) and its actual power consumption. That allowed them to produce a software model that can estimate the power use of any program running on the phone with less than 5 percent error.
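The article does not describe the form of the model. As a minimal sketch, assuming power draw is roughly linear in each component's state, the calibration step could be an ordinary least-squares fit of current-meter readings against component states, after which the fitted coefficients estimate power from state alone. The state features (screen brightness, CPU utilization, network active, GPS on), the function names `fit_power_model` and `estimate_power_mw`, and all coefficient values are hypothetical and synthetic; they are not PowerTutor's actual inputs or measurements.

```python
from typing import List, Sequence

# Hypothetical state vector, one entry per component named in the article:
# (screen brightness 0..1, CPU utilization 0..1, network active 0/1, GPS on 0/1)
State = Sequence[float]


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: component states do not vary enough")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_power_model(states: List[State], measured_mw: List[float]) -> List[float]:
    """Least-squares fit of measured power (mW) to component states.

    Returns [baseline, coef_1, ..., coef_k] via the normal equations X^T X b = X^T y.
    """
    rows = [[1.0, *s] for s in states]  # leading 1.0 models the baseline (idle) draw
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, measured_mw)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def estimate_power_mw(coeffs: List[float], state: State) -> float:
    """Estimate power from a state vector using fitted coefficients."""
    return coeffs[0] + sum(c * x for c, x in zip(coeffs[1:], state))


def relative_error(estimated: float, measured: float) -> float:
    """Fractional error of an estimate against a meter reading."""
    return abs(estimated - measured) / measured


if __name__ == "__main__":
    # Synthetic "meter readings" generated from made-up coefficients (mW).
    true_coeffs = [100.0, 600.0, 400.0, 300.0, 400.0]
    states = [
        (0.0, 0.0, 0, 0), (1.0, 0.0, 0, 0), (0.4, 0.5, 0, 0), (0.2, 1.0, 1, 0),
        (0.8, 0.2, 0, 1), (0.6, 0.8, 1, 1), (0.1, 0.1, 1, 0), (0.0, 0.6, 0, 1),
    ]
    measured = [estimate_power_mw(true_coeffs, s) for s in states]
    fitted = fit_power_model(states, measured)
    print("fitted coefficients:", [round(c, 3) for c in fitted])
    print("estimate for (0.5, 0.5, 1, 0):", round(estimate_power_mw(fitted, (0.5, 0.5, 1, 0)), 3))
```

Because the synthetic readings are exactly linear, the fit recovers the made-up coefficients; with real meter data the residuals, measured with `relative_error`, are what an accuracy figure like "less than 5 percent" would summarize. Per-component attribution then follows from the individual terms of the sum, such as the screen's share being its coefficient times its brightness.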

PowerTutor can also display a history of power consumption. It is available free from the Android Market at www.android.com/market/.

PowerTutor was developed under the direction of associate professor Robert Dick and assistant professor Morley Mao, both in the Department of Electrical Engineering and Computer Science, and Lei Yang, a software engineer at Google. The work is supported by Google and the National Science Foundation, and was done in collaboration with the joint University of Michigan and Northwestern University Empathic Systems Project.

Provided by University of Michigan