'Motion picture' of past warming paves way for snapshots of future climate change

(PhysOrg.com) -- By accurately modeling Earth's last major global warming -- and answering pressing questions about its causes -- scientists led by a University of Wisconsin-Madison climatologist are unraveling the intricacies of the kind of abrupt climate shifts that may occur in the future.

"We want to know what will happen in the future, especially if the climate will change abruptly," says Zhengyu Liu, a UW-Madison professor of atmospheric and oceanic sciences and director of the Center for Climatic Research in the Nelson Institute for Environmental Studies. "The problem is, you don't know if your model is right for this kind of change. The important thing is validating your model."

To do so, Liu and his colleagues run their model back in time and match the results of the climate simulation with the physical evidence of past climate.

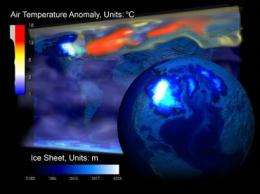

Starting with the last glacial maximum about 21,000 years ago, Liu's team simulated atmospheric and oceanic conditions through what scientists call the Bølling-Allerød warming, the Earth's last major temperature hike, which occurred about 14,500 years ago. The simulation agreed closely with conditions (temperatures, sea levels and glacial coverage) reconstructed from fossil and geologic records.

"It's our most serious attempt to simulate this last major global warming event, and it's a validation of the model itself, as well," Liu says.

The results of the new climate modeling experiments are presented today (July 17) in the journal Science.

The group's simulations were executed on "Phoenix" and "Jaguar," a pair of Cray supercomputers at Oak Ridge National Laboratory in Oak Ridge, Tenn., and helped pin down the contributions of three environmental factors as drivers of the Bølling-Allerød warming: an increase in atmospheric carbon dioxide, the jump-start of stalled heat-moving ocean currents and a large buildup of subsurface heat in the ocean while those currents were dormant.

The climate dominoes began to fall during that period after glaciers reached their maximum coverage, blanketing most of North America, Liu explains. As glaciers melted, massive quantities of water poured into the North Atlantic, lowering the ocean salinity that helps power a major convection current that acts like a conveyor belt to carry warm tropical surface water north and cooler, heavier subsurface water south.

As a result, according to the model, ocean circulation stopped. Without warm tropical water streaming north, the North Atlantic cooled and heat backed up in southern waters. Subsequently, glacial melt slowed or stopped as well, which eventually allowed the overturning current to restart, now with a much larger reserve of heat to haul north.

"All that stored heat is released like a volcano, and poured out over decades," Liu explains. "That warmed up Greenland and melted (arctic) sea ice."

The model showed a 15-degree Celsius increase in average temperatures in Greenland and a 5-meter increase in sea level over just a few centuries, findings that squared neatly with the climate of the period as represented in the physical record.

"Being able to successfully simulate thousands of years of past climate for the first time with a comprehensive climate model is a major scientific achievement," notes Bette Otto-Bliesner, an atmospheric scientist and climate modeler at National Center for Atmospheric Research (NCAR) and co-author of the Science report. "This is an important step toward better understanding how the world's climate could change abruptly over the coming centuries with increasing melting of the ice caps."

The rate of ice melt during the Bølling-Allerød warming is still at issue, but its consequences are not, Liu says. The modelers simulated both a slow decrease in melt and a sudden end to melt run-off. In both cases, the result was a 15-degree warming.

"That happened in the past," Liu says. "The question is, in the future, if you have a global warming and Greenland melts, will it happen again?"

Time — both actual and computing — will tell. In 2008, the group simulated about one-third of the last 21,000 years. With another 4 million processor hours to go, the simulations being conducted by the Wisconsin group will eventually run up to the present and 200 years into the future.

Traditional climate modeling approaches were limited by computer time and capabilities, Liu explains.

"They did slides, like snapshots," Liu says. "You simulate 100 years, and then you run another 100 years, but those centuries may be 2,000 years apart (in the model). To look at abrupt change, there is no shortcut."

Using the interactions between land, water, atmosphere and ice in the Community Climate System Model developed at NCAR, the researchers have been able to create a much more detailed and closely spaced book of snapshots, "giving us more of a motion picture of the climate" over millennia, Liu said.

He stressed the importance of drawing together specialists in computing, oceanography, atmospheric science and glaciers — including John Kutzbach, a UW-Madison climate modeler, and UW-Madison doctoral student Feng He, responsible for modeling the glacial melt. All were key to attaining the detail necessary in recreating historical climate conditions, Liu says.

"All this data, it's from chemical proxies and bugs in the sediment," Liu said. "You really need a very interdisciplinary team: people on deep ocean, people on geology, people who know those bugs. It is a huge — and very successful — collaboration."

Source: University of Wisconsin-Madison