Beyond -- way beyond -- WIMP interfaces

(PhysOrg.com) -- Human-computer interaction is undergoing a revolution, entering a multimodal era that goes beyond, way beyond, the WIMP (Windows-Icons-Menus-Pointers) paradigm. Now European researchers have developed a platform to speed up that revolution.

We have the technology. So why is our primary human-computer interface (HCI) based on the 35-year-old Windows-Icons-Menus-Pointers paradigm? Voice, gestures, touch, haptics, force feedback and many other sensors or effectors exist that promise to simplify and simultaneously enhance human interaction with computers, but we are still stuck with 100 or so keys, a mouse and sore wrists.

In part, the slow pace of interface development is just history repeating itself. The story of mechanical systems that worked faster than handwriting is a 150-year saga that, eventually, led to the QWERTY keyboard in the early 1870s.

In part, the problem is one of complexity. Interface systems have to adapt to human morphology and neurology and they have to do their job better than before. It can take a lot of time to figure out how to improve these interfaces.

The revolution has begun, with touch and 2D/3D gesture systems reinventing mobile phones and gaming. But the pace of development and deployment has been painfully slow. One European project hopes to change that.

The EU-funded OpenInterface (OI) project took as its starting point the many interaction devices currently available - touch screens, motion sensors, speech recognisers and many others - and sought to create an open source development framework capable of quickly and simply supporting the design and development of new user interfaces by mixing and matching different types of input device and modality.

“These devices and modalities have been around a long time, but whenever developers seek to employ them in new ways or simply in their applications, they have to reinvent the wheel,” notes Laurence Nigay, OpenInterface’s coordinator.

“They need to characterise the device, develop ways to get it to work with technology and other interface systems. And then do a lot of testing to make sure it usefully improves how people interact with technology,” Nigay says.

Then the whole interminable process begins anew.

OI gets in your interface

But with the OI framework, designers can rapidly prototype new input systems and methods.

Here is how it works. The framework consists of a kernel, a graphical tool for assembling components, and a repository of components. Together they let developers explore different interaction possibilities. Shorter development time allows more design iterations on the way to a usable multimodal user interface.

The graphical environment of the framework is called OIDE, or OpenInterface Interaction Development Environment. It allows designers to assemble components into a “pipeline” that defines a particular multimodal interaction.
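The pipeline idea can be illustrated with a minimal Python sketch. It is not the OI kernel or the OIDE, and every name in it (`make_pipeline`, `tilt_to_direction`, `direction_to_command`, the 15-degree threshold) is a hypothetical chosen for illustration. It only shows the general pattern of chaining small components so that the output of one becomes the input of the next.

```python
from typing import Callable, Iterable

# Hypothetical component type: any function that maps one event to another.
Component = Callable[[dict], dict]


def make_pipeline(components: Iterable[Component]) -> Component:
    """Chain components so that each one's output feeds the next."""
    stages = list(components)

    def run(event: dict) -> dict:
        for stage in stages:
            event = stage(event)
        return event

    return run


def tilt_to_direction(event: dict) -> dict:
    # Device-side component: turn a raw tilt angle (degrees) into a direction.
    # The 15-degree threshold is an arbitrary value for this sketch.
    if event["tilt"] > 15:
        direction = "next"
    elif event["tilt"] < -15:
        direction = "previous"
    else:
        direction = "none"
    return {**event, "direction": direction}


def direction_to_command(event: dict) -> dict:
    # Application-side component: turn a direction into a slide-viewer command.
    commands = {"next": "NEXT_SLIDE", "previous": "PREVIOUS_SLIDE", "none": "NO_OP"}
    return {**event, "command": commands[event["direction"]]}


slide_pipeline = make_pipeline([tilt_to_direction, direction_to_command])
```

With these assumptions, `slide_pipeline({"tilt": 30})["command"]` evaluates to `"NEXT_SLIDE"`. Swapping the first component for one that reads a different device would leave the rest of the pipeline unchanged, which is the kind of mixing and matching the article describes.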

Currently, the framework includes various interaction devices and modalities, including the SHAKE (a lab-quality motion-sensing device), the Wii remote (Wiimote), the iPhone, Interface-Z sensors, different speech recognisers and a vision-based finger tracker, as well as several toolkits including ARToolKit and Phidgets.

Proof at hand

The proof of the pudding is in the eating and OpenInterface developed a large series of interface concepts to test the OIDE (see Showcase at www.oi-project.org). One application used a Wiimote to operate a slide viewer. Another developed game controls using the tilt sensor of the iPhone and the motion sensor in the Wiimote.

Another slide viewer was developed to switch between a wide variety of interfaces, including the Wiimote, SHAKE, Interface-Z sensors and the Space Navigator. Users could choose any interaction device they wished. A balloon input system combined with a Wiimote allowed users to zoom into a specific point on the slide simply by using the Wiimote and squeezing the balloon.

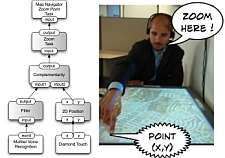

Mapping featured in the project too. One application used a SHAKE motion sensor to navigate a map, while an augmented table-top touch screen could process voice commands combined with touch, for example to ‘zoom here’. A third map application allowed users to combine a Wiimote and an iPhone to navigate around a map. All were sophisticated demonstrations of the OIDE’s power.
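Combining a spoken command with a touch, as in the ‘zoom here’ example, is often handled by pairing events that arrive close together in time. The Python sketch below shows that general technique under stated assumptions. The event classes, the `fuse` function and the 1.5-second window are all hypothetical, and nothing here is taken from the project's own code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TouchEvent:
    x: float
    y: float
    timestamp: float  # seconds


@dataclass
class VoiceEvent:
    phrase: str
    timestamp: float  # seconds


def fuse(voice: VoiceEvent, touch: TouchEvent, window: float = 1.5) -> Optional[dict]:
    """Pair a spoken 'zoom here' with a touch made within `window` seconds."""
    if voice.phrase.strip().lower() != "zoom here":
        return None  # not the command this sketch understands
    if abs(voice.timestamp - touch.timestamp) > window:
        return None  # the two inputs are too far apart to belong together
    # The voice supplies the action, the touch supplies the location.
    return {"action": "zoom", "x": touch.x, "y": touch.y}
```

Under these assumptions, a touch at (120, 80) made 0.4 seconds after the phrase "Zoom here" yields a zoom command at that point, while the same touch made three seconds later yields `None`. The window length is a design choice that would need tuning with real users.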

Lastly, several multimodal interfaces have been developed for games, in particular games on mobile phones. As the mobile game market tends to follow the more mature console/PC game market, and as phones with new sensors become more widespread, game developers will be more and more interested in following this new trend.

“All these applications were developed simply to demonstrate the capacity of the OI framework to rapidly develop and prototype new multimodal interfaces on PC and mobile phones that combine several input devices,” explains Nigay.

Works with computers, too

Multimodal interaction can be developed for PC applications as well as mobile phones. The framework is open source, supports various programming languages and can be downloaded from the project website.

The framework is also extensible, so if a new input device reaches the market, it simply needs to be characterised and plugged into the framework to then become part of the framework ‘reference interface library’.
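One common way to make a library of devices extensible is a registry that new device readers add themselves to. The Python sketch below shows that pattern only. The names `DEVICE_LIBRARY`, `register_device` and `read_from` are hypothetical, the readers return fixed sample events rather than talking to hardware, and none of this is the OI framework's actual interface.

```python
from typing import Callable, Dict

# Hypothetical registry: maps a device name to a function that returns one
# normalised event from that device.
DEVICE_LIBRARY: Dict[str, Callable[[], dict]] = {}


def register_device(name: str):
    """Decorator that adds a device reader to the library under `name`."""

    def decorator(reader: Callable[[], dict]) -> Callable[[], dict]:
        if name in DEVICE_LIBRARY:
            raise ValueError(f"device already registered: {name}")
        DEVICE_LIBRARY[name] = reader
        return reader

    return decorator


@register_device("wiimote")
def read_wiimote() -> dict:
    # Stand-in for a real driver call; returns a fixed sample event.
    return {"source": "wiimote", "gesture": "swipe_right"}


@register_device("new_sensor")
def read_new_sensor() -> dict:
    # A newly added device only has to produce events in the same shape.
    return {"source": "new_sensor", "gesture": "swipe_right"}


def read_from(name: str) -> dict:
    """Read one event from whichever registered device the user picked."""
    return DEVICE_LIBRARY[name]()
```

Because both readers emit events in the same shape, an application that calls `read_from("new_sensor")` needs no other changes to use the new device.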

According to the partners, this is a first step towards a commercial tool for multimodal interaction. The only existing tools dedicated to multimodal interaction like this, according to the project, are research prototypes.

In addition to the framework, the OI repository describing various interaction modalities is also a reference point for the community interested in this field. It is also a way to promote multimodal interaction in various application domains including gaming, map navigation and education.

The platform also ushers in another welcome development: standards. So far, according to the OI project, no industry-agreed standard for defining multimodal interfaces on mobile devices exists. The project has championed the creation of a standards group at the European Telecommunications Standards Institute.

Next steps include taking the concept further, both through standards initiatives and further research.

More information: OpenInterface project -- www.oi-project.org/

Provided by ICT Results