Stanford researchers developing 3-D camera with 12,616 lenses

The camera you own has one main lens and produces a flat, two-dimensional photograph, whether you hold it in your hand or view it on your computer screen. On the other hand, a camera with two lenses (or two cameras placed apart from each other) can take more interesting 3-D photos.

But what if your digital camera saw the world through thousands of tiny lenses, each a miniature camera unto itself? You'd get a 2-D photo, but you'd also get something potentially more valuable: an electronic "depth map" containing the distance from the camera to every object in the picture, a kind of super 3-D.

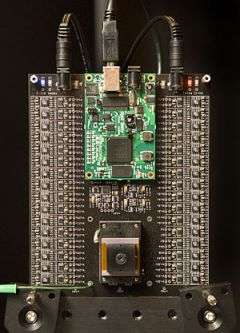

Stanford electronics researchers, led by electrical engineering Professor Abbas El Gamal, are developing such a camera, built around their "multi-aperture image sensor." They've shrunk the pixels on the sensor to 0.7 microns, several times smaller than pixels in standard digital cameras. They've grouped the pixels in arrays of 256 pixels each, and they're preparing to place a tiny lens atop each array.

"It's like having a lot of cameras on a single chip," said Keith Fife, a graduate student working with El Gamal and another electrical engineering professor, H.-S. Philip Wong. In fact, if their prototype 3-megapixel chip had all its micro lenses in place, they would add up to 12,616 "cameras."

Point such a camera at someone's face, and it would, in addition to taking a photo, precisely record the distances to the subject's eyes, nose, ears, chin, etc. One obvious potential use of the technology: facial recognition for security purposes.

But there are a number of other possibilities for a depth-information camera: biological imaging, 3-D printing, creation of 3-D objects or people to inhabit virtual worlds, or 3-D modeling of buildings.

The technology is expected to produce a photo in which almost everything, near or far, is in focus. But it would be possible to selectively defocus parts of the photo after the fact, using editing software on a computer.

Knowing the exact distance to an object might give robots better spatial vision than humans and allow them to perform delicate tasks now beyond their abilities. "People are coming up with many things they might do with this," Fife said. The three researchers published a paper on their work in the February edition of the IEEE ISSCC Digest of Technical Papers.

Their multi-aperture camera would look and feel like an ordinary camera, or even a smaller cell phone camera. The cell phone aspect is important, Fife said, given that "the majority of the cameras in the world are now on phones."

Here's how it works:

The main lens (also known as the objective lens) of an ordinary digital camera focuses its image directly on the camera's image sensor, which records the photo. The objective lens of the multi-aperture camera, on the other hand, focuses its image about 40 microns (a micron is a millionth of a meter) above the image sensor arrays. As a result, any point in the photo is captured by at least four of the chip's mini-cameras, producing overlapping views, each from a slightly different perspective, just as the left eye of a human sees things differently than the right eye.
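The way slightly different viewpoints encode distance can be illustrated with the textbook two-view triangulation relation, in which depth is inversely proportional to the shift (disparity) of a point between two views. This is only a sketch of the general principle, not the researchers' algorithm: the function name, the pinhole-camera model and every numeric value below are assumptions chosen for illustration.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Classic pinhole stereo relation: depth = focal_length * baseline / disparity.

    focal_length_px -- focal length expressed in pixels (hypothetical value)
    baseline_m      -- distance between the two viewpoints, in meters
    disparity_px    -- shift of the same scene point between the views, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_length_px * baseline_m / disparity_px


# Made-up numbers: a point that shifts more between views is closer.
near_m = depth_from_disparity(focal_length_px=1000.0, baseline_m=0.001, disparity_px=4.0)
far_m = depth_from_disparity(focal_length_px=1000.0, baseline_m=0.001, disparity_px=1.0)
print(f"large shift: {near_m:.2f} m, small shift: {far_m:.2f} m")
```

A point seen by four or more mini-cameras would give several such measurements, which could in principle be combined into one distance estimate.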

The outcome is a detailed depth map, invisible in the photograph itself but electronically stored along with it. It's a virtual model of the scene, ready for manipulation by computation. "You can choose to do things with that image that you weren't able to do with the regular 2-D image," Fife said. "You can say, 'I want to see only the objects at this distance,' and suddenly they'll appear for you. And you can wipe away everything else."
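Fife's "only the objects at this distance" example amounts to filtering the photo by its depth map. A minimal sketch, assuming the photo and the depth map are stored as equally sized grids (the function name, the list-of-lists layout and the sample values are all hypothetical):

```python
def keep_depth_range(image, depth_map, near, far, fill=0):
    """Return a copy of `image` in which every pixel whose depth lies
    outside [near, far] is replaced by `fill`."""
    return [
        [pixel if near <= depth <= far else fill
         for pixel, depth in zip(image_row, depth_row)]
        for image_row, depth_row in zip(image, depth_map)
    ]


# Tiny made-up example: brightness values and distances in meters.
image = [[10, 20, 30],
         [40, 50, 60]]
depth_map = [[1.0, 2.0, 5.0],
             [1.1, 2.1, 5.2]]

# Keep only what is roughly two meters away; wipe everything else.
selected = keep_depth_range(image, depth_map, near=1.9, far=2.5)
print(selected)
```

Only the middle column, whose depths fall between 1.9 and 2.5 meters, survives; the other pixels are set to the fill value.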

Or the sensor could be deployed naked, with no objective lens at all. By placing the sensor very close to an object, each micro lens would take its own photo without the need for an objective lens. It has been suggested that a very small probe could be placed against the brain of a laboratory mouse, for example, to detect the location of neural activity.

Other researchers are headed toward similar depth-map goals from different approaches. Some use intelligent software to inspect ordinary 2-D photos for the edges, shadows or focus differences that might indicate the distances of objects. Others have tried cameras with multiple lenses, or prisms mounted in front of a single camera lens. One approach employs lasers; another attempts to stitch together photos taken from different angles, while yet another involves video shot from a moving camera.

But El Gamal, Fife and Wong believe their multi-aperture sensor has some key advantages. It's small and doesn't require lasers, bulky camera gear, multiple photos or complex calibration. And it has excellent color quality. Each of the 256 pixels in a specific array detects the same color. In an ordinary digital camera, red pixels may be arranged next to green pixels, leading to undesirable "crosstalk" between the pixels that degrades color.

The sensor also can take advantage of smaller pixels in a way that an ordinary digital camera cannot, El Gamal said, because camera lenses are nearing the optical limit of the smallest spot they can resolve. Using a pixel smaller than that spot will not produce a better photo. But with the multi-aperture sensor, smaller pixels produce even more depth information, he said.

The technology also may aid the quest for the huge photos possible with a gigapixel camera, which would have about 140 times as many pixels as today's typical 7-megapixel cameras. The first benefit of the Stanford technology is straightforward: Smaller pixels mean more pixels can be crowded onto the chip.
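The pixel counts quoted in this article can be checked with a few lines of arithmetic. The constants come from the text; the last figure is purely hypothetical, since it assumes a gigapixel chip would keep the prototype's 256-pixel arrays, which the article does not say.

```python
GIGAPIXEL = 1_000_000_000
TYPICAL_CAMERA_PIXELS = 7_000_000   # the "7-megapixel" camera in the article
PIXELS_PER_ARRAY = 256              # pixels under each micro lens
PROTOTYPE_ARRAYS = 12_616           # mini-cameras on the prototype chip

# How many times more pixels a gigapixel camera has than a 7-megapixel one.
ratio = GIGAPIXEL / TYPICAL_CAMERA_PIXELS

# Total pixel count of the prototype, which the article calls "3-megapixel".
prototype_pixels = PIXELS_PER_ARRAY * PROTOTYPE_ARRAYS

# Hypothetical: number of 256-pixel arrays that would fill a gigapixel chip.
gigapixel_arrays = GIGAPIXEL // PIXELS_PER_ARRAY

print(f"gigapixel vs. 7 megapixels: about {ratio:.0f} times")
print(f"prototype: {prototype_pixels:,} pixels")
print(f"arrays on a gigapixel chip (assumed layout): {gigapixel_arrays:,}")
```

The ratio works out to roughly 143, in line with the rounded figure of 140, and the prototype total of about 3.2 million pixels matches its 3-megapixel description.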

The second benefit involves chip architecture. With a billion pixels on one chip, some of them are sure to go bad, leaving dead spots, El Gamal said. But the overlapping views provided by the multi-aperture sensor provide backups when pixels fail.
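The backup idea can be sketched in a few lines: if several mini-cameras record the same scene point, a failed pixel can simply be left out when their readings are combined. This is an illustrative assumption, not the chip's documented behavior; the function name, the use of `None` to mark a known dead pixel and the choice of plain averaging are all hypothetical.

```python
def combine_views(samples):
    """Average the readings of one scene point taken by several
    mini-cameras, skipping any reading marked as dead (None).

    Returns None if every reading for the point is dead.
    """
    live = [s for s in samples if s is not None]
    if not live:
        return None
    return sum(live) / len(live)


# One scene point seen by four mini-cameras; the second pixel has failed.
point_views = [118, None, 122, 120]
value = combine_views(point_views)
print(value)
```

With one of four readings missing, the point is still recovered from the remaining three, so a single dead pixel leaves no hole in the picture.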

The researchers are now working out the manufacturing details of fabricating the micro-optics onto a camera chip.

The finished product may cost less than existing digital cameras, the researchers say, because the quality of a camera's main lens will no longer be of paramount importance. "We believe that you can reduce the complexity of the main lens by shifting the complexity to the semiconductor," Fife said.

Source: Stanford University