Why a famous technologist is being branded a Luddite

On December 21, the company SpaceX made history by successfully launching a rocket and returning it to a safe landing on Earth. It's also the day that SpaceX founder Elon Musk was nominated for a Luddite Award.

The nomination came as part of a campaign by the Information Technology & Innovation Foundation (ITIF), a leading science and technology policy think tank, to call out the "worst of the year's worst innovation killers."

It's an odd juxtaposition, to say the least.

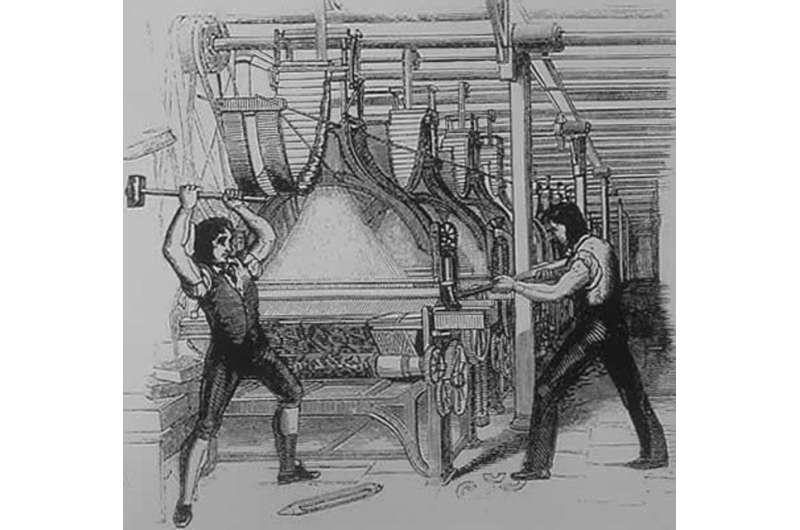

The Luddite Awards – named after an 18th-century English worker who inspired a backlash against the Industrial Revolution – highlight what ITIF refers to as "egregious cases of neo-Luddism in action."

Musk, of course, is hardly a shrinking violet when it comes to promoting technology innovation. Whether it's self-driving cars, reusable commercial rockets or the futuristic "hyperloop," he's not known for being a tech party pooper.

So what's the deal?

ITIF, as it turns out, took exception to Musk's concerns over the potential dangers of artificial intelligence (AI) – along with those other well-known "neo-Luddites," Stephen Hawking and Bill Gates.

ITIF is right to highlight the importance of technology innovation as an engine for growth and prosperity. But what it misses by a mile is the importance of innovating responsibly.

Being cautious ≠ smashing the technology

Back in 2002, the European Environment Agency (EEA) published its report Late Lessons from Early Warnings. The report – and its 2013 follow-on publication – catalogs innovations, from PCBs to the use of asbestos, that damaged lives and environments because early warnings of possible harm were either ignored or overlooked.

This is a picture that is all too familiar these days as we grapple with the consequences of unfettered innovation – whether it's climate change, environmental pollution or the health impacts of industrial chemicals.

Things get even more complex, though, with emerging technologies like AI, robotics and the "internet of things." With these and other innovations, it's increasingly unclear what future risks and benefits lie over the horizon – especially when they begin to converge.

This confluence – the "Fourth Industrial Revolution" as it's being called by some – is generating remarkable opportunities for economic growth. But it's also raising concerns. Klaus Schwab, founder of the World Economic Forum and an advocate of the new "revolution," writes: "the [fourth industrial] revolution could yield greater inequality, particularly in its potential to disrupt labor markets. As automation substitutes for labor across the entire economy, the net displacement of workers by machines might exacerbate the gap between returns to capital and returns to labor."

Schwab is, by any accounting, a technology optimist. Yet he recognizes the social and economic complexities of innovation, and the need to act responsibly if we are to see a societal return on our techno-investment.

Of course, every generation has had to grapple with the consequences of innovation. And it's easy to argue that past inventions have led to a better present – especially if you're privileged and well-off. Yet our generation faces unprecedented technology innovation challenges that simply cannot be brushed off by assuming business as usual.

For the first time in human history, for instance, we can design and engineer the stuff around us at the level of the very atoms it's made of. We can redesign and reprogram the DNA at the core of every living organism. We can aspire to creating artificial systems that are a match for human intelligence. And we can connect ideas, people and devices together faster and with more complexity than ever before.

Innovating responsibly

This explosion of technological capabilities offers unparalleled opportunities for fighting disease, improving well-being and eradicating inequalities. But it's also fraught with dangers. And like any complex system, it's likely to look great… right up to the moment it fails.

Because of this, an increasing number of people and organizations are exploring how we as a society can avoid future disasters by innovating responsibly. It's part of the reason Arizona State University launched the new School for the Future of Innovation in Society, where I teach, earlier this year. And it's the motivation behind Europe's commitment to Responsible Research and Innovation.

This is far from a neo-Luddite movement. People the world over are starting to ask how we can proactively innovate to improve lives, and not simply innovate in the hope that things will work out OK in the end.

This includes some of the world's most august scientific bodies. In December, for instance, the US National Academy of Sciences, the Chinese Academy of Sciences and the UK's Royal Society jointly convened a global summit on human gene editing. At stake was the responsible development and use of techniques that enable the human genome to be redesigned and passed on to future generations.

In a joint statement, the summit organizers concluded: "It would be irresponsible to proceed with any clinical use of germline editing unless and until (i) the relevant safety and efficacy issues have been resolved, based on appropriate understanding and balancing of risks, potential benefits, and alternatives, and (ii) there is broad societal consensus about the appropriateness of the proposed application."

Neo-Luddites? Or simply responsible scientists? I'd go for the latter.

If innovation is to serve society's needs, we need to ask tough questions about what the consequences might be, and how we might do things differently to avoid mistakes. And rather than deserving the label "neo-Luddite," Musk and others should be applauded for asking what could go wrong with technology innovation, and thinking about how to avoid it.

That said, if anything, they sometimes don't go far enough. Musk's answer to his AI fears, for instance, was to launch an open AI initiative – in effect accelerating the development of AI in the hopes that the more people are involved, the more responsible it'll be.

It's certainly a novel approach – and one that seriously calls into question ITIF's Luddite label. But it still adheres to the belief that the answer to technology innovation is… more technology innovation.

The bottom line is that innovation that improves the lives and livelihoods of all – not just the privileged – demands a willingness to ask questions, challenge assumptions and work across boundaries to build a better society.

If that's what it means to be a Luddite, count me in!

Source: The Conversation

This story is published courtesy of The Conversation (under Creative Commons-Attribution/No derivatives).
