UN report wants moratorium on killer robots (Update)

Killer robots that can attack targets without any human input "should not have the power of life and death over human beings," a new draft U.N. report says.

The report for the U.N. Human Rights Council, posted online this week, deals with legal and philosophical issues involved in giving robots lethal powers over humans, echoing countless science-fiction novels and films. The debate dates to author Isaac Asimov's first rule for robots in the 1942 story "Runaround": "A robot may not injure a human being or, through inaction, allow a human being to come to harm."

Report author Christof Heyns, a South African professor of human rights law, calls for a worldwide moratorium on the "testing, production, assembly, transfer, acquisition, deployment and use" of killer robots until an international conference can develop rules for their use.

His findings are due to be debated at the Human Rights Council in Geneva on May 29.

According to the report, the United States, Britain, Israel, South Korea and Japan have developed various types of fully or semi-autonomous weapons.

In the report, Heyns focuses on a new generation of weapons that choose their targets and execute them. He calls them "lethal autonomous robotics," or LARs for short, and says: "Decisions over life and death in armed conflict may require compassion and intuition. Humans—while they are fallible—at least might possess these qualities, whereas robots definitely do not."

He notes the arguments of robot proponents that death-dealing autonomous weapons "will not be susceptible to some of the human shortcomings that may undermine the protection of life. Typically they would not act out of revenge, panic, anger, spite, prejudice or fear. Moreover, unless specifically programmed to do so, robots would not cause intentional suffering on civilian populations, for example through torture. Robots also do not rape."

The report goes beyond the recent debate over drone strikes that kill al-Qaida suspects and maim or kill nearby civilians. Drones do have human oversight. Killer robots, by contrast, are programmed to make autonomous decisions on the spot without orders from humans.

Heyns' report notes the increasing use of drones, which "enable those who control lethal force not to be physically present when it is deployed, but rather to activate it while sitting behind computers in faraway places, and stay out of the line of fire.

"Lethal autonomous robotics (LARs), if added to the arsenals of States, would add a new dimension to this distancing, in that targeting decisions could be taken by the robots themselves. In addition to being physically removed from the kinetic action, humans would also become more detached from decisions to kill - and their execution," he wrote.

His report cites these examples, among others, of fully or semi-autonomous weapons that have been developed:

— The U.S. Phalanx system for Aegis-class cruisers, which automatically detects, tracks and engages anti-air warfare threats such as anti-ship missiles and aircraft.

— Israel's Harpy, a "Fire-and-Forget" autonomous weapon system designed to detect, attack and destroy radar emitters.

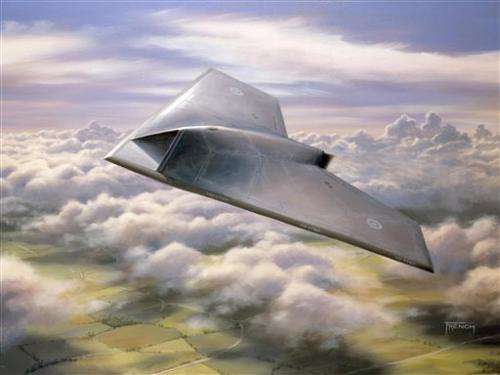

— Britain's Taranis jet-propelled combat drone prototype that can autonomously search, identify and locate enemies but can only engage with a target when authorized by mission command. It also can defend itself against enemy aircraft.

— The Samsung Techwin surveillance and security guard robots, deployed in the demilitarized zone between North and South Korea, to detect targets through infrared sensors. They are currently operated by humans but have an "automatic mode."

Current weapons systems are supposed to have some degree of human oversight. But Heyns notes that "the power to override may in reality be limited because the decision-making processes of robots are often measured in nanoseconds and the informational basis of those decisions may not be practically accessible to the supervisor. In such circumstances humans are de facto out of the loop and the machines thus effectively constitute LARs," or killer robots.

Separately, another U.N. expert, British lawyer Ben Emmerson, is preparing a special investigation for the U.N. General Assembly this year on drone warfare and targeted killings.

His probe was requested by Pakistan, which officially opposes the use of U.S. drones on its territory as an infringement on its sovereignty but is believed to have tacitly approved some strikes in the past. Pakistani officials say the drone strikes kill many innocent civilians, a claim the U.S. has rejected. The other two countries requesting the investigation were Russia and China, both permanent members of the U.N. Security Council.

In April, an alliance of activist and humanitarian groups led by Human Rights Watch launched the "Campaign to Stop Killer Robots" to push for a ban on fully autonomous weapons. The group applauded Heyns' draft report in a statement on its website.

More information: The U.N. draft report: www.ohchr.org/Documents/HRBodi … 3/A-HRC-23-47_en.pdf

© 2013 The Associated Press. All rights reserved.