Smart streaming readout system analyzes raw data from nuclear physics experiments

Nuclear physics experiments are data intensive. Particle accelerators probe collisions of subatomic particles such as protons, neutrons, and quarks to reveal details of the bits that make up matter. Instruments that measure the particles in these experiments generate torrents of raw data. To get a better handle on the data, nuclear physicists are turning to artificial intelligence and machine learning methods.

Recent tests of two streaming readout systems that use such methods found that the systems were able to perform real-time processing of raw experimental data. The tests also demonstrated that each system performed well in comparison with traditional systems. The results are published in The European Physical Journal Plus.

Streaming readout systems use advanced computer software to collect and analyze data generated by a device in real time. They require a less complex physical infrastructure than traditional systems, and they can be far more powerful, efficient, and flexible, as well as faster. A streaming readout system can maximize the information extracted from an experiment, from initial decisions about which data to save to flagging unexpected physics captured in very complex detector systems. These systems also store more of the original data for analysis. This gives a more holistic picture of each event, because the system keeps the whole event rather than only the small part of it that set off a trigger.

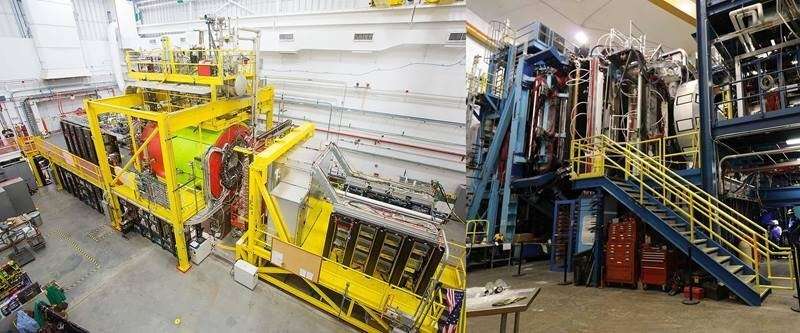

Nuclear physics is demanding and getting more so every year. Advances in experiments require powerful software and computing resources to make sense of the extreme amounts of raw data that experiments produce. For instance, the Continuous Electron Beam Accelerator Facility (CEBAF), a Department of Energy (DOE) Office of Science user facility at Thomas Jefferson National Accelerator Facility (Jefferson Lab), initiates cascades of subatomic particles thousands of times per second.

These experiments generate enormous amounts of raw data every day. To harness the data, nuclear physicists have relied on hardware-based "triggered" systems to help them pre-sort data based on timed events. These systems only record data for a short period once a particular event is detected.
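The triggered approach can be illustrated with a toy model. The sketch below is a minimal Python illustration under stated assumptions, not a description of how CEBAF's hardware triggers actually work: it assumes a hypothetical `Hit` record (timestamp, channel, amplitude), treats any hit above an amplitude `threshold` as the trigger, and saves only the hits that fall inside a fixed `window_ns` after it. The names `Hit`, `triggered_readout`, `threshold`, and `window_ns` are all illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Hit:
    """One digitized detector signal (hypothetical format)."""
    time_ns: int      # timestamp in nanoseconds
    channel: int      # detector channel that fired
    amplitude: float  # signal size in arbitrary units


def triggered_readout(hits: Iterable[Hit], threshold: float, window_ns: int) -> List[List[Hit]]:
    """Toy trigger: keep only hits inside a fixed window opened by a large hit.

    A hit whose amplitude exceeds `threshold` opens a readout window lasting
    `window_ns`; hits arriving while the window is open are saved with it.
    Every other hit is dropped at readout time.
    """
    events: List[List[Hit]] = []
    window_end: Optional[int] = None  # end of the currently open window, if any
    for hit in sorted(hits, key=lambda h: h.time_ns):
        if window_end is not None and hit.time_ns < window_end:
            events[-1].append(hit)                  # inside an open window: record it
        elif hit.amplitude > threshold:
            events.append([hit])                    # trigger fires: open a new window
            window_end = hit.time_ns + window_ns
        # otherwise the hit is discarded and cannot be recovered later
    return events
```

Anything that arrives before the trigger or after the window closes is lost for good, so a weak signal that later turns out to be interesting cannot be recovered from the saved data.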

Now, nuclear physicists are replacing triggered systems with software-based streaming readout systems. These systems harness artificial intelligence and machine learning tools to process, in real time, the vast amounts of data that nuclear physics experiments produce.

In this approach, all data are streamed to a data center to be analyzed, tagged, and filtered. The system automatically sifts through the enormous amount of data to filter out unnecessary background and record the interesting bits. With this work done by the streaming readout system, the subsequent data analysis can take a fraction of the time it would otherwise require.
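To make the streaming approach concrete, here is a minimal Python sketch under stated assumptions. Instead of discarding data at readout time, every hit is kept; software then groups hits that are close in time into candidate events, tags each one, and sorts them. The hit format, the clustering rule, and the `energy_tag` function are hypothetical: `energy_tag` is a simple amplitude cut standing in for the AI/ML classifiers the article describes, not the method used in the published tests. The names `Hit`, `cluster_in_time`, `energy_tag`, `stream_process`, and `max_gap_ns` are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class Hit:
    """One digitized detector signal (hypothetical format)."""
    time_ns: int      # timestamp in nanoseconds
    channel: int      # detector channel that fired
    amplitude: float  # signal size in arbitrary units


def cluster_in_time(hits: Iterable[Hit], max_gap_ns: int) -> List[List[Hit]]:
    """Group the full, time-ordered stream into clusters separated by quiet gaps."""
    clusters: List[List[Hit]] = []
    for hit in sorted(hits, key=lambda h: h.time_ns):
        if clusters and hit.time_ns - clusters[-1][-1].time_ns <= max_gap_ns:
            clusters[-1].append(hit)   # close to the previous hit: same cluster
        else:
            clusters.append([hit])     # gap too large: start a new cluster
    return clusters


def energy_tag(cluster: List[Hit]) -> str:
    """Placeholder classifier (assumption): a real system might use a trained ML model."""
    total = sum(h.amplitude for h in cluster)
    return "candidate" if total > 1.0 else "background"


def stream_process(
    hits: Iterable[Hit],
    max_gap_ns: int,
    classify: Callable[[List[Hit]], str] = energy_tag,
) -> Dict[str, List[List[Hit]]]:
    """Cluster every hit, then tag and sort each cluster; nothing is dropped."""
    tagged: Dict[str, List[List[Hit]]] = {}
    for cluster in cluster_in_time(hits, max_gap_ns):
        tagged.setdefault(classify(cluster), []).append(cluster)
    return tagged
```

Because the raw hits are still available after tagging, a better classifier can be passed as `classify` and rerun over the same stream, which is the kind of flexibility a hardware trigger gives up once it has discarded data.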

More information: Fabrizio Ameli et al., Streaming readout for next generation electron scattering experiments, The European Physical Journal Plus (2022). DOI: 10.1140/epjp/s13360-022-03146-z

Provided by US Department of Energy