August 2, 2007 feature

Tongue movements allow quadriplegics to control computers

Using the pressure waves in the ear caused by tongue movements, researchers have designed a technique for interfacing with computers. For the millions of people living with spinal cord injuries, this hands-free, non-intrusive way of working with computers could make more independent and productive lives possible.

Researchers Ravi Vaidyanathan et al., from the Naval Postgraduate School, Southern Illinois University, Case Western Reserve University, the University of Southampton, and Think-A-Move, Ltd., recently published their results on tongue control. They showed that a small microphone inserted into the ear can detect the air pressure changes caused by the tongue, and that these signals can be distinguished from one another and mapped to computer commands. The researchers have also designed a test and classification system to distinguish the signals of four different tongue movements; the signals are unique to each individual.

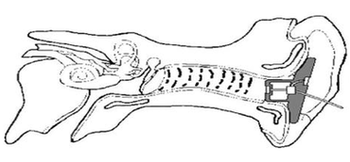

The group explains that certain tongue movements can cause fluctuations in air pressure to and from the ear. Although the exact mechanism of transfer is unknown, their results definitively demonstrate that when one moves their tongue, the resulting waves within the mouth propagate through bone, tissue and air into the ear canal.

“The idea of recording signals out of the ear was first put forward by a colleague (formerly with the company Think-A-Move, Ltd. in Cleveland, Ohio) with whom I started working in 2001, just after finishing my doctorate,” Vaidyanathan told PhysOrg.com. “When one thinks, concentrates or moves, there are a range of reactions that create signals which can be recorded from the body. If you concentrate with a level of intensity, for example, your heart rate, breathing, and various electrical and acoustic signatures all change—sensors can record, and possibly recognize this.”

Vaidyanathan explained that many researchers have used recording electrodes (most often on the head for brain activity) to capture such signals, but the recordings can be difficult to classify and slow to respond in real-time control.

“My colleague questioned if there might be another location on the body where such signals can be recorded, and suggested the ear,” Vaidyanathan said. “Signals from the mouth were intuitively logical given the relationship between the aural and oral cavities—you can observe this simply by ‘popping’ your ears.”

After winning a grant from the National Institutes of Health to design a wheelchair for quadriplegics, Vaidyanathan started putting the team together. Lalit Gupta, a professor at Southern Illinois University, contributed his experience in EEG signal recognition to implement the algorithms for recognizing tongue movements from pressure waves in the ear.

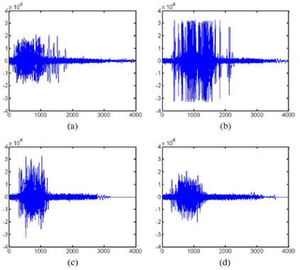

These pressure waves, which are essentially sound waves, have different wavelengths and amplitudes corresponding to the direction, speed and intensity of the tongue movement. The waves have a low frequency, below 200 Hz, so a filter cuts off everything above that point to exclude sources other than tongue movements. Since the ear canal is so well suited to capturing and amplifying sound waves, one can measure these waves with a microphone within the ear.
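To make the filtering step concrete, here is a minimal sketch of a low-pass filter with a 200 Hz cutoff. Only the cutoff comes from the article; the first-order filter design, the function names and the 2,000 Hz sample rate used to try it out are assumptions for illustration, not the researchers' implementation.

```python
import math


def low_pass(samples, sample_rate_hz, cutoff_hz=200.0):
    """Apply a first-order (RC-style) low-pass filter, sample by sample."""
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor between 0 and 1
    filtered = []
    previous = 0.0
    for x in samples:
        # Move the output a fraction alpha of the way toward the new input.
        previous = previous + alpha * (x - previous)
        filtered.append(previous)
    return filtered


def rms(samples):
    """Root-mean-square level of a signal."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def tone(freq_hz, sample_rate_hz, seconds=1.0):
    """Generate a unit-amplitude sine wave, used here as a test signal."""
    n = int(sample_rate_hz * seconds)
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate_hz)
            for i in range(n)]
```

Comparing `rms(low_pass(tone(50, 2000), 2000))` with `rms(low_pass(tone(800, 2000), 2000))` shows the 50 Hz tone passing almost unchanged while the 800 Hz tone is strongly attenuated. A first-order filter rolls off gently, so a practical device would probably use a steeper design.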

Eight individuals tested the new technology, first calibrating their unique tongue movements (up, down, left, and right) to create a personalized reference template. The results showed that tongue movements were detected accurately 97% of the time, encouraging the researchers to believe that the method can offer realistic precision.
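The calibrate-then-classify idea can be sketched as simple template matching: average a user's calibration recordings for each movement into a template, then label a new signal with the template it resembles most. The article does not describe the actual classifier, so the averaging, the normalized-correlation score and all function names below are assumptions.

```python
import math


def build_template(recordings):
    """Average several equal-length calibration recordings into one template."""
    count = len(recordings)
    return [sum(column) / count for column in zip(*recordings)]


def similarity(a, b):
    """Normalized correlation of two equal-length signals, in [-1, 1]."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    # A flat signal has no shape to compare, so treat it as uncorrelated.
    return numerator / denominator if denominator else 0.0


def classify(signal, templates):
    """Return the label whose template is most similar to the signal."""
    return max(templates, key=lambda label: similarity(signal, templates[label]))
```

Here `templates` would be a dictionary such as `{"up": build_template(up_recordings), ...}` built once per user, which reflects the article's point that the reference template is personalized.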

“Experiments thus far indicate that for activities such as speaking, eating, even smoking a cigarette, there is no need to remove, or even deactivate the device,” Vaidyanathan said. “The tongue movement signals seem unique in terms of frequency, power, etc., and appear to have distinguishable signatures in comparison to other oral activity, allowing for separation through filtering. This is, however, an area I am hoping to explore further with deeper rigor in future work. This could involve new pattern recognition algorithms and adaptive filtering techniques.”

In terms of simplicity and computation speed, the researchers found that just four tongue movements work best, but they also predict that compound tongue movements could offer more variation, if needed. For example, a quick “double click” consisting of two simple tongue movements separated by a brief pause (such as up-up, up-down, up-right, etc.) could still use the same detection and classification algorithms, and performance would remain high.
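One way to picture compound movements is as a post-processing step on top of the existing classifier: two already-classified movements that arrive within a short pause are merged into one command. The sketch below assumes a hypothetical event format of `(time_in_seconds, label)` pairs and a hypothetical 0.5-second pause limit; neither comes from the article.

```python
def group_compound(events, max_pause=0.5):
    """Merge pairs of movements separated by a brief pause into one command.

    events: list of (time_in_seconds, label) tuples, sorted by time.
    Returns a list of command strings such as "up-up" or "left".
    """
    commands = []
    i = 0
    while i < len(events):
        time, label = events[i]
        has_next = i + 1 < len(events)
        if has_next and events[i + 1][0] - time <= max_pause:
            # Two movements close together form a compound command.
            commands.append(f"{label}-{events[i + 1][1]}")
            i += 2
        else:
            commands.append(label)
            i += 1
    return commands
```

For example, `group_compound([(0.0, "up"), (0.3, "up"), (2.0, "left"), (4.0, "down"), (4.2, "right")])` returns `["up-up", "left", "down-right"]`. Because the grouping only looks at labels and timestamps, the detection and classification stages stay unchanged.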

According to the National Spinal Cord Injury Statistical Center (NSCISC), 11,000 new spinal cord injuries occur in the US every year, half of which cause quadriplegia. Other people who have brain diseases, suffer strokes, or develop arthritis may also benefit from this non-intrusive and easy-to-operate method for computer control.

“I can’t speak to the timescale for commercial availability of the device, which will ultimately be determined by industry,” Vaidyanathan said. “However, Think-A-Move, Ltd. is in the process of commercializing products for both tongue movement and speech recognition out of the ear. Devices for both should be available in the near future.”

Citation: Vaidyanathan, Ravi, Chung, Beomsu, Gupta, Lalit, Kook, Hyunseok, Kota, Srinivas, and West, James D. “Tongue-Movement Communication and Control Concept for Hands-Free Human-Machine Interfaces.” IEEE Transactions on Systems, Man, and Cybernetics—Part A: Systems and Humans, Vol. 37, No. 4, July 2007.

Copyright 2007 PhysOrg.com.
