Researchers improve automated recognition of human body movements in videos

An algorithm developed through a collaboration between Disney Research Pittsburgh and Boston University can improve the automated recognition of actions in videos, a capability that could aid video search and retrieval, video analysis and human-computer interaction research.

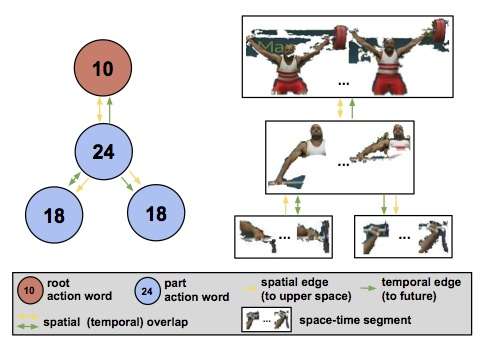

The core idea behind the new method is to express each action, whether a pedestrian strolling down the street or a gymnast performing a somersault, as a series of space-time patterns. These are built from elementary body movements, such as a leg moving up or an arm flexing. The movements also combine into spatial and temporal patterns: a leg moves up and then the arm flexes, or the leg moves up below the arm. A pattern might also describe a hierarchical relationship, such as a leg moving as part of the more global movement of the body.

"Modeling actions as collections of such patterns, instead of a single pattern, allows us to account for variations in action execution," said Leonid Sigal, senior research scientist at Disney Research Pittsburgh. The method can thus recognize a tuck or a pike by a diver even if one diver's execution differs from another's, or if the angle of the dive hides part of one diver's body but not another's.
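To make the idea of an action as a collection of space-time patterns more concrete, the Python sketch below is a toy illustration, not the researchers' algorithm. Everything in it is an assumption made for illustration: the movement labels (`leg_up`, `arm_flex`, `body`), the three relation names (`then`, `below`, `part_of`), the hand-written trees, and the score, which is simply the fraction of an action's trees found in a video. In the published work the trees are discovered automatically from videos labeled only with the action they contain, which this sketch does not attempt.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    """A hypothetical detected elementary movement placed in space-time."""
    label: str  # e.g. "leg_up" or "arm_flex" (illustrative labels)
    x: float    # horizontal centre in the frame
    y: float    # vertical centre; larger y means lower in the image
    t: float    # time in seconds
    # Region covered by the movement, (x0, y0, x1, y1), used for "part_of" tests.
    box: tuple[float, float, float, float] = (0.0, 0.0, 0.0, 0.0)


def holds(relation: str, parent: Detection, child: Detection) -> bool:
    """Test one assumed pairwise relation between a parent and a child detection."""
    if relation == "then":      # temporal: child happens after parent
        return child.t > parent.t
    if relation == "below":     # spatial: child is lower in the frame than parent
        return child.y > parent.y
    if relation == "part_of":   # hierarchical: child lies inside parent's region
        x0, y0, x1, y1 = parent.box
        return x0 <= child.x <= x1 and y0 <= child.y <= y1
    raise ValueError(f"unknown relation: {relation}")


@dataclass
class Node:
    """One node of a space-time tree; each child carries its relation to this node."""
    label: str
    children: list[tuple[str, Node]] = field(default_factory=list)


def match_at(node: Node, det: Detection, dets: list[Detection]) -> bool:
    """True if the subtree rooted at `node` matches with `node` assigned to `det`."""
    if det.label != node.label:
        return False
    # Every child must be matched by some detection that satisfies its relation.
    # For simplicity, one detection may be reused for several nodes.
    return all(
        any(holds(rel, det, c) and match_at(child, c, dets) for c in dets)
        for rel, child in node.children
    )


def tree_matches(root: Node, dets: list[Detection]) -> bool:
    """True if the whole tree can be found anywhere in the video's detections."""
    return any(match_at(root, d, dets) for d in dets)


def ensemble_score(trees: list[Node], dets: list[Detection]) -> float:
    """Fraction of an action's trees found in the video (a simple stand-in score)."""
    return sum(tree_matches(t, dets) for t in trees) / len(trees)


def classify(models: dict[str, list[Node]], dets: list[Detection]) -> str:
    """Pick the action whose tree ensemble matches the video best."""
    return max(models, key=lambda name: ensemble_score(models[name], dets))


# Hand-written example ensembles (hypothetical, for illustration only).
dive_trees = [
    Node("body", [("part_of", Node("leg_up"))]),
    Node("leg_up", [("then", Node("arm_flex"))]),
    Node("body", [("part_of", Node("arm_flex"))]),
]
walk_trees = [Node("leg_up", [("then", Node("leg_up"))])]
models = {"dive": dive_trees, "walk": walk_trees}

body = Detection("body", 5, 5, 0, box=(0, 0, 10, 10))
leg = Detection("leg_up", 5, 8, 1)
arm = Detection("arm_flex", 5, 3, 2)

video_a = [body, leg, arm]  # full view of the diver
video_b = [body, arm]       # legs hidden by the camera angle

print(ensemble_score(dive_trees, video_a))  # 1.0
print(ensemble_score(dive_trees, video_b))  # 0.3333333333333333
print(classify(models, video_b))            # dive
```

Because each tree is scored independently, the occluded video (`video_b`) still matches the one tree that involves only the body and the arm, so it is recognized as a dive with a lower score rather than rejected outright. That is the kind of tolerance to variation and occlusion that motivates using a collection of patterns instead of a single one.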

The method, developed by Sigal with Shugao Ma, a Disney Research intern and Ph.D. student at Boston University, and Ma's adviser, Prof. Stan Sclaroff, can detect these structures directly from video without fine-level supervision: it needs only a label stating which action a video contains.

"Knowing that a video contains 'walking' or 'diving,' we automatically discover which elements are most important and discriminative and with which relationships they need to be strung together into patterns to improve recognition," Ma said.

Representing actions as space-time trees in this way not only improves recognition but also yields a concise and efficient representation, said the researchers, who will present their findings at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2015), June 7-12 in Boston.

The researchers tested the method on standard video datasets covering 10 different sports actions and four interactions, such as kisses and hugs, taken from TV programs. On these datasets, the Disney algorithm outperformed previously reported methods, identifying elements with a richer set of relations among them. They also found that space-time trees learned on one dataset to distinguish hugs from kisses showed promise in distinguishing hugs or kisses from other actions, such as eating or using a phone, that were not part of the original dataset.

More information: "Space-Time Tree Ensemble for Action Recognition" www.disneyresearch.com/wp-cont … ecognition-Paper.pdf

Provided by Disney Research