May 10, 2010 report

Next generation hard drives may store 10 terabits per sq inch: research

(PhysOrg.com) -- The majority of today's hard disks use perpendicular recording, which means their storage densities are limited to a few hundred gigabits per square inch. Scientists have for some time been trying to find ways of raising that limit, and a newly proposed method could stretch it as high as ten terabits (Tb) per square inch.

The research, published in this week’s Nature Photonics, describes a method that combines two writing procedures to store data on hard drives. Each procedure writes tightly packed data without disturbing the surrounding bits, avoiding the usual problem with dense packing: heat generated in the write head can induce superparamagnetism, which can interfere with neighboring bits and jumble their data (by flipping a 0 state to 1 or vice versa).

One of the procedures used is bit-patterned recording (BPR), which writes to “magnetic islands” lithographed into the surface; the islands isolate the write events and prevent superparamagnetic effects from occurring. The other is thermally-assisted magnetic recording (TAR), in which a tiny region of the surface is heated while data are being written and then cooled. The heat allows the surface to magnetize quickly, and this, together with the small-grained design of the surface and the distance between bits, helps to prevent superparamagnetism.

Both methods present difficulties: BPR is limited by the need for a write head that exactly matches the size of the magnetic islands, while TAR is limited by its need for small-grain media that can tolerate heating and cooling, and by the difficulty of controlling the area heated. It turns out that combining the two methods addresses these problems. BPR’s magnetic islands remove the need for small-grain media, and TAR writes only to the heated bit, so the size of the write head is less important. Using the two methods in combination means surrounding bits are unaffected, and data can be tightly packed on less expensive surfaces.

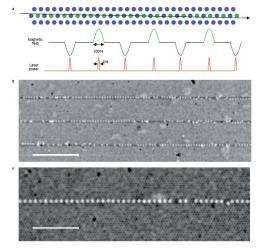

The new system, developed by Barry C. Stipe and colleagues from Hitachi research groups in California and Japan, uses a plasmonic nano-antenna to write the data, with laser light guided via a waveguide to the antenna, where it is transformed into a charge. The “E-shaped” antenna has a 20-25 nanometer (nm) wide middle prong that concentrates the charge on an area as tiny as 15 nm in diameter, rather like a lightning rod, with the outer prongs acting as grounds.

The researchers obtained a write speed of 250 megabits per second with a low error rate. Data tracks were separated by 24 nm, and the researchers readily achieved a storage density of one terabit per square inch of high-quality data. They believe 10 terabits per square inch is theoretically possible.
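To put these figures in perspective, the paper's title quotes the density as 1.5 Pb m^(−2), while the article uses terabits per square inch. The short Python sketch below converts between the two and estimates how much surface each bit occupies. It is only back-of-the-envelope arithmetic: the function names are illustrative, and the assumptions that each bit occupies a rectangle of track pitch times bit length, and that a 10 Tb bit cell would be square, are ours rather than the researchers'.

```python
import math

# Exact definition: 1 inch = 0.0254 m. Everything else follows from it.
INCH_IN_METERS = 0.0254
NM_PER_INCH = INCH_IN_METERS * 1e9  # nanometers in one inch


def pb_per_m2_to_tb_per_in2(pb_per_m2):
    """Convert petabits per square meter to terabits per square inch."""
    bits_per_m2 = pb_per_m2 * 1e15
    bits_per_in2 = bits_per_m2 * INCH_IN_METERS ** 2
    return bits_per_in2 / 1e12


def area_per_bit_nm2(tb_per_in2):
    """Surface area available to one bit, in square nanometers."""
    return NM_PER_INCH ** 2 / (tb_per_in2 * 1e12)


def bit_length_nm(tb_per_in2, track_pitch_nm):
    """Bit length along the track, assuming a rectangular bit cell
    (track pitch x bit length). This is a simplifying assumption."""
    return area_per_bit_nm2(tb_per_in2) / track_pitch_nm


if __name__ == "__main__":
    print(f"1.5 Pb/m^2 = {pb_per_m2_to_tb_per_in2(1.5):.3f} Tb/in^2")
    print(f"Area per bit at 1 Tb/in^2: {area_per_bit_nm2(1.0):.1f} nm^2")
    print(f"Bit length at 24 nm track pitch: {bit_length_nm(1.0, 24.0):.1f} nm")
    # Hypothetical square bit cell at the projected 10 Tb/in^2 limit.
    side = math.sqrt(area_per_bit_nm2(10.0))
    print(f"Square bit pitch at 10 Tb/in^2: {side:.1f} nm")
```

Under these assumptions, 1.5 Pb m^(−2) works out to about 0.97 Tb per square inch, which matches the "one terabit" figure. With tracks 24 nm apart, each bit is then roughly 27 nm long, and reaching 10 Tb per square inch would require bits spaced only about 8 nm apart in each direction.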

More information: Magnetic recording at 1.5 Pb m^(−2) using an integrated plasmonic antenna, Barry C. Stipe et al., Nature Photonics, Published online: 2 May 2010. doi:10.1038/nphoton.2010.90

© 2010 PhysOrg.com