Cystorm supercomputer unleashes 28.16 trillion calculations per second

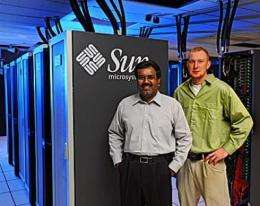

Srinivas Aluru recently stepped between the two rows of six tall metal racks, opened up the silver doors and showed off the 3,200 computer processor cores that power Cystorm, Iowa State University's second supercomputer.

And there's a lot of raw power in those racks.

Cystorm, a Sun Microsystems machine, boasts a peak performance of 28.16 trillion calculations per second. That's nearly five times the peak of CyBlue, an IBM Blue Gene/L supercomputer that's been on campus since early 2006 and uses 2,048 processors to reach a peak of 5.7 trillion calculations per second.

Aluru, the Ross Martin Mehl and Marylyne Munas Mehl Professor of Computer Engineering and the leader of the Cystorm project, said the new machine also scores high on a more realistic test of a supercomputer's actual performance: 15.44 trillion calculations per second, compared to CyBlue's 4.7 trillion. By that measure, Cystorm is about 3.3 times as powerful as CyBlue.
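The comparisons in the two paragraphs above can be checked with a few lines of arithmetic. The sketch below recomputes the ratios from the article's own figures and adds two derived numbers the article does not state: each machine's efficiency (realistic result divided by peak) and its peak rate per core or processor. It assumes each "calculation" is one floating-point operation and that the "more realistic test" is a LINPACK-style benchmark like the one behind the TOP500 list; the article names neither. The constant and function names are illustrative only.

```python
# Figures quoted in the article, in trillions of calculations per second.
# Assumption: a "calculation" is treated as one floating-point operation.
CYSTORM_PEAK = 28.16
CYSTORM_MEASURED = 15.44  # result on the "more realistic test"
CYSTORM_CORES = 3200

CYBLUE_PEAK = 5.7
CYBLUE_MEASURED = 4.7
CYBLUE_PROCESSORS = 2048  # the article says "processors", not "cores"


def ratio(a: float, b: float) -> float:
    """Return a / b rounded to two decimal places."""
    return round(a / b, 2)


def efficiency(measured: float, peak: float) -> float:
    """Percentage of theoretical peak achieved on the realistic test."""
    return round(100 * measured / peak, 1)


def per_core_billions(peak_trillions: float, cores: int) -> float:
    """Peak rate per core (or processor), in billions of calculations per second."""
    return round(peak_trillions * 1000 / cores, 2)


if __name__ == "__main__":
    print("peak ratio:", ratio(CYSTORM_PEAK, CYBLUE_PEAK))                # 4.94
    print("measured ratio:", ratio(CYSTORM_MEASURED, CYBLUE_MEASURED))    # 3.29
    print("Cystorm efficiency %:", efficiency(CYSTORM_MEASURED, CYSTORM_PEAK))  # 54.8
    print("CyBlue efficiency %:", efficiency(CYBLUE_MEASURED, CYBLUE_PEAK))     # 82.5
    print("Cystorm per core:", per_core_billions(CYSTORM_PEAK, CYSTORM_CORES))  # 8.8
    print("CyBlue per processor:", per_core_billions(CYBLUE_PEAK, CYBLUE_PROCESSORS))  # 2.78
```

The two ratios round to the article's "five times" and "3.3 times." The efficiency figures suggest why the realistic gap is smaller than the peak gap: on these numbers, CyBlue gets closer to its theoretical maximum (about 82 percent) than Cystorm does (about 55 percent).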

Those performance numbers, however, do not earn Cystorm a spot on the TOP500 list of the world's fastest supercomputers. (When CyBlue went online three years ago, it was the 99th most powerful supercomputer on the list.)

"Cystorm is going to be very good for data-intensive research projects," Aluru said. "The capabilities of Cystorm will help Iowa State researchers do new, pioneering research in their fields."

The supercomputer is targeted for work in materials science, power systems and systems biology.

Aluru said materials scientists will use the supercomputer to analyze data from the university's Local Electrode Atom Probe microscope, an instrument that can gather data and produce images at the atomic scale of billionths of a meter. Systems biologists will use the supercomputer to build gene networks that will help researchers understand how thousands of genes interact with each other. Power systems researchers will use the supercomputer to study the security, reliability and efficiency of the country's energy infrastructure. And computer engineers will use the supercomputer to build a software infrastructure that helps users make decisions by identifying relevant information sources.

"These research efforts will lead to significant advances in the penetration of high performance computing technology," says a summary of the Cystorm project. "The project will bring together multiple departments and research centers at Iowa State University and further enrich interdisciplinary culture and training opportunities."

Joining Aluru on the Cystorm project are five Iowa State researchers: Maneesha Aluru, an associate scientist in electrical and computer engineering and genetics, development and cell biology; Baskar Ganapathysubramanian, an assistant professor and William March Scholar in Mechanical Engineering; James McCalley, the Harpole Professor in Electrical Engineering; Krishna Rajan, a professor of materials science and engineering; and Arun Somani, an Anson Marston Distinguished Professor in Engineering and Jerry R. Junkins Endowed Chair of electrical and computer engineering. Steve Nystrom, a systems support specialist for the department of electrical and computer engineering, is the system administrator for Cystorm.

The researchers purchased the computer with a $719,000 grant from the National Science Foundation, $400,000 from Iowa State colleges, departments and researchers, and a $200,000 equipment donation from Sun Microsystems.

Because of Cystorm, the computer company will designate Iowa State a Sun Microsystems Center of Excellence for Engineering Informatics and Systems Biology.

While Cystorm is much more powerful than CyBlue, Aluru said Iowa State's first supercomputer will still be used by researchers across campus.

"CyBlue will still be around," Aluru said. "Researchers will use both systems to solve problems. Both systems enhance the research capabilities of Iowa State."

Source: Iowa State University