Seeking efficiency, scientists run visualizations directly on supercomputers

(PhysOrg.com) -- If you wanted to perform a single run of a current model of the explosion of a star on your home computer, it would take more than three years just to download the data. In order to do cutting-edge astrophysics research, scientists need a way to more quickly compile, execute and especially visualize these incredibly complex simulations.

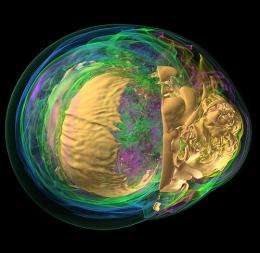

These days, many scientists generate quadrillions of data points for visualizations of everything from supernovas to protein structures—and they’re quickly overwhelming current computing capabilities. Scientists at the U.S. Department of Energy's Argonne National Laboratory are exploring other ways to speed up the process, using a technique called software-based parallel volume rendering.

Volume rendering is a technique for making sense of the billions of tiny data points collected from an X-ray, an MRI, or a researcher’s simulation. For example, bone is denser than muscle, so an MRI that measures the density of every cubic millimeter of your arm will register higher readings for the radius bone in your forearm.
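To turn density readings like these into a picture, a volume renderer typically casts a ray from the viewer through each pixel, samples the density at points along the ray, maps each sample to a color and an opacity, and blends the samples from front to back. The minimal sketch below shows that blending for a single ray. The transfer function (denser means brighter and more opaque) and the sample values are hypothetical choices for illustration, not details of Argonne's renderer.

```python
def transfer_function(density):
    """Hypothetical transfer function: denser material is brighter and more opaque."""
    color = density          # gray level in [0, 1]
    alpha = 0.9 * density    # opacity of one sample, in [0, 0.9]
    return color, alpha


def cast_ray(samples, early_exit=0.99):
    """Blend density samples ordered front (nearest the viewer) to back.

    Returns (color, alpha) for one pixel; color is premultiplied by alpha.
    """
    accumulated_color = 0.0
    accumulated_alpha = 0.0
    for density in samples:
        color, alpha = transfer_function(density)
        # Each new sample is only visible through whatever already lies in front of it.
        weight = (1.0 - accumulated_alpha) * alpha
        accumulated_color += weight * color
        accumulated_alpha += weight
        if accumulated_alpha >= early_exit:  # ray is effectively opaque; stop sampling
            break
    return accumulated_color, accumulated_alpha


# Hypothetical densities sampled along two rays through a forearm, in [0, 1].
through_bone = [0.1, 0.3, 0.9, 0.3]   # skin, muscle, bone, muscle
muscle_only = [0.1, 0.3, 0.3, 0.3]
print(cast_ray(through_bone))
print(cast_ray(muscle_only))
```

The ray that crosses bone comes out brighter, which is how the denser structure stands out in the final image. Stopping once a ray is nearly opaque is a common shortcut, since samples behind that point barely change the pixel.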

Argonne scientists are trying to find better, quicker ways to form a recognizable image from all of these points of data. Equations can be written to search for sudden density changes in the dataset that might set bone apart from muscle, and researchers can create a picture of the entire arm, with bone and muscle tissue in different colors.
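One simple form such an equation can take is a finite difference: compare each density reading with its neighbor and flag the places where the jump exceeds a threshold. The sketch below does this along a single line of readings across the forearm and then assigns each reading a color class. The profile values, the threshold, and the 0.6 bone cutoff are hypothetical; real datasets are three-dimensional, so renderers usually compute a 3D gradient rather than this one-dimensional difference.

```python
def find_boundaries(profile, threshold):
    """Return indices i where the density jumps sharply between samples i-1 and i."""
    boundaries = []
    for i in range(1, len(profile)):
        jump = abs(profile[i] - profile[i - 1])  # forward difference between neighbors
        if jump > threshold:
            boundaries.append(i)
    return boundaries


def classify(density, bone_cutoff=0.6):
    """Hypothetical two-class color map: dense readings are drawn as bone."""
    return "bone (white)" if density >= bone_cutoff else "muscle (red)"


# Hypothetical densities sampled in a line across the forearm.
profile = [0.30, 0.31, 0.32, 0.85, 0.88, 0.86, 0.33, 0.30]
print(find_boundaries(profile, threshold=0.2))  # -> [3, 6]
print([classify(d) for d in profile])
```

The two flagged indices mark where the line enters and leaves the bone; everything between them is colored as bone, and the readings outside are colored as muscle.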

“But on the scale that we’re working, creating a movie would take a very long time on your laptop—just rotating the image one degree could take days,” said Mark Hereld, who leads the visualization and analysis efforts at the Argonne Leadership Computing Facility.

The first step is to divide the data among many processing cores so that they can all work at once, a technique called parallel computing. On Argonne’s Blue Gene/P supercomputer, 160,000 computing cores work together in parallel. Today’s typical laptop, by comparison, has two cores.
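Dividing the data usually means splitting the simulation volume into pieces and giving one piece to each core. The sketch below splits a volume's z-slices into contiguous slabs as evenly as possible. Slabs are used here only for simplicity (large runs often cut the volume into 3D blocks instead), and the function name is hypothetical.

```python
def slab_decomposition(nz, num_cores):
    """Assign nz z-slices to num_cores cores as contiguous, near-equal slabs.

    Returns a list of half-open ranges (start, end), one per core, in rank order.
    """
    if num_cores <= 0:
        raise ValueError("num_cores must be positive")
    base, extra = divmod(nz, num_cores)
    slabs = []
    start = 0
    for rank in range(num_cores):
        size = base + (1 if rank < extra else 0)  # first `extra` ranks take one more slice
        slabs.append((start, start + size))
        start += size
    return slabs


print(slab_decomposition(10, 4))  # -> [(0, 3), (3, 6), (6, 8), (8, 10)]
```

Every slice belongs to exactly one slab and slab sizes differ by at most one slice, so each core can work on its own piece without waiting for the others and no core is left with much more work than the rest.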

Usually, the supercomputer’s work stops once the simulation data have been generated, and the data are then sent to a separate set of graphics processing units (GPUs), which create the final visualizations. But the driving commercial force behind GPU development has been the video game industry, so GPUs aren’t always well suited to scientific tasks. In addition, the sheer amount of data that must be transferred between machines eats up valuable time and disk space.

“It’s so much data that we can’t easily ask all of the questions that we want to ask: each new answer creates new questions and it just takes too much time to move the data from one calculation to the next,” said Hereld. “That drives us to look for better and more efficient ways to organize our computational work.”

Argonne researchers wanted to know if they could improve performance by skipping the transfer to the GPUs and instead performing the visualizations right there on the supercomputer. They tested the technique on a set of astrophysics data and found that they could indeed increase the efficiency of the operation.
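When rendering happens on the supercomputer itself, each core typically renders only its own piece of the volume into a partial image, and the partial images are then blended in depth order. The sketch below illustrates the idea for one pixel: a ray is split into three pieces as if three cores owned them, each piece is rendered separately, and the results are combined with the Porter-Duff "over" operator. The transfer function and sample values are hypothetical, and the article does not say which compositing scheme Argonne used (binary swap and direct-send are two common ways of exchanging the partial images).

```python
def render_segment(samples):
    """Front-to-back blend of one core's share of a ray.

    Uses a hypothetical transfer function (color = density, alpha = 0.9 * density)
    and returns a premultiplied (color, alpha) pair for one pixel.
    """
    color, alpha = 0.0, 0.0
    for density in samples:
        sample_color, sample_alpha = density, 0.9 * density
        weight = (1.0 - alpha) * sample_alpha
        color += weight * sample_color
        alpha += weight
    return color, alpha


def over(front, back):
    """Porter-Duff 'over' for premultiplied (color, alpha) pixels."""
    front_color, front_alpha = front
    back_color, back_alpha = back
    return (front_color + (1.0 - front_alpha) * back_color,
            front_alpha + (1.0 - front_alpha) * back_alpha)


def composite(partials):
    """Combine partial pixels that are already sorted front to back."""
    result = (0.0, 0.0)  # start fully transparent
    for partial in partials:
        result = over(result, partial)
    return result


ray = [0.1, 0.3, 0.9, 0.3, 0.2, 0.0, 0.5, 0.7]
serial = render_segment(ray)  # one core renders the whole ray
# Three hypothetical cores each own a contiguous piece of the ray.
pieces = [ray[0:3], ray[3:6], ray[6:8]]
parallel = composite([render_segment(p) for p in pieces])
print(serial, parallel)  # equal up to floating-point rounding
```

Because "over" is associative, blending the pieces in front-to-back order reproduces the serial result. That property is what lets many cores render independently and combine their work at the end.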

“We were able to scale up to large problem sizes of over 80 billion voxels per time step and generated images up to 16 megapixels,” said Tom Peterka, a postdoctoral appointee in Argonne’s Mathematics and Computer Science Division.
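Those figures give a rough sense of why rendering in place pays off. The back-of-the-envelope calculation below compares one time step of raw data with one finished image. The voxel and pixel counts come from the quote above; the bytes per voxel, the bytes per pixel, and the 10-gigabit-per-second link speed are assumptions chosen only for illustration.

```python
VOXELS_PER_STEP = 80e9       # from the article: "over 80 billion voxels per time step"
IMAGE_PIXELS = 16e6          # from the article: "images up to 16 megapixels"
BYTES_PER_VOXEL = 4          # assumption: one 32-bit float per voxel
BYTES_PER_PIXEL = 4          # assumption: 8-bit red, green, blue, and alpha channels
LINK_BYTES_PER_S = 10e9 / 8  # assumption: a 10 Gb/s network link

raw_bytes = VOXELS_PER_STEP * BYTES_PER_VOXEL
image_bytes = IMAGE_PIXELS * BYTES_PER_PIXEL

print(f"raw data per time step: {raw_bytes / 1e9:.0f} GB")              # 320 GB
print(f"one finished image:     {image_bytes / 1e6:.0f} MB")            # 64 MB
print(f"size ratio:             {raw_bytes / image_bytes:.0f}x")        # 5000x
print(f"transfer time, raw:     {raw_bytes / LINK_BYTES_PER_S:.0f} s")  # 256 s
print(f"transfer time, image:   {image_bytes / LINK_BYTES_PER_S:.2f} s")  # 0.05 s
```

Under these assumptions, moving a single time step of raw data takes minutes, while moving the finished image takes a fraction of a second.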

Because the Blue Gene/P's own processors can visualize the data as they are analyzed, Argonne's scientists can investigate physical, chemical, and biological phenomena in much greater spatial and temporal detail.

According to Hereld, this new visualization method could enhance research in a wide variety of disciplines. “In astrophysics, studying how stars burn and explode pulls together all kinds of physics: hydrodynamics, gravitational physics, nuclear chemistry and energy transport,” he said. “Other models study the migration of dangerous pollutants through complex structures in the soil to see where they’re likely to end up; or combustion in cars and manufacturing plants—where fuel is consumed and whether it’s efficient.”

“Those kinds of problems often lead to questions that are very complicated to pose mathematically,” Hereld said. “But when you can simply watch a star explode through visualization of the simulation, you can gain insight that’s not available any other way.”

Argonne’s work in advanced computing is supported by the Department of Energy’s Office of Advanced Scientific Computing Research.

Provided by Argonne National Laboratory