Brain on a chip?

(PhysOrg.com) -- How does the human brain run itself without any software? Find that out, say European researchers, and a whole new field of neural computing will open up. A prototype 'brain on a chip' is already working.

“We know that the brain has amazing computational capabilities,” remarks Karlheinz Meier, a physicist at Heidelberg University. “Clearly there is something to learn from biology. I believe that the systems we are going to develop could form part of a new revolution in information technology.”

It’s a strong claim, but Meier is coordinating the EU-supported FACETS project, which brings together scientists from 15 institutions in seven countries to do just that. Inspired by research in neuroscience, they are building a ‘neural’ computer that will work just like the brain but on a much smaller scale.

The human brain is often likened to a computer, but it differs from everyday computers in three important ways: it consumes very little power, it works well even if components fail, and it seems to work without any software.

How does it do that? Nobody yet knows, but a team within FACETS is completing an exhaustive study of brain cells - neurons - to find out exactly how they work, how they connect to each other and how the network can ‘learn’ to do new things.

Mapping brain cells

“We are now in a situation like molecular biology was a few years ago when people started to map the human genome and make the data available,” Meier says. “Our colleagues are recording data from neural tissues describing the neurons and synapses and their connectivity. This is being done almost on an industrial scale, recording data from many, many neural cells and putting them in databases.”

Meanwhile, another FACETS group is developing simplified mathematical models that will accurately describe the complex behaviour that is being uncovered. Although neurons could be modelled in full detail, such detailed models would be far too complicated to implement in either software or hardware.
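To give a feel for what a ‘simplified mathematical model’ of a neuron can look like, here is a minimal sketch of a leaky integrate-and-fire neuron, a widely used textbook simplification. The article does not say which models the FACETS group uses, so the choice of model, the function name `simulate_lif` and every parameter value below are assumptions made purely for illustration.

```python
def simulate_lif(current, dt=0.1, tau=10.0, v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron (illustrative, not the FACETS model).

    Integrates dv/dt = (-(v - v_rest) + I) / tau with a forward Euler step
    and returns the times at which the membrane variable crosses threshold.
    All units and parameter values are arbitrary assumptions.
    """
    v = v_rest
    spike_times = []
    for step, i_in in enumerate(current):
        # The leak pulls v back towards rest; the input current pushes it up.
        v += dt * (-(v - v_rest) + i_in) / tau
        if v >= v_thresh:
            spike_times.append(step * dt)
            v = v_reset  # reset after each spike
    return spike_times


# A constant input above threshold makes the neuron fire repeatedly;
# a constant input below threshold never produces a spike.
strong = simulate_lif([1.5] * 1000)
weak = simulate_lif([0.5] * 1000)
print(f"spikes with strong input: {len(strong)}, with weak input: {len(weak)}")
```

The whole cell is reduced to one state variable and a threshold rule, which is the kind of trade-off between biological detail and implementability that the paragraph above describes.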

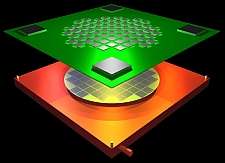

The goal is to use these models to build a ‘neural computer’ which emulates the brain. The first effort is a network of 300 neurons and half a million synapses on a single chip. The team used analogue electronics to represent the neurons and digital electronics to represent communications between them. It’s a unique combination.

Since the neurons are so small, the system runs 100,000 times faster than the biological equivalent and 10 million times faster than a software simulation. “We can simulate a day in one second,” Meier notes.
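The quoted figures can be checked with a little arithmetic. The sketch below uses only the two speed-up factors given in the article; the function names are hypothetical, and the software figure is simply what the two factors imply when taken together, not a measured benchmark.

```python
# Speed-up factors quoted in the article.
SPEEDUP_VS_BIOLOGY = 100_000        # chip vs. biological real time
SPEEDUP_VS_SOFTWARE = 10_000_000    # chip vs. software simulation

SECONDS_PER_DAY = 24 * 60 * 60      # 86,400 s of biological time


def hardware_seconds(biological_seconds):
    """Wall-clock time the chip needs to emulate a span of biological time."""
    return biological_seconds / SPEEDUP_VS_BIOLOGY


def software_seconds(biological_seconds):
    """Wall-clock time implied for a software simulation of the same span."""
    return hardware_seconds(biological_seconds) * SPEEDUP_VS_SOFTWARE


day_on_chip = hardware_seconds(SECONDS_PER_DAY)
day_in_software = software_seconds(SECONDS_PER_DAY)

print(f"One biological day on the chip: {day_on_chip:.3f} s")
print(f"Same day in software: {day_in_software / SECONDS_PER_DAY:.0f} days")
```

A day of biological time comes out at just under a second on the chip, in line with Meier's remark, while the two factors together imply that the software simulation in this comparison runs about 100 times slower than biology.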

The network is already being used by FACETS researchers to do experiments over the internet without needing to travel to Heidelberg.

New type of computing

But this ‘stage 1’ network was designed before the results came in from the mapping and modelling work. Now the team is working on stage 2, a network of 200,000 neurons and 50 million synapses that will incorporate all the neuroscience discoveries made so far.

To build it, the team is creating its network on a single 20 cm silicon disk, a ‘wafer’, of the type normally used to mass-produce chips before they are cut out of the wafer and packaged. This approach will make for a more compact device.

So-called ‘wafer-scale integration’ has rarely been used for this purpose, because such a large circuit will certainly have manufacturing flaws. “Our chips will have faults but they are each likely to affect only a single synapse or a single connection in the network,” Meier points out. “We can easily live with that. So we exploit the fault tolerance and use the entire wafer as a neural network.”
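Why a handful of dead synapses matters so little can be illustrated with a toy calculation. The sketch below is not the FACETS hardware or its fault model: it assumes a single model neuron that simply sums 1,000 equally weighted inputs, and the names `neuron_output`, `n_synapses` and `n_faulty` are invented for the example.

```python
import random


def neuron_output(inputs, weights, alive):
    """Weighted sum over the synapses that are still working."""
    return sum(x * w for x, w, ok in zip(inputs, weights, alive) if ok)


rng = random.Random(0)                      # fixed seed so runs are repeatable
n_synapses = 1000
inputs = [rng.random() for _ in range(n_synapses)]   # each value in [0, 1)
weights = [1.0 / n_synapses] * n_synapses            # equal weights (assumption)

healthy = neuron_output(inputs, weights, [True] * n_synapses)

# Knock out 1% of the synapses at random, as a stand-in for manufacturing faults.
n_faulty = 10
faulty = set(rng.sample(range(n_synapses), n_faulty))
alive = [i not in faulty for i in range(n_synapses)]
damaged = neuron_output(inputs, weights, alive)

loss = healthy - damaged
print(f"healthy={healthy:.4f} damaged={damaged:.4f} loss={loss:.4f}")
```

Because each synapse contributes at most 1/1000 of the total here, losing ten of them can shift the output by no more than 0.01. A single faulty transistor in a conventional digital circuit, by contrast, can make the whole chip unusable.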

How could we use a neural computer? Meier stresses that digital computers are built on principles that simply do not apply to devices modelled on the brain. To make them work requires a completely new theory of computing. Yet another FACETS group is already on the case. “Once you understand the basic principles you may hope to develop the hardware further, because biology has not necessarily found the best solution.”

Beyond the brain?

Practical neural computers could be only five years away. “The first step could be a little add-on to your computer at home, a device to handle very complex input data and to provide a simple decision,” Meier says. “A typical thing could be an internet search.”

In the longer term, he sees applications for neural computers wherever there are complex and difficult decisions to be made. Companies could use them, for example, to explore the consequences of critical business decisions before they are taken. In today’s gloomy economic climate, many companies will wish they already had one!

The FACETS project, which is supported by the EU’s Sixth Framework Programme for research, is due to end in August 2009 but the partners have agreed to continue working together for another year. They eventually hope to secure a follow-on project with support from both the European Commission and national agencies.

Meanwhile, the consortium has just obtained funding from the EU’s Marie Curie initiative to set up a four-year Initial Training Network to train PhD students in the interdisciplinary skills needed for research in this area.

Where could this go? Meier points out that neural computing, with its low-power demands and tolerance of faults, may make it possible to reduce components to molecular size. “We may then be able to make computing devices which are radically different and have amazing performance which, at some point, may approach the performance of the human brain - or even go beyond it!”

More information: facets.kip.uni-heidelberg.de/

Provided by ICT Results