September 26, 2023 dialog

Holographic hybridization technique allows changes of depth of field in recorded pictures and videos

Most imaging technologies available today, including smartphone cameras, digital video cameras, microscopes and telescopes, are based on direct imaging, in which a camera records a scene in a single step. Human vision works the same way: light from an object is collected by the eye's lens and focused onto the retina.

Holography, a sub-field of optics whose invention was recognized with the 1971 Nobel Prize in Physics, images objects indirectly. This indirect imaging process consists of at least two steps. In the first step, a camera records the object in a special way, using many optical components mounted on a vibration-isolated table. In the second step, the recorded intensity pattern is processed on a computer to reconstruct the object information.
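As a rough illustration of the record-then-compute idea, here is a simplified incoherent model borrowed from coded aperture imaging, not a true holographic simulation. The recording is modelled as the object convolved with an assumed random point spread function (PSF), and the computer recovers the object by cross-correlating the recording with that PSF. The random PSF, the 1D signals and the function names are hypothetical choices for illustration:

```python
import numpy as np

def record(obj: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """Step 1 (optical, simulated): the camera records the object convolved with the system PSF."""
    return np.real(np.fft.ifft(np.fft.fft(obj) * np.fft.fft(psf)))

def reconstruct(recording: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """Step 2 (computational): cross-correlate the recording with the mean-subtracted PSF."""
    kernel = psf - psf.mean()
    return np.real(np.fft.ifft(np.fft.fft(recording) * np.conj(np.fft.fft(kernel))))

n = 512
rng = np.random.default_rng(1)
psf = rng.random(n)                  # hypothetical random (speckle-like) intensity PSF

obj = np.zeros(n)
obj[[100, 300]] = 1.0                # two point sources

recording = record(obj, psf)         # looks like noise: no recognisable image yet
image = reconstruct(recording, psf)  # sharp peaks reappear at the object points
print("brightest reconstructed points:", sorted(np.argsort(image)[-2:].tolist()))
```

The recorded pattern alone carries no recognisable picture; the object only appears after the second, computational step.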

The complicated recording process, with its many optical components and high cost, is justified by the additional information holography captures about the object in three or more dimensions. However, this same complexity and cost preclude its use in many areas, such as smartphone and digital video camera technologies.

Over the past two years, my colleagues and I at the Center of Photonics and Computational Imaging (CPCI) of the University of Tartu, Estonia, have been developing imaging technologies that offer the advantages of holography while keeping the minimal requirements of direct imaging systems. In 2022, we demonstrated 3D imaging using a commonly available optical component: a refractive lens.

In any imaging system, image sharpness and depth of field are two of the most critical parameters. However, both are governed by a common factor, the f-number, which is displayed on many DSLR cameras. A small f-number gives a sharp image at one particular depth but blurs objects at other planes, that is, a small depth of field. A large f-number keeps objects sharp over many depths, that is, a large depth of field, though at the cost of lower resolution at the best-focused plane.
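A minimal sketch of this trade-off, assuming the standard thin-lens depth-of-field formulas and the diffraction-limited Airy disk diameter of about 2.44 λN (λ is the wavelength, N the f-number). The 50 mm focal length, 3 m subject distance, 0.03 mm circle of confusion and 550 nm wavelength are illustrative assumptions, not values from the study:

```python
import math

def airy_diameter_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Diameter of the diffraction-limited Airy disk, about 2.44 * wavelength * N."""
    return 2.44 * wavelength_um * f_number

def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float,
                      coc_mm: float = 0.03) -> tuple[float, float, float]:
    """Thin-lens near limit, far limit and total depth of field (all in mm)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far, far - near

for n in (1.8, 4.0, 8.0, 16.0):
    _, _, total = depth_of_field_mm(50.0, n, 3000.0)
    print(f"f/{n:<4} depth of field = {total:6.0f} mm, Airy disk = {airy_diameter_um(n):5.1f} um")
```

Raising N lengthens the in-focus range but widens the diffraction-limited spot, which is the coupling between depth of field and sharpness described above.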

While the depth of field can be set by choosing an appropriate f-number before taking a picture, it cannot be changed after the picture has been captured. The only way to obtain a different depth of field is to repeat the recording.

For a wildlife photographer, recreating the same scene with a wild animal may come down to luck. In cinematography, given more "takes" and time, it is possible to replicate a movie scene with the desired depth of field. But because events unfold in time and rarely repeat exactly, in many cases this is impossible without a time machine.

Computational methods such as deblurring can, to some extent, digitally refocus a slightly blurred plane. But in the process, planes that were already sharp become degraded. No technology available today can tune the depth of field of a conventional camera after recording.
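A minimal 1D sketch of why deblurring cannot simply extend the depth of field, assuming a Gaussian defocus blur and Wiener deconvolution (one common deblurring method, chosen here for illustration; the article does not name a specific one). A filter tuned to the defocused plane B restores it, but the same filter applied to the already sharp plane A amplifies its high spatial frequencies and degrades it. All names and parameter values are hypothetical:

```python
import numpy as np

def gaussian_psf(n: int, sigma: float) -> np.ndarray:
    """Normalised 1D Gaussian blur kernel, centred at index 0 for circular FFT convolution."""
    x = np.arange(n) - n // 2
    psf = np.exp(-0.5 * (x / sigma) ** 2)
    return np.fft.ifftshift(psf / psf.sum())

def convolve(signal: np.ndarray, psf: np.ndarray) -> np.ndarray:
    return np.real(np.fft.ifft(np.fft.fft(signal) * np.fft.fft(psf)))

def wiener_deblur(recorded: np.ndarray, psf: np.ndarray, nsr: float = 1e-3) -> np.ndarray:
    """Wiener deconvolution: multiply the spectrum by conj(H) / (|H|^2 + nsr)."""
    h = np.fft.fft(psf)
    g = np.conj(h) / (np.abs(h) ** 2 + nsr)
    return np.real(np.fft.ifft(np.fft.fft(recorded) * g))

def rms(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.sqrt(np.mean((a - b) ** 2)))

n = 256
scene = np.zeros(n)
scene[100:140] = 1.0               # a simple bar-shaped object

psf = gaussian_psf(n, sigma=3.0)   # defocus blur of the out-of-focus plane
plane_a = scene.copy()             # plane A: already in focus
plane_b = convolve(scene, psf)     # plane B: defocused

# The same deblurring filter acts on the whole picture, i.e. on both planes.
restored_a = wiener_deblur(plane_a, psf)
restored_b = wiener_deblur(plane_b, psf)

err_a_before, err_a_after = rms(plane_a, scene), rms(restored_a, scene)
err_b_before, err_b_after = rms(plane_b, scene), rms(restored_b, scene)
print(f"plane B (blurred): RMS error {err_b_before:.3f} -> {err_b_after:.3f}")
print(f"plane A (sharp):   RMS error {err_a_before:.3f} -> {err_a_after:.3f}")
```

The parameter `nsr` caps how strongly each frequency is amplified: smaller values restore plane B more aggressively but degrade plane A even more.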

Certain coded aperture imaging technologies, developed by Prof. Rosen and Dr. Rai of Ben Gurion University, Israel, and one I developed in 2022, can be adapted to tune the depth of field after recording. However, they cannot be applied to DSLRs and smartphone cameras, which still rely on conventional optical elements such as refractive lenses.

At CPCI, my Ph.D. student Shivasubramanian Gopinath, research manager Aravind Simon, and I stumbled upon a solution to this problem by accident, through the holographic hybridization method. Hybridization is the process of combining two or more elements to create a new element that mixes the properties of its parent elements.

Hybridization means different things in different fields, but it generally means "mixing." In biology, for instance, breeding a male lion with a female tiger produces a hybrid offspring, the liger. Mythology offers another example: the griffin, a hybrid creature with the body of a lion and the head and wings of an eagle.

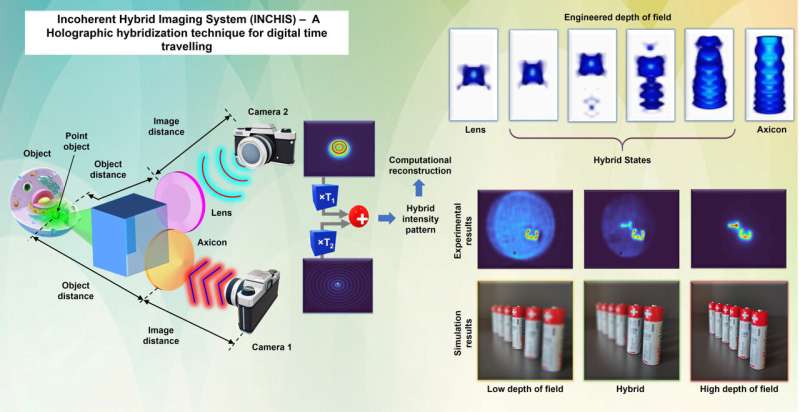

What is hybridization in holography? Instead of recording one picture, we propose recording two pictures collinearly and simultaneously: one with a refractive lens and the other with a refractive axicon, that is, a conical prism. A refractive lens has a small depth of field, while an axicon has a large depth of field. The two recorded digital pictures are combined with different strengths and reconstructed on a computer using a special algorithm developed at CPCI.

By tuning the relative strengths of the two pictures before combining them, it is possible to tune the depth of field anywhere within the limits set by the lens and the axicon. The figure above shows the schematic of the setup, the variation of depth of field, and the expected change in the picture. Throughout this tuning, the computational algorithm preserves the highest image sharpness. Our research on this topic has just been published in Optics and Lasers in Engineering.
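A toy numerical sketch of the tuning idea, under loudly stated assumptions: the lens's on-axis (axial) intensity is modelled as a Lorentzian, the axicon's as an idealised flat-top focal line, and the two responses are simply summed with a weight w. The function names, the 1 mm and 40 mm scales, and the use of the axial full width at half maximum (FWHM) as the depth-of-field measure are hypothetical choices for illustration. This is not the reconstruction algorithm developed at CPCI, which the article does not describe, and it models only the axial extent, not image sharpness:

```python
import numpy as np

def lens_axial(z: np.ndarray, z_r: float = 1.0) -> np.ndarray:
    """Assumed lens response: Lorentzian on-axis intensity, half maximum at |z| = z_r."""
    return 1.0 / (1.0 + (z / z_r) ** 2)

def axicon_axial(z: np.ndarray, length: float = 40.0) -> np.ndarray:
    """Assumed axicon response: idealised flat-top focal line of the given length."""
    return np.where(np.abs(z) <= length / 2, 1.0, 0.0)

def hybrid_axial(z: np.ndarray, weight: float) -> np.ndarray:
    """Weighted sum of the two responses: weight 1 is lens only, weight 0 is axicon only."""
    return weight * lens_axial(z) + (1.0 - weight) * axicon_axial(z)

def axial_fwhm(z: np.ndarray, profile: np.ndarray) -> float:
    """Width of the contiguous region around z = 0 where the profile stays above half maximum."""
    above = profile >= 0.5 * profile.max()
    left = right = int(np.argmin(np.abs(z)))
    while left > 0 and above[left - 1]:
        left -= 1
    while right < len(z) - 1 and above[right + 1]:
        right += 1
    return float(z[right] - z[left])

z = np.linspace(-50.0, 50.0, 20001)  # axial positions in mm (illustrative)
for w in (0.0, 0.5, 0.6, 0.75, 1.0):
    print(f"weight {w:.2f}: depth of field (FWHM) = {axial_fwhm(z, hybrid_axial(z, w)):5.2f} mm")
```

In this toy model the depth of field stays at the axicon's limit until the lens weight exceeds about one half, then shrinks smoothly toward the lens's limit; a real system, and the CPCI algorithm, will behave differently in detail.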

Because the technology is simple and low cost, it can easily be integrated into existing smartphone cameras, microscopes, and holography and cinematography systems. We believe the hybridization approach will let us relive captured moments, like a digital time-traveling machine.

This story is part of Science X Dialog, where researchers can report findings from their published research articles.

More information: Shivasubramanian Gopinath et al, Sculpting axial characteristics of incoherent imagers by hybridization methods, Optics and Lasers in Engineering (2023). DOI: 10.1016/j.optlaseng.2023.107837

Bio:

Prof. Vijayakumar Anand received his Ph.D. degree from the Indian Institute of Technology Madras, India, in 2015. He was a PBC outstanding postdoctoral fellow at Ben Gurion University, Israel, from 2015 to 2018. From 2019 to 2021, he was a Deputy Vice-Chancellor Research and Enterprise Fellow (Nanophotonics Fellow) at Swinburne University of Technology, Australia. In 2022, he took up his position as ERA Chair and Associate Professor at the University of Tartu, Estonia. He is also an adjunct Associate Professor at Swinburne University of Technology. He has published more than 150 journal articles, conference proceedings, book chapters, and books, and is the author of the bestselling textbook "Design and Fabrication of Diffractive Optical Elements with MATLAB," published by SPIE Press. He has been elected to the editorial boards of Applied Physics B: Lasers and Optics, published by Springer, and Chinese Optics Letters. His current research interests include computational optics, imaging, digital holography, diffractive optics, and microfabrication.

(c) 2023 Science X