Is the p-value pointless?

For the first time in its 177-year history, the American Statistical Association (ASA) has voiced its opinion and made specific recommendations for a statistical practice. The subject of its ire? The (arguably) most common statistical output, the p-value. The p-value has long been the primary metric for demonstrating that study results are "statistically significant," usually by achieving the semi-arbitrary value of p < 0.05. However, the importance of the p-value has been greatly overstated and the scientific community has become over-reliant on this one – flawed – measure.

In the associated article, published in The American Statistician, Ronald Wasserstein and Nicole Lazar explain how the dependence on the p-value threatens the reproducibility and replicability of research. Importantly, the p-value does not prove that scientific conclusions are true and does not signify the importance of a result. As Wasserstein says in the ASA press release, "The p-value was never intended to be a substitute for scientific reasoning."

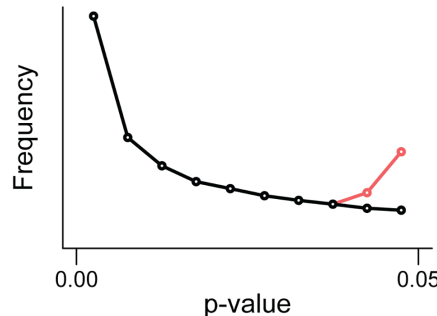

As documented in a 2015 PLOS Biology Perspective by Megan Head, Michael Jennions and colleagues, the p-value is subject to a common type of manipulation known as "p-hacking," where researchers selectively report datasets or analyses that achieve a "significant" result. The authors of this Perspective used a text-mining protocol to reveal this to be a widespread issue across multiple scientific disciplines. The authors also provide helpful recommendations for researchers and journals.
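
To see why selective reporting matters, consider a minimal simulation sketch. It is not the text-mining protocol used in the Perspective; it is a hypothetical illustration that assumes normally distributed data with a known standard deviation of 1, no true effect at all, and a researcher who measures several outcomes and reports only the smallest p-value. The function names, sample sizes and number of outcomes are all assumptions chosen for the example.

```python
import random
from statistics import NormalDist, mean


def two_sided_p(sample_a, sample_b, sigma=1.0):
    """Two-sided z-test p-value for a difference in means (sigma assumed known)."""
    se = sigma * (1 / len(sample_a) + 1 / len(sample_b)) ** 0.5
    z = (mean(sample_a) - mean(sample_b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_outcomes, n_studies=1000, n_per_group=20,
                        alpha=0.05, seed=0):
    """Share of null studies that can report at least one p < alpha.

    Each simulated study compares two groups on `n_outcomes` independent
    outcomes. Both groups are drawn from the same distribution, so every
    "significant" result is a false positive.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        p_values = []
        for _ in range(n_outcomes):
            group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
            group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
            p_values.append(two_sided_p(group_a, group_b))
        # The "p-hacked" study reports only its best-looking outcome.
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


honest = false_positive_rate(n_outcomes=1)   # one pre-specified analysis
hacked = false_positive_rate(n_outcomes=10)  # best of ten analyses
print(f"one analysis:  {honest:.2f}")
print(f"best of ten:   {hacked:.2f}")
```

Under these assumptions, a single pre-specified analysis yields a false positive in about 5% of studies, while picking the best of ten independent analyses does so in roughly 40% of them (1 - 0.95^10 ≈ 0.40), even though no real effect exists anywhere in the data.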

The problem with the p-value cuts both ways. Over-interpretation of the p-value can lead to both false positives and false negatives. Dependence on a specific p-value threshold can also introduce bias, as researchers may discontinue or shelve work that doesn't meet this arbitrary standard.

The hope is that the ASA statement will increase awareness of the problems of inappropriate p-value use that persist in scientific practice. Its guidelines can help researchers determine best practices for using the p-value and identify when other statistical tests are more appropriate.

Provided by Public Library of Science

This story is republished courtesy of PLOS Blogs: blogs.plos.org.