March 25, 2008 feature

Avatar Mimics You in Real Time

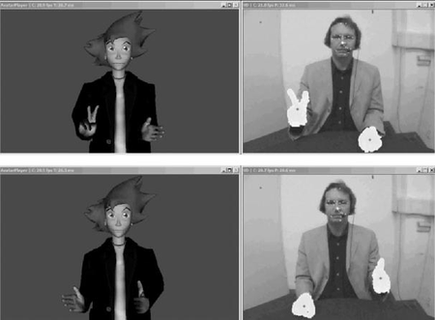

It’s a little bit like looking in the mirror at your cartoon double, except that the “reflection” is an avatar on your computer screen. Wave your hand, nod your head, speak a sentence, and your avatar does the same.

The technology is already on display at the headquarters of Deutsche Telekom in Bonn, Germany, and at the Deutsche Telekom Laboratories in Berlin. Visitors can experiment with making gestures and watching comical characters mimic them in real time.

The public feedback is very positive, according to researchers Oliver Schreer, Peter Eisert, and Ralf Tanger of the Fraunhofer Heinrich-Hertz-Institut in Berlin, and Roman Englert of the Deutsche Telekom Laboratories and Ben Gurion University in Beer-Sheva, Israel. The team will publish the results of its study of vision- and speech-driven avatars in an upcoming issue of IEEE Transactions on Multimedia.

“The presented approach allows intuitive, touchless user interaction,” Schreer told PhysOrg.com. “Due to the recognition capabilities, any novel interface can be designed for interactive human-computer interaction.”

The researchers’ prototype runs on a normal PC, and the only extra hardware it needs is a low-cost webcam and a pair of standard headphones. All of the audio-visual analysis is performed in real time, so the virtual character is animated immediately. The program does not require any training or individual input of gestures. However, because the system relies on skin color recognition to follow the movements of the hands and head, users must first wave their hands around so that the system can determine their individual skin color. Users should also avoid wearing skin-colored clothing, which the system could mistake for skin.
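The calibration step can be pictured with a simple chroma-threshold skin model. This is a sketch under stated assumptions, not the researchers’ method, since the article does not describe their color model: pixels sampled from the waving hands are converted to the YCbCr color space (ITU-R BT.601 coefficients as used in JPEG), the mean and spread of the two chroma channels are recorded, and any later pixel whose chroma lies within `k` standard deviations on both channels is labeled as skin. The class name `SkinModel`, the threshold `k`, and the sample pixel values are all hypothetical.

```python
from statistics import mean, pstdev


def rgb_to_cbcr(r, g, b):
    """Convert an 8-bit RGB pixel to (Cb, Cr) chroma using BT.601 (JPEG) coefficients."""
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


class SkinModel:
    """Hypothetical skin-color model calibrated from pixels sampled on the user's hands."""

    def __init__(self, samples, k=2.5):
        if not samples:
            raise ValueError("calibration needs at least one sample pixel")
        chroma = [rgb_to_cbcr(*p) for p in samples]
        cbs = [c[0] for c in chroma]
        crs = [c[1] for c in chroma]
        self.cb_mean, self.cr_mean = mean(cbs), mean(crs)
        # Floor the spread at 1.0 so a few near-identical samples
        # do not produce a uselessly narrow acceptance window.
        self.cb_std = max(pstdev(cbs), 1.0)
        self.cr_std = max(pstdev(crs), 1.0)
        self.k = k

    def is_skin(self, pixel):
        """True if the pixel's chroma is within k standard deviations on both Cb and Cr."""
        cb, cr = rgb_to_cbcr(*pixel)
        return (abs(cb - self.cb_mean) <= self.k * self.cb_std
                and abs(cr - self.cr_mean) <= self.k * self.cr_std)


# Calibration: pixels (hypothetical values) sampled while the user waves.
hand_samples = [(224, 172, 138), (210, 160, 125), (230, 180, 150)]
model = SkinModel(hand_samples)
```

Because this kind of model looks only at color, a beige shirt pixel such as `(225, 175, 140)` passes the same test as a hand, which illustrates why skin-colored clothing can confuse a color-based tracker.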

The system can recognize a set of 66 parameters that define facial expressions, and it also includes a set of high-level expressions (such as joy, sadness, surprise, and disgust) that users can activate manually by pressing buttons. In addition, voice analysis drives “visemes,” the visual counterparts of phonemes, which move the avatar’s lips to match the sound being spoken; a set of 15 visemes is enough to represent all phonemes. For the body, the system uses a set of 186 body motion parameters that define joint rotations in the arms and upper body.
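Because several phonemes share one mouth shape, the phoneme-to-viseme step amounts to a many-to-one lookup table. The grouping below is illustrative only: the article does not give the table the researchers use, and the phoneme symbols, viseme IDs, and the function name `visemes_for` are hypothetical. A complete table would assign every phoneme of the language to one of the 15 groups; this sketch lists only a subset and falls back to the neutral shape for anything it does not know.

```python
# Hypothetical grouping of phonemes into 15 viseme IDs (0 = neutral / silence).
VISEME_GROUPS = {
    0: ["sil"],               # mouth at rest
    1: ["p", "b", "m"],       # lips pressed together
    2: ["f", "v"],            # lower lip against upper teeth
    3: ["th", "dh"],          # tongue between teeth
    4: ["t", "d"],
    5: ["k", "g"],
    6: ["ch", "jh", "sh"],
    7: ["s", "z"],
    8: ["n", "l"],
    9: ["r"],
    10: ["aa"],
    11: ["eh"],
    12: ["ih"],
    13: ["ao"],
    14: ["uh"],
}

# Invert the groups into a direct phoneme -> viseme lookup.
PHONEME_TO_VISEME = {ph: vid for vid, phs in VISEME_GROUPS.items() for ph in phs}


def visemes_for(phonemes):
    """Map recognized phonemes to viseme IDs.

    Unknown symbols fall back to the neutral viseme 0, and consecutive
    repeats are collapsed so the same mouth shape is not triggered twice.
    """
    out = []
    for ph in phonemes:
        vid = PHONEME_TO_VISEME.get(ph, 0)
        if not out or out[-1] != vid:
            out.append(vid)
    return out
```

Collapsing repeats means that "p", "b", and "m", which all close the lips, produce a single mouth shape rather than three.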

Head rotation is detected as well, so the avatar can reproduce nodding, shaking, and rolling (side-to-side tilting) of the head.
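The three head motions correspond to rotations about three axes: pitch (nodding), yaw (shaking), and roll (tilting toward a shoulder). As a hypothetical illustration, not a description of the researchers’ tracker, the sketch below takes a short window of estimated head angles in degrees and labels the motion by the axis with the largest swing. The function name `classify_head_motion` and the 10-degree threshold are assumptions.

```python
def classify_head_motion(angles, threshold_deg=10.0):
    """Label a window of (pitch, yaw, roll) head angles in degrees.

    Returns "nod", "shake", or "roll" for the axis with the largest
    peak-to-peak swing, or None if no axis moves more than threshold_deg.
    """
    if not angles:
        return None
    labels = ("nod", "shake", "roll")  # pitch, yaw, roll
    # Peak-to-peak range of each axis over the window.
    ranges = []
    for axis in range(3):
        values = [a[axis] for a in angles]
        ranges.append(max(values) - min(values))
    best = max(range(3), key=lambda i: ranges[i])
    if ranges[best] < threshold_deg:
        return None
    return labels[best]
```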

By detecting the positions of the fingers, the system can recognize a variety of basic gestures, including many letters of the American Sign Language alphabet. The avatar’s hands do not always mimic the user’s hands exactly, because the main aim is to keep the avatar’s movements as smooth and natural as possible.
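The trade-off between exact reproduction and smooth motion can be illustrated with a generic exponential moving average applied to a joint angle over successive video frames. This is a stand-in: the article does not say which filter, if any, the researchers use, and the function name `smooth_angles` and smoothing factor `alpha` are hypothetical. Smaller values of `alpha` suppress more frame-to-frame jitter but make the avatar lag further behind the user.

```python
def smooth_angles(raw, alpha=0.3):
    """Exponential moving average over a sequence of joint angles (degrees).

    alpha must be in (0, 1]; alpha = 1 returns the input unchanged,
    smaller values give smoother but laggier motion.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    current = None
    for x in raw:
        # Blend the new measurement with the previous smoothed value.
        current = x if current is None else alpha * x + (1 - alpha) * current
        smoothed.append(current)
    return smoothed
```

For example, a jittery elbow angle of `[0, 20, 0, 20]` degrees becomes `[0, 10.0, 5.0, 12.5]` with `alpha=0.5`: smoother, but no longer an exact copy of the input.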

In the future, the researchers plan to apply the system in virtual chat rooms and in online call center applications such as technical support. In both settings, users are represented by avatars that are animated by their own movements and speech, which preserves the users’ privacy. The researchers also hope to integrate the avatar system into mobile devices, where it could serve as a user-friendly interface alongside touch screens, styluses, or speech recognition systems.

“Some aspects like gestures based on hand recognition are already market mature,” Schreer said. “Finger analysis and interpretation are more complicated and may need another one or two years in order to achieve robust algorithms that operate under real-life conditions, i.e. the real environment. Initial applications are scrolling of menus in cellulars (e.g. SMS browsing) and in the medical area for the control of interfaces in operating rooms.”

More information: Schreer, Oliver; Englert, Roman; Eisert, Peter; and Tanger, Ralf. “Real-Time Vision and Speech Driven Avatars for Multimedia Applications.” IEEE Transactions on Multimedia. To be published in a future issue.

Copyright 2008 PhysOrg.com.

All rights reserved. This material may not be published, broadcast, rewritten or redistributed in whole or part without the express written permission of PhysOrg.com.