August 9, 2014 weblog

Optalysys will launch prototype optical processor

UK-based startup Optalysys is moving ahead with plans to deliver exascale levels of processing power on a standard-sized desktop computer within the next few years, HPCwire reported earlier this week. The company announced on August 1 that it is "only months away from launching a prototype optical processor with the potential to deliver exascale levels of processing power on a standard-sized desktop computer." It will demo the prototype, which meets NASA Technology Readiness Level 4, in January next year. Although the January demo represents only a proof-of-concept stage, the processor is expected to run at over 340 gigaFLOPS, enough to analyze large data sets and produce complex model simulations in a laboratory environment. Engadget commented that those numbers were not bad for a proof of concept. HPCwire signaled the potential significance of the work in its headline, "Is This the Exascale Breakthrough We've Been Waiting For?" According to Optalysys, its technology uses light to perform compute-intensive mathematical functions at speeds beyond what electronics can achieve, at a fraction of the cost and power consumption.

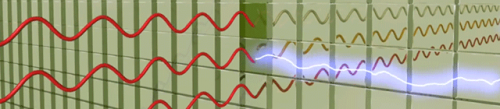

Optalysys CEO Dr. Nick New said, "Using low power lasers and high resolution liquid crystal micro-displays, calculations are performed in parallel at the speed of light." Engadget contributing editor Steve Dent explained that "low-intensity lasers are beamed through layers of liquid crystal grids, which change the light intensity based on user-inputted data. The resulting interference patterns can be used to solve mathematical equations and perform other tasks. By splitting the beam through multiple grids, the system can compute in parallel much more efficiently than standard multi-processing supercomputers."

The company says its technology can offer a significant improvement over traditional computing. "Electronic processing is fundamentally serial, processing one calculation after the other. This results in big data management problems when the resolutions are increased, with improvements in processor speed only resulting in incremental improvements in performance. The Optalysys technology, however, is truly parallel – once the data is loaded into the liquid crystal grids, the processing is done at the speed of light, regardless of the resolution. The Optalysys technology we are developing is highly innovative and we have filed several patents in the process."

Who is the company's target audience? "We hope the technology will be used by everyone, but to begin with we envisage the first users to be the users of high power Computational Fluid Dynamics (CFD) simulations and Big Data sets."

Optalysys chairman James Duez said that in applications such as aerofoil design, weather forecasting, MRI data analysis and quantum computing, "it is becoming increasingly common for traditional computing methods to fall short of delivering the processing power needed." CFD, Duez noted, can be used to predict the weather, design cars and model airflow, "but the speed of processing needed to create models is constrained by current electrical capabilities."

Dr. New said, "We are currently developing two products, a 'Big Data' analysis system and an Optical Solver Supercomputer, both of which are expected to be launched in 2017."

Optalysys was founded in 2013 by New and Duez. The team includes specialists in software development, free-space optics, optical engineering and production engineering.

More information: www.optalysys.com/faq/

© 2014 Tech Xplore