'Deplatforming' online extremists reduces their followers, but there's a price

Conspiracy theorist and U.S. far-right media personality Alex Jones was recently ordered to pay US$45 million (£37 million) in damages to the family of a child killed in the 2012 Sandy Hook school shooting.

Jones had claimed that being banned, or "deplatformed," from major social media sites for his extreme views had hurt him financially, likening the situation to "jail." But during the trial, forensic economist Bernard Pettingill estimated that Jones's conspiracy website InfoWars made more money after being banned from Facebook and Twitter in 2018.

So does online deplatforming actually work? Influence cannot be measured in a scientifically rigorous way, so it is difficult to say what happens to a person's or group's overall influence when they are deplatformed. Still, research suggests deplatforming can reduce the activity of nefarious actors on the sites that ban them. However, it comes at a price. As deplatformed people and groups migrate elsewhere, they may lose followers but also become more hateful and toxic.

Typically, deplatforming involves actions taken by the social media sites themselves. But it can also be done by third parties, such as financial institutions like PayPal that provide payment services on these platforms.

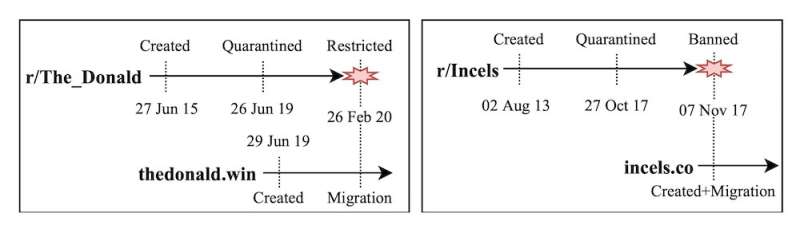

Closing a group is also a form of deplatforming, even if its members remain free to use the site. For example, the subreddit The_Donald (a forum on the website Reddit) was closed for hosting hateful and threatening content, such as a post encouraging members to attend a white supremacist rally.

Does deplatforming work?

Research shows deplatforming does have positive effects on the platform the person or group was removed from. When Reddit banned certain forums that targeted overweight people and African Americans, many users who had been active on these hateful subreddits stopped posting on Reddit altogether. Those who stayed active posted less extreme content.

But the deplatformed group or person can migrate. Alex Jones continues to work outside mainstream social networks, operating mainly through his InfoWars website and podcasts. Followers may see a ban from big tech as punishment for challenging the status quo without censorship, which can reinforce their bonds and sense of belonging.

Gab was created in 2016 as an alternative social network, welcoming users who had been banned from other platforms. Since the U.S. Capitol insurrection, Gab has been tweeting about these bans as a badge of honor and has said it has seen a surge in users and job applications.

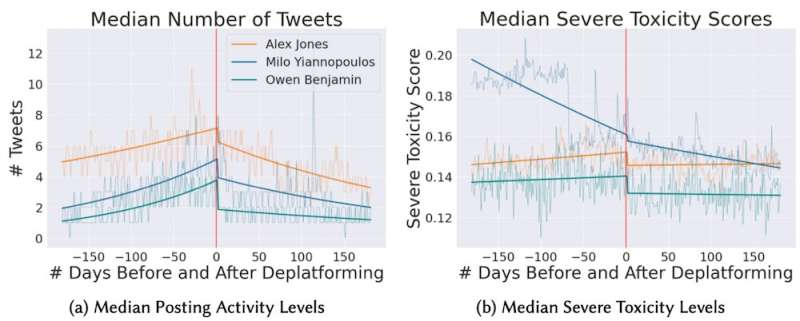

My team's research looked at the subreddits The_Donald and Incels (a male online community hostile towards women), which moved to standalone websites after being banned from Reddit. We found that as dangerous communities migrated onto different platforms, their footprints became smaller, but users got significantly more extreme. Similarly, users who got banned from Twitter or Reddit showed an increased level of activity and toxicity upon relocating to Gab.

Other studies of the emergence of fringe social networks such as Gab, Parler and Gettr have found broadly similar patterns. These platforms market themselves as bastions of free speech, welcoming users banned or suspended from other social networks. Research shows not only that extremism increases as a result of lax moderation, but also that early site users have a disproportionate influence on the platform.

The unintended consequences of deplatforming are not limited to political communities but extend to health disinformation and conspiracy theory groups. For instance, when Facebook banned groups discussing COVID-19 vaccines, users went on Twitter and posted even more anti-vaccine content.

Alternative solutions

What else can be done to avoid the concentration of online hate that deplatforming can encourage? Social networks have been experimenting with soft moderation interventions that do not remove content or ban users. Instead, these interventions limit the content's visibility (shadow banning), restrict other users' ability to engage with it (by replying or sharing), or add warning labels.

These approaches are showing encouraging results. Some warning labels have prompted site users to debunk false claims. Soft moderation sometimes reduces user interactions and extremism in comments.

However, popularity bias (acting on or ignoring content based on the buzz around it) may shape which subjects platforms like Twitter decide to intervene on. Meanwhile, warning labels seem to be less effective on false posts that are right-leaning.

It is also still unclear whether soft moderation creates new avenues for harassment, for example, by inviting mockery of users whose posts receive warning labels or by frustrating users who cannot re-share content.

Looking forward

A crucial aspect of deplatforming is timing. The sooner platforms act to stop groups using mainstream platforms to grow extremist movements, the better. Rapid action could in theory put the brakes on the groups' efforts to muster and radicalize large user bases.

But to work, this would also need a coordinated effort from mainstream platforms as well as other media. Radio talk shows and cable news play a crucial role in promoting fringe narratives in the U.S.

We need an open dialogue about the deplatforming tradeoff. As a society, we need to discuss whether we would rather have fewer people exposed to extremist groups, even if those who do engage become ever more isolated and radicalized.

At the moment, deplatforming is almost exclusively managed by big technology companies. Tech companies can't solve the problem alone, but neither can researchers or politicians. Platforms must work with regulators, civil rights organizations and researchers to deal with extreme online content. The fabric of society may depend upon it.

Provided by The Conversation

This article is republished from The Conversation under a Creative Commons license. Read the original article.