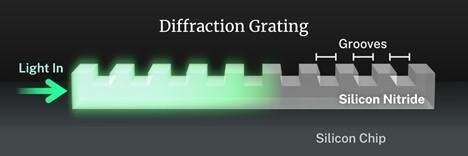

The NIST team directed light into an ultrathin layer of silicon nitride etched with grooves to create a diffraction grating. If the separation between the grooves and the wavelength of light are carefully chosen, the intensity of light declines much more slowly, linearly rather than exponentially. Credit: S. Kelley/NIST

Shine a flashlight into murky pond water and the beam won't penetrate very far. Absorption and scattering rapidly diminish the intensity of the light beam, which loses a fixed percentage of energy per unit distance traveled. That decline, known as exponential decay, holds true for light traveling through any fluid or solid that readily absorbs and scatters electromagnetic energy.
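For readers who want the math behind "a fixed percentage per unit distance," the standard textbook form of exponential decay is sketched below. The symbols are assumptions introduced here for illustration and do not appear in the NIST announcement: $I(z)$ is the beam intensity after a distance $z$, $I_0$ is the starting intensity, and $\alpha$ is a loss coefficient that lumps absorption and scattering together.

$$
\frac{dI}{dz} = -\alpha\, I(z)
\quad\Longrightarrow\quad
I(z) = I_0\, e^{-\alpha z}
$$

Because the loss at each point is proportional to the light still remaining, the beam gives up most of its energy early and very little reaches large $z$.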

But that's not what researchers at the National Institute of Standards and Technology (NIST) found when they studied a miniature light-scattering system—an ultrathin layer of silicon nitride fabricated atop a chip and etched with a series of closely spaced, periodic grooves. The grooves create a grating—a device that scatters different colors of light at different angles—while the silicon nitride acts to confine and guide incoming light as far as possible along the 0.2-centimeter length of the grating.

The grating scatters light—most of it upwards, perpendicular to the device—much like pond water does. And in most of their experiments, the NIST scientists observed just that. The intensity of the light dimmed exponentially and was able to illuminate only the first few of the grating's grooves.

However, when the NIST team adjusted the width of the grooves so that they were nearly equal to the spacing between them, the scientists found something surprising. If they carefully chose a specific wavelength of infrared light, the intensity of that light decreased much more slowly as it traveled along the grating. The intensity declined linearly with the distance traveled rather than exponentially.

The scientists were just as intrigued by a property of the infrared light scattered upwards from the grating. Whenever the intensity of light along the grating shifted from exponential to linear decline, the light scattered upwards formed a wide beam that had the same intensity throughout. A broad light beam of uniform intensity is a highly desirable tool for many experiments involving clouds of atoms.
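One way to see why a linear decline and a uniform upward beam go together: the light leaving the guided beam at each point is the rate at which the guided intensity drops. The short Python sketch below compares the two cases. It is an illustration only, not the researchers' model. It assumes that all of the lost light is scattered upward (the article says most of it is), normalizes the input intensity to 1, and uses a hypothetical decay length of 0.02 cm for the exponential case. Only the 0.2 cm grating length comes from the article.

```python
import math

GRATING_LENGTH_CM = 0.2              # grating length quoted in the article
HYPOTHETICAL_DECAY_LENGTH_CM = 0.02  # assumed value, for illustration only
NUM_SAMPLES = 5                      # assumed number of sample points


def exponential_intensity(z, decay_length=HYPOTHETICAL_DECAY_LENGTH_CM):
    """Guided intensity under ordinary exponential decay (input normalized to 1)."""
    return math.exp(-z / decay_length)


def linear_intensity(z, length=GRATING_LENGTH_CM):
    """Guided intensity that falls linearly, reaching zero at the grating's end."""
    return max(0.0, 1.0 - z / length)


def loss_per_length(intensity, z, dz=1e-6):
    """Light leaving the guided beam per unit length, -dI/dz (central difference)."""
    return -(intensity(z + dz) - intensity(z - dz)) / (2 * dz)


def scattered_profile(intensity):
    """Sample the scattered light at evenly spaced points inside the grating."""
    step = GRATING_LENGTH_CM / NUM_SAMPLES
    return [loss_per_length(intensity, (i + 0.5) * step) for i in range(NUM_SAMPLES)]


exp_profile = scattered_profile(exponential_intensity)
lin_profile = scattered_profile(linear_intensity)

print("exponential decay:", [round(v, 3) for v in exp_profile])
print("linear decay:     ", [round(v, 3) for v in lin_profile])
```

In the exponential case the scattered light is bright near the entrance and fades quickly, so only the first part of the grating is lit. In the linear case every sample point sheds the same amount of light, which corresponds to the wide, even beam the team observed.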

Electrical and computer engineer Sangsik Kim had never seen anything like it. When he first observed the strange behavior in simulations he performed at NIST in the spring of 2017, he and veteran NIST scientist Vladimir Aksyuk worried that he had made a mistake. But two weeks later, Kim saw the same effect in laboratory experiments using actual diffraction gratings.

Animation depicts the NIST experiment in altering how light is absorbed. Credit: S. Kelley/NIST

If the wavelength shifted even slightly or the spacing between the grooves changed by only a tiny amount, the system reverted to exponential decay.

It took the NIST team several years to develop a theory that could explain the strange phenomenon. The researchers found that it has its roots in the complex interplay between the structure of the grating, the light traveling forward, the light scattered backward by the grooves in the grating, and the light scattered upwards. At some critical juncture, known as the exceptional point, all of these factors conspire to dramatically alter the loss in light energy, changing it from exponential to linear decay.

The researchers were surprised to realize that the phenomenon they observed with infrared light is a universal property of any type of wave traveling through a lossy periodic structure, whether the waves are acoustic waves, infrared light or radio waves.

The finding may enable researchers to transmit beams of light from one chip-based device to another without losing as much energy, which could be a boon for optical communications. The broad, uniform beam sculpted by the exceptional point is also ideal for studying a cloud of atoms. The light induces the atoms to jump from one energy level to another; its width and uniform intensity enable the beam to interrogate the rapidly moving atoms for a longer period of time. Precisely measuring the frequency of light emitted as the atoms make such transitions is a key step in building highly accurate atomic clocks and creating precise navigation systems based on trapped atomic vapors.

More generally, said Aksyuk, the uniform beam of light makes it possible to integrate portable, chip-based photonic devices with large-scale optical experiments, reducing their size and complexity. Once the uniform beam of light probes an atomic vapor, for instance, the information can be sent back to the photonic chip and processed there.

Yet another potential application is environmental monitoring. Because the transformation from exponential to linear absorption is sudden and exquisitely sensitive to the wavelength of light selected, it could form the basis of a high-precision detector of trace amounts of pollutants. If a pollutant at the surface changes the wavelength of light in the grating, the exceptional point will abruptly vanish and the light intensity will swiftly transition from linear to exponential decay, Aksyuk said.

The researchers, including Aksyuk and Kim, who is now at Texas Tech University in Lubbock, reported their findings online in the April 21 issue of Nature Nanotechnology.

More information: Alexander Yulaev et al, Exceptional points in lossy media lead to deep polynomial wave penetration with spatially uniform power loss, Nature Nanotechnology (2022). DOI: 10.1038/s41565-022-01114-3. www.nature.com/articles/s41565-022-01114-3

Journal information: Nature Nanotechnology

Provided by National Institute of Standards and Technology