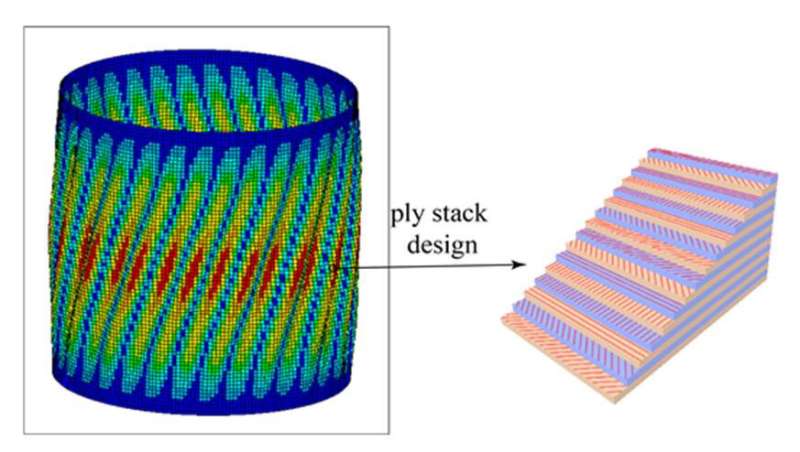

Simulation of buckling knockdown factor prediction for a composite shell (left), and the ply stack of the composite (right). Credit: Oak Ridge National Laboratory

Researchers from Oak Ridge National Laboratory (ORNL) developed a novel design and training strategy for reversible ResNets that reduces the number of input dimensions that machine learning models of complex physical systems must handle.

Developing reduced-order models of complex physical systems is computationally expensive. ORNL researchers have developed a neural network-based approach that reduces the number of inputs necessary to develop these models and, by extension, the complexity of HPC applications. The team's method:

- reduced a 20-dimensional model to a 1-dimensional one.

- reduced the error rate from 35.1% (with a standard neural network) to 1.6%.
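To make the 20-to-1 reduction concrete, here is a toy Python sketch under deliberately simple assumptions: the function `f`, the direction `w` and the helper names are hypothetical, and the reduction is linear and known in advance. The ORNL method instead learns a nonlinear transformation from limited data; the sketch only shows what it means for a 20-input function to be captured by a single coordinate, and that moving along its isosurfaces (here, directions orthogonal to `w`) leaves the output unchanged.

```python
import math
import random

DIM = 20  # input dimension, matching the 20-D example in the text

# Hypothetical fixed direction: f depends on x only through the product w . x
random.seed(0)
w = [random.gauss(0.0, 1.0) for _ in range(DIM)]


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def f(x):
    """Toy 20-dimensional function (an assumption, not the paper's test case)."""
    return math.sin(dot(w, x)) + 0.5 * dot(w, x) ** 2


def reduce_to_1d(x):
    """Map a 20-D input to the single coordinate that f actually depends on."""
    return dot(w, x)


def g(z):
    """1-D surrogate: same values as f, but a function of one variable."""
    return math.sin(z) + 0.5 * z ** 2


def orthogonal_step(x, step):
    """Move x along a random direction orthogonal to w (stays on f's isosurface)."""
    d = [random.gauss(0.0, 1.0) for _ in range(DIM)]
    # Remove the component of d along w (one Gram-Schmidt step)
    scale = dot(d, w) / dot(w, w)
    d = [di - scale * wi for di, wi in zip(d, w)]
    return [xi + step * di for xi, di in zip(x, d)]


x = [random.uniform(-1.0, 1.0) for _ in range(DIM)]
print(abs(f(x) - g(reduce_to_1d(x))) < 1e-12)    # 1-D surrogate matches f
print(abs(f(x) - f(orthogonal_step(x, 3.0))) < 1e-9)  # f is flat along the isosurface
```

In a real problem the direction (or, in the nonlinear case, the transformation) is unknown, which is why it has to be learned from data.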

Input reduction is achieved by employing residual neural networks, or ResNets, which use shortcut connections to bypass layers. The approach applies to a wide range of problems, including experimental data. For example, the team used it to accelerate the design of multi-layer composite shells (which are used in pressure vessels, reservoirs and tanks, and rocket and spacecraft parts) by determining optimum ply angles.
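A residual block passes its input through a shortcut and adds a learned correction, y = x + F(x). One common way to make such blocks reversible is additive coupling, as in the RevNet design of Gomez et al. (2017): split the input into two halves and update each half using only the other, so every step can be undone exactly. The Python sketch below assumes that design with random, untrained `tanh` layers; it is not ORNL's exact architecture or training strategy, and `ReversibleBlock`, `forward_all` and `inverse_all` are hypothetical names. It illustrates the property that matters for dimensionality reduction: the network maps a 20-D input to new coordinates and back without losing information.

```python
import math
import random


def matvec(M, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


class ReversibleBlock:
    """Additive-coupling residual block (RevNet-style); weights are random, not trained.

    forward:  y1 = x1 + F(x2);  y2 = x2 + G(y1)
    inverse:  x2 = y2 - G(y1);  x1 = y1 - F(x2)
    """

    def __init__(self, half_dim, rng):
        self.WF = random_matrix(half_dim, half_dim, rng)
        self.WG = random_matrix(half_dim, half_dim, rng)

    def F(self, v):
        return [math.tanh(a) for a in matvec(self.WF, v)]

    def G(self, v):
        return [math.tanh(a) for a in matvec(self.WG, v)]

    def forward(self, x1, x2):
        # Shortcut connections: each half is its input plus a correction
        y1 = [a + b for a, b in zip(x1, self.F(x2))]
        y2 = [a + b for a, b in zip(x2, self.G(y1))]
        return y1, y2

    def inverse(self, y1, y2):
        # Undo the two updates in reverse order; no matrix inversion needed
        x2 = [a - b for a, b in zip(y2, self.G(y1))]
        x1 = [a - b for a, b in zip(y1, self.F(x2))]
        return x1, x2


def forward_all(blocks, x1, x2):
    for blk in blocks:
        x1, x2 = blk.forward(x1, x2)
    return x1, x2


def inverse_all(blocks, y1, y2):
    for blk in reversed(blocks):
        y1, y2 = blk.inverse(y1, y2)
    return y1, y2


rng = random.Random(42)
blocks = [ReversibleBlock(10, rng) for _ in range(4)]  # 20-D input split 10 + 10
x = [rng.uniform(-1.0, 1.0) for _ in range(20)]
z1, z2 = forward_all(blocks, x[:10], x[10:])
r1, r2 = inverse_all(blocks, z1, z2)
err = max(abs(a - b) for a, b in zip(x, r1 + r2))
print(err < 1e-9)  # round trip recovers the input up to rounding
```

Because the inverse only re-evaluates `F` and `G` and subtracts, it needs no matrix inversion, and the round-trip error stays at floating-point rounding level. Training would then adjust the weights so that the target function varies mainly along a few of the new coordinates.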

The researchers are now working on scaling the algorithm up to ORNL's Summit supercomputer, the world's most powerful at the time of writing.

More information: Guannan Zhang, Jacob Hinkle. ResNet-based isosurface learning for dimensionality reduction in high-dimensional function approximation with limited data. arXiv:1902.10652v2 [math.FA]: arxiv.org/abs/1902.10652

Provided by Oak Ridge National Laboratory