August 1, 2017 report

Redefine statistical significance: Large group of scientists, statisticians argue for changing p-value threshold from .05 to .005

(Phys.org)—A large group of scientists and statisticians has uploaded a paper to the PsyArXiv preprint server arguing that the p-value threshold for statistical significance should be lowered from .05 to .005. The paper outlines their reasons for changing this commonly used cutoff for declaring a result significant.

Some science is cut and dried: If you drop a ball from a tower, for example, it will fall to the ground under normal circumstances. Unfortunately, a lot of other science is not nearly so definitive; the science of investigating, producing and using pharmaceuticals is one example. Not all drugs work as expected in all people under all conditions. Uncertainty is prevalent in many other areas, too, including astronomy, physics and economics. Because of this, the scientific community has settled on the p-value as a way to gauge how surprising an experimental result would be if there were no real effect. Researchers compare the p-value against a cutoff, and the most prominent cutoff is the one that defines what has come to be known as statistical significance, which has historically been set at .05. But now, this new paper suggests that the bar has been set too low and is therefore contributing to the problem of irreproducible findings in research.

One of the main problems with the p-value, some in the statistics field have suggested, is that non-statisticians do not really understand it and, because of that, use it incorrectly. It cannot be used, for example, to declare that a new drug has a 95 percent chance of working if it is used in the prescribed way. Nor, they note, does it measure how likely a hypothesis is to be true. Instead, it is defined as the probability of obtaining a result equal to or "more extreme" than the one actually observed, assuming the null hypothesis (that there is no real effect) is true.
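To make that definition concrete, here is a minimal Python sketch built on a hypothetical coin-flip experiment that is not from the paper: a coin lands heads 15 times in 20 flips, and the null hypothesis is that the coin is fair. The function name `binomial_p_value` is illustrative, and "more extreme" is taken to mean "at least as many heads" (a one-sided test).

```python
from math import comb


def binomial_p_value(heads: int, flips: int, p_null: float = 0.5) -> float:
    """One-sided p-value: the probability, if the null hypothesis is true,
    of seeing `heads` or more heads in `flips` flips."""
    # Add up the binomial probabilities of every outcome at least as extreme
    # as the observed one (heads, heads + 1, ..., flips).
    return sum(
        comb(flips, k) * p_null**k * (1 - p_null) ** (flips - k)
        for k in range(heads, flips + 1)
    )


p = binomial_p_value(15, 20)
print(f"p = {p:.4f}")                      # p = 0.0207
print("significant at .05: ", p < 0.05)    # True
print("significant at .005:", p < 0.005)   # False
```

A result like this one would count as statistically significant under the traditional .05 convention but not under the proposed .005 threshold. Note that p = 0.0207 does not mean the coin has a 2 percent chance of being fair; it is the chance of seeing 15 or more heads if the coin is fair.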

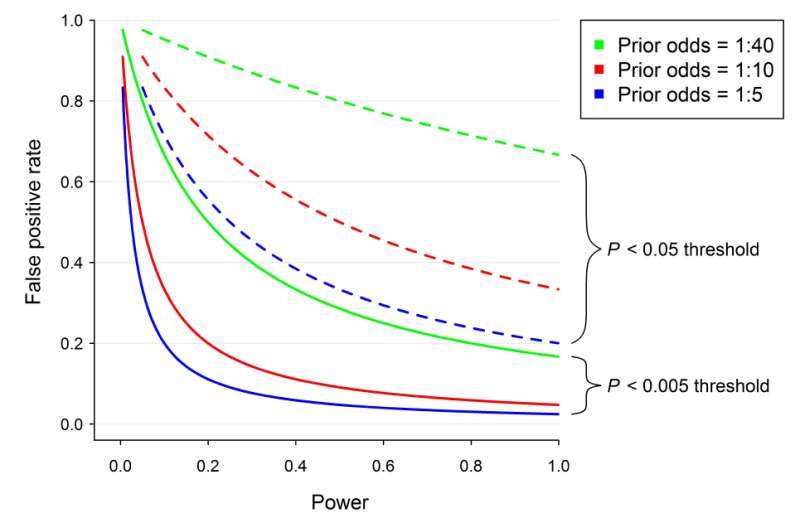

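But even when it is used correctly, the .05 threshold does not offer a strong enough measure of evidence, according to the authors. Thus, they suggest lowering the threshold for significance to .005. They claim doing so would reduce the false positive rate, the share of significant findings that are actually false, from about 33 percent to about 5 percent, a figure that depends on assumptions about statistical power and how often the hypotheses being tested are actually true.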
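The arithmetic behind a false positive rate can be sketched in a few lines of Python. This is an illustration, not the paper's own calculation: the function `false_positive_rate` is hypothetical, and the inputs (80 percent power, one real effect for every ten null effects) are assumed values chosen for the example, since this report does not state the exact assumptions behind the 33 and 5 percent figures.

```python
def false_positive_rate(alpha: float, power: float, prior_odds: float) -> float:
    """Expected share of "significant" results that are false positives.

    alpha      -- significance threshold (chance a null effect tests significant)
    power      -- chance a real effect tests significant
    prior_odds -- ratio of real effects to null effects among tested
                  hypotheses, e.g. 0.1 for one real effect per ten nulls
    """
    # For each null hypothesis tested, a fraction alpha comes out
    # significant by chance alone: these are the false positives.
    false_pos = alpha
    # For each null hypothesis tested there are prior_odds real effects,
    # and a fraction `power` of those come out significant.
    true_pos = power * prior_odds
    return false_pos / (false_pos + true_pos)


# Hypothetical inputs: 80 percent power, one real effect per ten nulls.
for alpha in (0.05, 0.005):
    rate = false_positive_rate(alpha, power=0.8, prior_odds=0.1)
    print(f"alpha = {alpha}: {rate:.1%} of significant results are false")
# alpha = 0.05: 38.5% of significant results are false
# alpha = 0.005: 5.9% of significant results are false
```

The result is sensitive to the inputs: with power set to 1.0, the same formula gives exactly one third at .05 and about 4.8 percent at .005. The sketch also holds power fixed across both thresholds, which in practice would require larger samples at the stricter cutoff.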

More information: Benjamin, Daniel J., et al. "Redefine Statistical Significance". PsyArXiv, 22 July 2017. Web. dx.doi.org/10.17605/OSF.IO/MKY9J

© 2017 Phys.org