Using real-world data, scientists answer key questions about an atmospheric release

In the event of an accidental radiological release from a nuclear power plant reactor or industrial facility, tracing the aerial plume of radiation to its source in a timely manner could be a crucial factor for emergency responders, risk assessors and investigators.

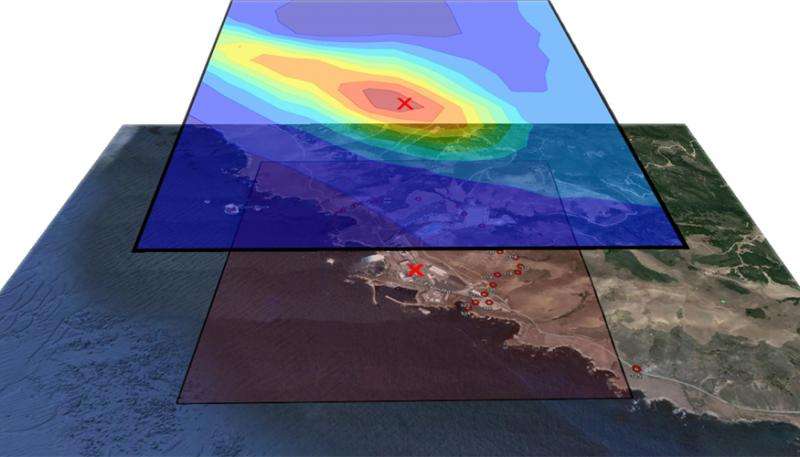

Using data collected during an atmospheric tracer experiment three decades ago at the Diablo Canyon Nuclear Power Plant on the Central Coast of California, tens of thousands of computer simulations and a statistical model, researchers at Lawrence Livermore National Laboratory (LLNL) have created methods that can estimate the source of an atmospheric release with greater accuracy than before.

The methods incorporate two computer models: the U.S. Weather Research and Forecasting (WRF) model, which produces simulations of wind fields, and the community FLEXPART dispersion model, which predicts concentration plumes based on the time, amount and location of a release. Using these models, atmospheric scientists Don Lucas and Matthew Simpson at LLNL's National Atmospheric Release Advisory Center (NARAC) have run simulations developed for national security and emergency response purposes.

In 1986, to assess the impact of a possible radioactive release at the Diablo Canyon plant, Pacific Gas & Electric (PG&E) released a non-reactive gas, sulfur hexafluoride, into the atmosphere and retrieved data from 150 instruments placed at the power plant and its surrounding area. This data was made available to LLNL scientists, presenting them with a rare and valuable opportunity to test their computational models by comparing them with real data.

"Occasionally, the models we use in NARAC undergo development and we need to test them," Lucas said. "The Diablo Canyon case is a benchmark we can use to keep our modeling tools sharp. For this project, we used our state-of-the-art weather models and had to turn back the clock to the 1980s, using old weather data to recreate, as best we could, the conditions when [the tracer experiments] were performed. We were digging up all the data, but even doing that wasn't enough to determine the exact weather patterns at that time."

Because of the complex topography and microclimate surrounding Diablo Canyon, Lucas said, the model uncertainty was high, and he and Simpson had to devise large ensembles of simulations using weather and dispersion models. As part of a recent Laboratory Directed Research & Development (LDRD) project led by retired LLNL scientist Ron Baskett and LLNL scientist Philip Cameron-Smith, they ran 40,000 plume simulations, each taking about 10 hours to complete, while varying parameters such as the wind, the location of the release and the amount of material.

One of their major goals was to reconstruct the amount, the location and the time of the release when the weather is uncertain. To improve this inverse modeling capability, they enlisted the help of Devin Francom, a Lawrence Graduate Scholar in the Applied Statistics Group at LLNL. Under the direction of Bruno Sanso in the Department of Statistics at UC Santa Cruz, and his LLNL mentor and statistician Vera Bulaevskaya, Francom developed a statistical model that was used to analyze the air concentration output obtained from these runs and to estimate the parameters of the release.

This model, called Bayesian multivariate adaptive regression splines (BMARS), was the subject of Francom's dissertation, which he recently defended. BMARS is a powerful tool for analyzing simulations such as the ones obtained by Simpson and Lucas. Because it is a statistical model, it does not just produce point estimates of quantities of interest, but also provides a full description of the uncertainty in these estimates, which is crucial for decision-making in the context of emergency response. Moreover, BMARS is particularly well suited to the large number of runs in this problem because, compared to most statistical models used for emulating computer output, it is much better at handling massive amounts of data.

"We were able to solve the inverse problem of finding where the material comes from based on the forward models and instruments in the field," Francom said. "We could say, 'it came from this area and it was over this timeframe and this is how much was released.' Most importantly, we could do that fairly accurately and give a margin of error associated with our estimates. This is a fully probabilistic framework, so uncertainty was propagated every step of the way."
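The idea of inverting a forward model while carrying uncertainty along can be illustrated with a deliberately simplified sketch. It is not the LLNL workflow: the one-dimensional `forward` function, the sensor layout, the noise level and the parameter grids are all hypothetical stand-ins, and the toy forward model is called directly where the researchers used the BMARS emulator trained on WRF and FLEXPART runs. The sketch only shows how a posterior over release location and amount yields both an estimate and a margin of error.

```python
import math
import random

def forward(x0, q, sensors, spread=2.0):
    """Toy forward model (assumed): concentration at each sensor
    from a source at position x0 releasing amount q."""
    return [q * math.exp(-0.5 * ((s - x0) / spread) ** 2) for s in sensors]

def grid_posterior(obs, sensors, x_grid, q_grid, noise_sd):
    """Posterior over (x0, q) on a grid, assuming a flat prior
    and independent Gaussian measurement noise."""
    log_post = {}
    for x0 in x_grid:
        for q in q_grid:
            pred = forward(x0, q, sensors)
            sse = sum((o - p) ** 2 for o, p in zip(obs, pred))
            log_post[(x0, q)] = -0.5 * sse / noise_sd ** 2
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(log_post.values())
    weights = {k: math.exp(v - peak) for k, v in log_post.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}

def summarize(post, index):
    """Posterior mean and standard deviation of one parameter
    (index 0 = location, index 1 = amount)."""
    mean = sum(k[index] * p for k, p in post.items())
    var = sum((k[index] - mean) ** 2 * p for k, p in post.items())
    return mean, math.sqrt(var)

# Synthetic "field measurements" from a known release (all values hypothetical).
random.seed(0)
sensors = [float(s) for s in range(0, 21, 2)]
true_x0, true_q, noise_sd = 7.0, 5.0, 0.3
obs = [c + random.gauss(0.0, noise_sd) for c in forward(true_x0, true_q, sensors)]

x_grid = [i * 0.1 for i in range(201)]       # candidate locations, 0 to 20
q_grid = [1.0 + i * 0.1 for i in range(91)]  # candidate amounts, 1 to 10
post = grid_posterior(obs, sensors, x_grid, q_grid, noise_sd)

x_mean, x_sd = summarize(post, 0)
q_mean, q_sd = summarize(post, 1)
print(f"location: {x_mean:.2f} +/- {x_sd:.2f}")
print(f"amount:   {q_mean:.2f} +/- {q_sd:.2f}")
```

A brute-force grid like this is only workable for a cheap forward model with two unknowns. When each run takes hours, as the plume simulations here did, a fast statistical emulator is what makes evaluating many candidate releases practical.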

Surprisingly, the location that Francom's method suggested conflicted with information in technical reports of the experiment. Further investigation revealed a discrepancy in how the coordinates were recorded when the 1986 test was performed.

"When we went back and looked at the recorded location of the 1986 release, it did not seem to match the qualitative description of the researchers," Francom said. "Our prediction suggested the qualitative description of the location was more probable than the recorded release location. We didn't expect to find that. It was neat to see that we could find the possible inaccuracy in the records, and learn what we think is the true location through our particle dispersion models and the statistical emulator."

"This analysis is a very powerful example of the physics models, statistical methods, data and modern-day computational arsenal coming together to provide meaningful answers to questions involving complex phenomena," Bulaevskaya said. "Without all of these pieces, it would have been impossible to obtain accurate estimates of the release characteristics and correctly describe the degree of confidence we have in these values."

Lucas said the researchers would eventually like to have a model that runs quickly, because in an actual event they would need to know right away when and where the release occurred and how much was released. "Fast emulators, such as BMARS, give us the ability to obtain estimates of these quantities rather quickly," Lucas said. "If radiological material is released to the atmosphere and detected by downwind sensors, the emulator could give information about dangerous areas and could potentially save lives."

Francom will be moving on to Los Alamos National Laboratory to continue his work on statistical emulators for analyzing complex computer codes. Lucas and Simpson, along with Cameron-Smith and Baskett, have a paper on inverse modeling of the Diablo Canyon data that is being revised for the journal Atmospheric Chemistry and Physics. Francom and co-authors have submitted another paper, focusing on the use of BMARS in this problem, to a statistics journal, where it is undergoing peer review.

Journal information: Atmospheric Chemistry and Physics

Provided by Lawrence Livermore National Laboratory