Relative Citation Ratio: Scientists publish new metric to measure the influence of scientific research

A group from the National Institutes of Health's Office of Portfolio Analysis has developed a new metric, known as the Relative Citation Ratio (RCR), which will allow researchers and funders to quantify and compare the influence of scientific articles. While the RCR cannot replace expert review, it does overcome many of the issues plaguing previous metrics. In a Meta-Research Article publishing on September 6 in the open-access journal PLOS Biology, George Santangelo and colleagues describe RCR, which measures the influence of a scientific publication in a way that is article-level and field-independent.

Historically, bibliometrics were calculated at the journal level, and the influence of an article was assumed based on the journal in which it was published. These journal-level metrics are still widely used to evaluate individual articles and researcher performance, based on the flawed assumption that all articles published by a given journal are of equal quality and that high quality science is not published in low impact factor journals. This has highlighted the need for article-level metrics.

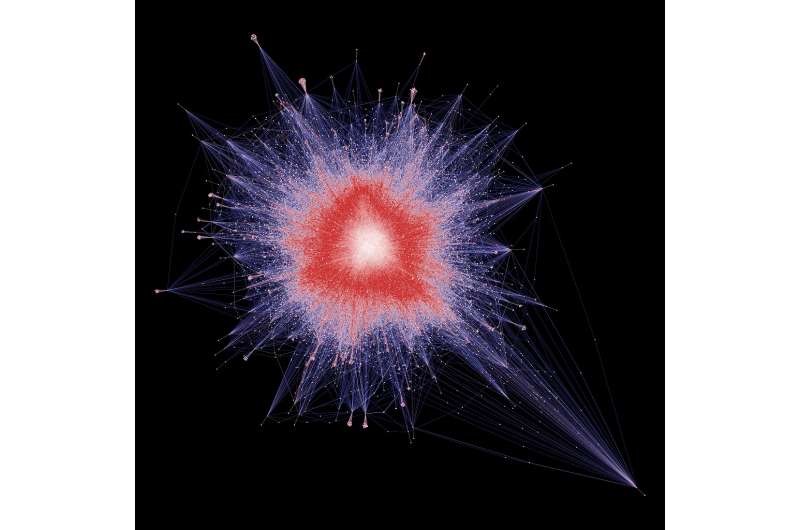

Citation is the primary mechanism for scientists to recognize the importance of each other's work, but citation practices vary widely between fields. In the article "Relative Citation Ratio (RCR): A new metric that uses citation rates to measure influence at the article level", the authors describe a novel method for field-normalization: the co-citation network. The co-citation network is formed from the reference lists of articles that cite the article in question. For example, if Article X is cited by Article A, Article B, and Article C, then the co-citation network of Article X would contain all the articles from the reference lists of Articles A, B, and C. Comparing the citation rate of Article X to the citation rate in the co-citation network allows each article to create its own individualized field.
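The Article X example can be sketched in a few lines of Python. This is only an illustration of the idea described above, not the authors' code: the article labels, the citation rates, and the function names are all made up, and two simplifying assumptions are made, namely that Article X itself is left out of its own network and that the network's citation rate is the plain average of its members' rates.

```python
from statistics import mean
from typing import Dict, List, Set


def co_citation_network(target: str, references: Dict[str, List[str]]) -> Set[str]:
    """Collect every article that appears in the reference list of a paper citing `target`.

    `references` maps each article to the articles in its reference list.
    """
    network: Set[str] = set()
    for article, refs in references.items():
        if target in refs:  # this article cites the target
            network.update(refs)
    # Assumption: the target is not counted as part of its own field.
    network.discard(target)
    return network


def field_normalized_ratio(
    target: str,
    references: Dict[str, List[str]],
    citation_rate: Dict[str, float],
) -> float:
    """Divide the target's citation rate by the average rate in its co-citation network."""
    network = co_citation_network(target, references)
    # Assumption: the field rate is a simple mean over the network's articles.
    field_rate = mean(citation_rate[a] for a in network)
    return citation_rate[target] / field_rate


# Hypothetical data: A, B and C cite X; D does not.
references = {
    "A": ["X", "P", "Q"],
    "B": ["X", "Q", "R"],
    "C": ["X", "S"],
    "D": ["P", "R"],
}
# Hypothetical citations per year for each article.
citation_rate = {"X": 6.0, "P": 2.0, "Q": 4.0, "R": 3.0, "S": 3.0}

network = co_citation_network("X", references)
ratio = field_normalized_ratio("X", references, citation_rate)
```

In this toy data set, the network of Article X is P, Q, R and S, whose rates average 3.0 citations per year, so Article X is cited at twice the rate of its own field.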

In addition to using the co-citation network, RCR is also benchmarked to a peer comparison group, so that it is easy to determine the relative impact of an article. The authors argue that this unique benchmarking step is particularly important as it allows 'apples to apples' comparisons between groups of papers, e.g. comparing research output between similar types of institutions or between developing nations.
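The effect of benchmarking can be illustrated with a deliberately simplified sketch. It assumes each article already has a field-normalized ratio and rescales those ratios so that the peer comparison group averages 1.0. The paper's own benchmarking procedure is more involved and is not reproduced here; the function name and all numbers below are hypothetical.

```python
from statistics import mean
from typing import Dict, Iterable


def benchmark_to_peers(
    raw_ratios: Dict[str, float], peer_group: Iterable[str]
) -> Dict[str, float]:
    """Rescale field-normalized ratios so the peer group's mean becomes 1.0.

    Assumption: a simple mean is used as the baseline; this is an
    illustrative stand-in, not the published procedure.
    """
    baseline = mean(raw_ratios[a] for a in peer_group)
    return {article: ratio / baseline for article, ratio in raw_ratios.items()}


# Hypothetical field-normalized ratios for four articles.
raw_ratios = {"W": 4.0, "X": 2.0, "Y": 1.0, "Z": 3.0}
# Hypothetical peer comparison group.
peers = ["Y", "Z"]

benchmarked = benchmark_to_peers(raw_ratios, peers)
```

After rescaling, a value of 1.0 means "cited as often as the average peer-group article in its field", which is what makes scores from different fields and institutions directly comparable.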

The authors demonstrate that their quantitative RCR values correlate well with the qualitative opinions of subject experts. Many in the scientific community already support the use of RCR. Dr. Stefano Bertuzzi, Executive Director of the American Society for Microbiology, says in a blog post that RCR "evaluates science by putting discoveries into a meaningful context. I believe that RCR is a road out of the JIF [Journal Impact Factor] swamp." Additionally, two studies have already been published comparing RCR to other, simpler metrics, with RCR outperforming those metrics with regard to correlation with expert opinion. However, critics warn that the complexity of the calculations behind RCR impedes transparency. To counter this, the authors and the NIH provide full access to the algorithms and data used to calculate RCR. They also provide a free, easy-to-use web tool for calculating the RCR of articles listed in PubMed at https://icite.od.nih.gov.

One of the primary criticisms raised against RCR is that, due to the field normalization method, it could undervalue inter-disciplinary work, especially for researchers who work in fields with low citation rates. The authors investigated this possibility, but found little evidence in their analyses that inter-disciplinary work is penalized by the RCR calculation.

While RCR represents a major advance, the authors acknowledge that it should not be used as a substitute for expert opinion. Although it measures an article's influence, it does not measure impact, importance, or intellectual rigor. It is also too early to apply RCR in assessing individual researchers. Senior author George Santangelo explains that "no number can fully represent the impact of an individual work or investigator. Neither RCR nor any other metric can quantitate the underlying value of a study, nor measure the importance of making progress in solving a particular problem." While the gold standard of assessment will continue to be qualitative review by experts, Santangelo says RCR can assist in "the dissemination of a dynamic way to measure the influence of articles on their respective fields".

More information: Hutchins BI, Yuan X, Anderson JM, Santangelo GM (2016) Relative Citation Ratio (RCR): A New Metric That Uses Citation Rates to Measure Influence at the Article Level. PLOS Biology 14(9): e1002541. DOI: 10.1371/journal.pbio.1002541

Journal information: PLOS Biology

Provided by National Institutes of Health