UTA prepares Titan supercomputer to process data from LHC experiments

University of Texas at Arlington physicists are preparing the Titan supercomputer at the Oak Ridge Leadership Computing Facility in Tennessee to support the analysis of data generated by the quadrillions of proton collisions expected during this season's Large Hadron Collider particle physics experiments.

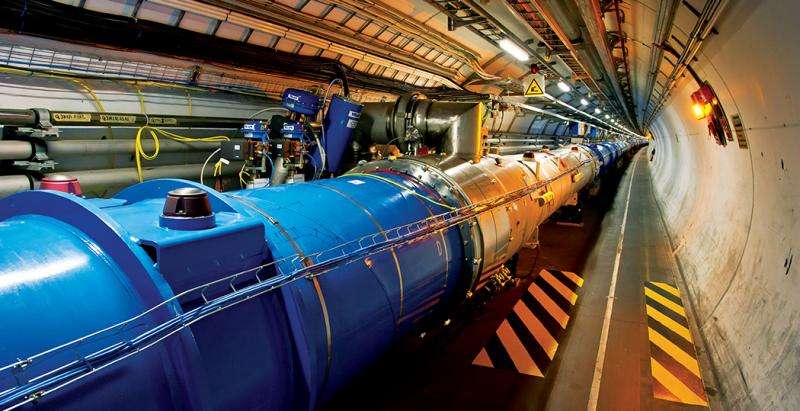

The LHC is the world's most powerful particle accelerator, located at the European Organization for Nuclear Research (CERN) near Geneva, Switzerland. Its collisions produce subatomic fireballs of energy that morph into the fundamental building blocks of matter.

The four main particle detectors located on the LHC's ring allow scientists to record and study the properties of these building blocks and to search for new fundamental particles and forces.

"Some of the biggest challenges of these projects are the computing and data analysis," said physics professor Kaushik De, who leads UTA's High-Energy Physics group and supports the computing arm of these international experiments. "We need much more capacity now than in the past.

"Three years ago we started a joint program to explore the possibility of using one of the most powerful supercomputers in the world, Titan, as part of our global network of computing sites, and it has proved to be a big success. We are now processing millions of hours of computer cycles per week on Titan."

Morteza Khaledi, dean of UTA's College of Science, said that this research is one of the critical areas of worldwide significance aligned with UTA's focus on data-driven discovery as the University implements its Strategic Plan 2020: Bold Solutions | Global Impact.

"Our key role in this international project also provides opportunities for students and faculty to participate in global projects at the very highest level," Khaledi said.

De, who joined the University in 1993, designed the workload management system known as PanDA (Production and Distributed Analysis) to handle data analysis jobs for the LHC's ATLAS experiment. During the LHC's first run, from 2010 to 2013, PanDA made ATLAS data available for analysis by 3,000 scientists around the world using the LHC's global grid of networked computing resources.

The latest version, known as Big PanDA, schedules jobs opportunistically on Titan, using capacity that would otherwise sit idle so that its work does not conflict with Titan's ability to schedule its traditional, very large, leadership-class computing jobs.
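To make "opportunistic" scheduling concrete, here is a minimal Python sketch of backfill-style placement. It assumes, hypothetically, that the scheduler can report how many nodes are currently idle and how many minutes remain before a reserved leadership-class job claims them. The names `Window`, `Job` and `fill_window` are illustrative only; this is not Big PanDA's actual code or Titan's real scheduler interface.

```python
from dataclasses import dataclass

# Hypothetical illustration only; not Big PanDA's or Titan's real interface.


@dataclass
class Window:
    """An idle slot: free nodes and minutes until a large reserved job starts."""
    free_nodes: int
    minutes_until_reserved: int


@dataclass
class Job:
    """A small queued job that could run in an idle slot."""
    name: str
    nodes: int
    minutes: int


def fill_window(window: Window, queue: list[Job]) -> list[Job]:
    """Greedily pick queued jobs that fit entirely inside the idle window.

    A job is accepted only if it needs no more nodes than remain free and
    finishes before the reserved large job is due to start, so it never
    delays that job. Accepted jobs run side by side on disjoint nodes.
    """
    chosen: list[Job] = []
    nodes_left = window.free_nodes
    # Try larger jobs first so the idle capacity is used more fully.
    for job in sorted(queue, key=lambda j: j.nodes, reverse=True):
        if job.nodes <= nodes_left and job.minutes <= window.minutes_until_reserved:
            chosen.append(job)
            nodes_left -= job.nodes
    return chosen


if __name__ == "__main__":
    window = Window(free_nodes=300, minutes_until_reserved=90)
    queue = [
        Job("sim-a", nodes=200, minutes=60),
        Job("sim-b", nodes=150, minutes=45),
        Job("sim-c", nodes=80, minutes=120),
        Job("sim-d", nodes=100, minutes=30),
    ]
    for job in fill_window(window, queue):
        print(job.name, job.nodes, job.minutes)
```

With these made-up numbers the sketch selects `sim-a` and `sim-d`: `sim-b` no longer fits once `sim-a` takes 200 nodes, and `sim-c` would still be running when the reserved job needs its nodes. A production scheduler weighs far more factors; the greedy largest-first rule here is simply an easy-to-follow stand-in.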

"This integration of the workload management system on Titan—the first large-scale use of leadership class supercomputing facilities fully integrated with PanDA to assist in the analysis of experimental high-energy physics data—will have immediate benefits for ATLAS," De said.

UTA currently hosts the ATLAS SouthWest Tier 2 Center, one of more than 100 centers around the world where massive amounts of data from the particle collisions are fed and analyzed.

De leads a team of 30 UTA researchers dedicated to the ATLAS project. His research has generated more than $30 million in research funding for UTA during the past two decades.

During the first run of the LHC, scientists on the ATLAS and CMS experiments discovered the Higgs boson, the cornerstone of the Standard Model that helps explain the origins of mass.

"So far the Standard Model seems to explain ordinary matter, but we know there has to be something beyond the Standard Model," said Alexander Weiss, chair of the UTA Physics Department. "The understanding of this new physics can only be uncovered with new experiments such as those to be performed in the next LHC run."

For example, the Standard Model contains no explanation of gravity, which is one of the four fundamental forces in the universe. It also does not explain astronomical observations of dark matter, a type of matter that interacts with our visible universe only through gravity, nor does it explain why matter prevailed over antimatter during the formation of the early universe. The small mass of the Higgs boson also suggests that matter is fundamentally unstable.

The new LHC data will help scientists verify the Standard Model's predictions and push beyond its boundaries. Many predicted and theoretical subatomic processes are so rare that scientists need billions of collisions to find just a small handful of events that are clean and scientifically interesting. Scientists also need an enormous amount of data to precisely measure well-known Standard Model processes. Any significant deviations from the Standard Model's predictions could be the first step towards new physics.

Provided by University of Texas at Arlington