Spin doctoring is deliberate manipulation. I don't think everyday research spin is intended to deceive, though. Mostly it's because researchers want to get attention for their work and so many others do it, it seems normal. Or they don't know enough about minimizing bias. It takes a lot of effort to avoid bias in research reporting.

Regardless of why it's done, the effect of research spin is the same. It presents a distorted picture. And when that collides with readers who don't have enough time, inclination, or awareness to see the spin, people are misled. It's one way strong beliefs keep being shored up by flimsy foundations.

A classic example of research spin kept flying through my Twitter feed this last week. The message was one that must have kicked a lot of confirmation bias dust into the eyes of people who pushed it along: more grist for the scientists-don't-write-well mill. It's a good example to discuss the mechanics of research reporting bias, because the topic (though not the paper) is easy to understand for anyone who reads scientific literature: the language used in abstracts in science journals. But a little background before we get to it.

Research spin is when findings are made to look stronger or more positive than is justified by the study. You can do it by distracting people from negative results or limitations by getting them to focus on the outcomes you want – or even completely leaving out results that mess up the message you want to send. You can use words to exaggerate claims beyond what data support – or to minimize inconvenient results.

t was ironic that this example was a study of abstracts. Abstracts evolved as marketing blurbs for papers – and they're the mother lode of research spin. (More on that in this post.) Other than the title, it's the part of a paper that will be read the most. And with some exceptions I'll get to later, there's not all that much guidance for how to do this critical research reporting step well.

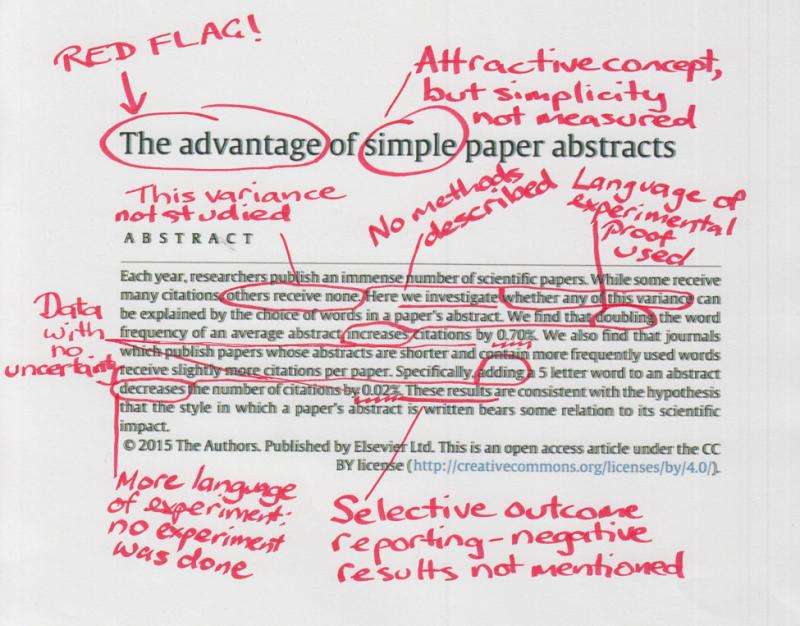

This is the study, by Adrian Letchford and colleagues. They conclude their statistical modeling data supports their hypothesis that papers with harder-to-understand abstracts get cited less. I've scribbled pointers to red flags for research spin on the title and abstract and I'll flesh those out as we go. I, and most others in my Twitter circle I expect, first heard of this study because Retraction Watch decided this study was worth covering.

Right from the title, it's clear this is not an objective description of a research project. And that's usually not a good sign. Out of the many analyses the authors report in the paper and its supplementary information, in the abstract they include only some that confirm their hypothesis – with no mention that there were some that don't. For example, one analysis found that among the papers published in the same journal, the longer abstracts were associated with increased citations.

There's no explanation in the abstract of what kind of study it was. But the language chosen sounds like an experimental study's results. For example: "Doubling the word frequency of an average abstract increases citations by 0.70 percent". There was no experiment of what happens when you double "word frequency" of an abstract. The data come from statistical modeling, with various assumptions and components. "Word frequency" here might not mean what you think it means. (It's confusing: more on that later.)

Just what difference these numbers might mean is not put in any context. There's just a very precise number, without showing how much uncertainty is associated with that result.

Let's go to the authors' aim and hypothesis. Did they investigate the reasons for variance between studies that have many citations and those that have none as the abstract implies? Could this study support the authors' hypothesis and conclusions?

The first step is what's known so far about whether citation rates are affected by the language and length of abstracts. The paper cites two previous studies: one was from 2013, and the authors found no impact of length of abstract. The other was from 2015, and those authors found that shorter abstracts were associated with fewer citations – except for mathematics and physics. Using more common and simpler words was also associated with fewer citations.

So from the paper itself, we already know that these authors' conclusions conflict with other research. When I looked at the citations of that 2013 study in Google Scholar, I quickly found another two studies [PDF, PDF], and they had similar results. (There could be more.)

Why, then, did this group do their study – and without a thorough search for existing evidence? They write that they found the results of those two studies "surprising", "in the light of evidence from psychological experiments" about reading. At some point in the process, they were "inspired by the idea that more frequently used words might require a lower cognitive load to understand".

It's hard to see the direct relevance to scientists' work of this hypothesis. Most citations in the scientific literature should be by authors with a reasonable grasp of the subject they are writing about, and its jargon. If a title or abstract is not using the expected jargon, you could assume it could be overlooked. But unless a paper is truly undecipherable, why would we avoid citing papers with abstracts dense with jargon? (You might assume that journalists could gravitate more towards papers with abstracts they find easy to understand, but media attention is not part of the mechanism to increased citation these authors hypothesize.)

But that's why they did it. So how did they go about it, and what were the risks of bias in their approach?

They took 10 years' worth of science journal articles and citation data from the Web of Science. Because of limits to what can be downloaded, they took only the top 1 percent most highly cited papers from each year. There wasn't a sample size calculation. (Presumably only English language abstracts were included.)

The 1 percenters were in around 3,000 journals a year. All journals with 10 or fewer papers in the top 1 percent in each year were excluded. That brought their sample down to 1 percenters concentrated in around 500 journals a year. (There was no data for papers that weren't highly cited.)

What fields of science these journals come from wasn't analyzed. The authors argue that factoring out journals in some analyses means "any effect of differences between fields is also accounted for". No analysis was provided to support that. Journals with policies with very short word limits for abstracts (like Science) weren't analyzed separately either.

And then they started doing lots of analyses and building successive models. There's always a risk of pulling up flukes doing that, even though they used a statistical method to try to reduce the risk. How many analyses were planned beforehand wasn't reported. (You can read more about why that's important here.)

For the final model that arrives at the numbers used in the abstract, data on authors are added out of the blue, with no explanation of why. By that point in the article, I found it hard not to wonder what other analyses had been run but not reported.

What counted as "simple" language across all these disciplines? How often abstracts used frequently used words in Google Books. That's not the same as writing simply. And just because professional jargon isn't in widespread use among book authors, doesn't mean that the words aren't in frequent use within a professional group.

Here's the key bit that explains their use of "word frequency":

We find that the average abstract contains words that occur 4.5 times per million words in the Google Ngram dataset. According to our model, doubling the median word frequency of an average abstract to 9 times per million words will increase the number of times it is cited by approximately 0.74 percent [sic]. If the average English word is approximately 5 letters long, then removing a word from an abstract increases the number of citations by 0.02 percent according to our model.

It's not clear that the Google dataset shows us the simplest words. For example, "genetic" and "linear" appear far more frequently than words like "innovative", "breakthrough", or even "apple". That doesn't mean linear is a simpler word than apple. One of the purposes of jargon is precision: removing it while also using fewer words doesn't necessarily make something simple to read either. And for much research, a big part of a good abstract is numbers and statistical terms.

Cody Weinberger and colleagues, who conducted the 2015 study referred to above, have very different recommendations. They conclude that writing with the jargon needed for articles to be retrieved is critical for citation:

An intriguing hypothesis is that scientists have different preferences for what they would like to read versus what they are going to cite. Despite the fact that anybody in their right mind would prefer to read short, simple, and well-written prose with few abstruse terms, when building an argument and writing a paper, the limiting step is the ability to find the right article.

Good communication is important. Obscure communication makes it harder to work out what researchers actually did – and that can push people into simply relying on the researchers' claims. (The word frequency issue in this paper about abstracts is a good case in point!)

In clinical research, there's a strong and growing set of standards helping us improve research reporting – including in our abstracts. See for example reporting standards for abstracts of clinical trials [PDF]. (More about the origins of organizing to improve research reporting from the brilliant Doug Altman here.) Many of us are also working to ensure that there good descriptions of research and other jargon to help non-experts make sense of research (for example here).

Good science should be transparent. The writing and data presentation should be a clear window into it. Ultimately, though, methodologically strong research reporting that avoids spin is far more critical an issue for good science than the use of jargon in abstracts.

More information: Matthew E. Falagas et al. The Impact of Article Length on the Number of Future Citations: A Bibliometric Analysis of General Medicine Journals, PLoS ONE (2013). DOI: 10.1371/journal.pone.0049476 Cody J. Weinberger et al. Ten Simple (Empirical) Rules for Writing Science, PLOS Computational Biology (2015). DOI: 10.1371/journal.pcbi.1004205 Cody J. Weinberger et al. Ten Simple (Empirical) Rules for Writing Science, PLOS Computational Biology (2015). DOI: 10.1371/journal.pcbi.1004205

Journal information: PLoS Computational Biology , PLoS ONE

Provided by Public Library of Science

This story is republished courtesy of PLOS Blogs: blogs.plos.org.