Researchers release open source code for powerful image detection algorithm

A UCLA Engineering research group has made public the computer code for an algorithm that helps computers process images at high speeds and "see" them in ways that human eyes cannot. The researchers say the code could eventually be used in face, fingerprint and iris recognition for high-tech security, as well as in self-driving cars' navigation systems or for inspecting industrial products.

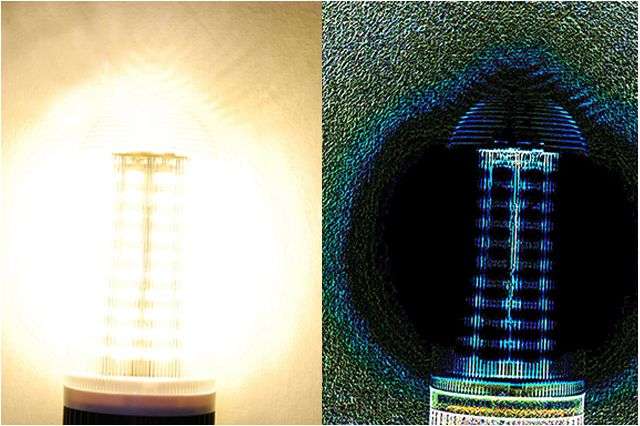

The algorithm performs a mathematical operation that identifies objects' edges and then detects and extracts their features. It can also enhance images and recognize objects' textures.

The algorithm was developed by a group led by Bahram Jalali, a UCLA professor of electrical engineering and holder of the Northrop Grumman Chair in Optoelectronics, and senior researcher Mohammad Asghari.

It is available for free download on two code-sharing platforms, GitHub and MATLAB File Exchange. Making it available as open source code will allow researchers to work together to study, use and improve the algorithm, and to freely modify and distribute it. It will also enable users to incorporate the technology into computer vision, pattern recognition and other image-processing applications.

The algorithm, known as the Phase Stretch Transform, is a physics-inspired computational approach to processing images and information. It grew out of UCLA research on a technique called photonic time stretch, which has been used for ultrafast imaging and for detecting cancer cells in blood.
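To give a feel for how a phase-based edge detector of this kind behaves, the Python below is a minimal one-dimensional sketch. It is not the released code. It assumes the general recipe described in published work on the Phase Stretch Transform: smooth the signal with a low-pass filter, multiply its spectrum by a phase that grows nonlinearly with frequency, transform back, and read edges from the phase of the result. The kernel shape, the function names (`phase_stretch_1d`, `edge_positions`) and the parameters (`strength`, `warp`, `sigma`, `threshold`) are illustrative assumptions. A naive Fourier transform stands in for an FFT so the example needs only the standard library.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(N^2) discrete Fourier transform; slow but dependency-free."""
    n = len(values)
    sign = 1 if inverse else -1
    result = []
    for k in range(n):
        total = sum(v * cmath.exp(sign * 2j * math.pi * k * t / n)
                    for t, v in enumerate(values))
        result.append(total / n if inverse else total)
    return result


def warp_profile(u):
    """Assumed kernel shape u*atan(u) - ln(1 + u^2)/2: quadratic near 0, near-linear for large u."""
    return u * math.atan(u) - 0.5 * math.log1p(u * u)


def phase_stretch_1d(signal, strength=0.5, warp=1.0, sigma=1.0):
    """Hypothetical 1-D phase-stretch edge response (illustrative sketch only).

    strength: largest phase (radians) the kernel applies, reached at the Nyquist frequency.
    warp:     how strongly the kernel bends between quadratic and linear growth.
    sigma:    width (rad/sample) of the Gaussian low-pass smoothing filter.
    """
    n = len(signal)
    spectrum = dft(signal)
    norm = warp_profile(warp * math.pi)  # kernel value at the highest frequency
    shaped = []
    for k, coeff in enumerate(spectrum):
        # Signed angular frequency of bin k, in [-pi, pi).
        omega = 2 * math.pi * (k if 2 * k < n else k - n) / n
        smoothing = math.exp(-0.5 * (omega / sigma) ** 2)
        phi = strength * warp_profile(warp * abs(omega)) / norm
        shaped.append(coeff * smoothing * cmath.exp(1j * phi))
    # Flat regions come back with ~zero phase; edges leave a phase signature.
    return [cmath.phase(z) for z in dft(shaped, inverse=True)]


def edge_positions(phase, threshold=0.5):
    """Indices whose |phase| is at least `threshold` times the strongest response."""
    peak = max(abs(p) for p in phase)
    if peak == 0:
        return []
    return [i for i, p in enumerate(phase) if abs(p) >= threshold * peak]


if __name__ == "__main__":
    # A bright plateau (2.0) on a dim background (1.0), with steps at 16 and 48.
    row = [2.0 if 16 <= i < 48 else 1.0 for i in range(64)]
    print(edge_positions(phase_stretch_1d(row)))
```

On the sample row, the strongest responses should cluster around the two brightness steps, while the middle of the plateau returns a phase near zero. A version for images would operate on two-dimensional spectra with an FFT; any post-processing the released code performs is not reproduced here.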

The algorithm also helps computers see features of objects that aren't visible with standard imaging techniques. For example, it might be used to detect an LED lamp's internal structure, which conventional techniques would leave obscured by the brightness of its light, and it can reveal distant stars that would normally be invisible in astronomical images.

Provided by University of California, Los Angeles