Disney Research and Carnegie Mellon University scientists have found that three computer vision methods commonly used to reconstruct 3-D scenes capture facial details better when they are performed simultaneously rather than independently.

Photometric stereo (PS), multi-view stereo (MVS) and optical flow (OF) are well-established methods for reconstructing 3-D scenes. Each has its own strengths and weaknesses, and these often complement one another. By combining the three into a single technique, called photogeometric scene flow (PGSF), the researchers exploited those complementary strengths and improved the quality and detail of the resulting 3-D reconstructions.

"The quality of a 3-D model can make or break the perceived realism of an animation," said Paulo Gotardo, an associate research scientist at Disney Research. "That's particularly true for faces; people have a remarkably low threshold for inaccuracies in the appearance of facial features. PGSF could prove extremely valuable because it can capture dynamically moving objects in high detail and accuracy."

Gotardo, working with principal research scientist Iain Matthews at Disney Research in collaboration with Tomas Simon and Yaser Sheikh of Carnegie Mellon's Robotics Institute, found that they could obtain better results by solving the three difficult problems simultaneously.

PS can capture the fine geometric detail of faces and other textureless objects by photographing them under different lighting conditions. The method is often used to enhance the detail of the low-resolution geometry obtained by MVS. However, the differently lit photographs are taken at slightly different moments, so a third technique, OF, is needed to compensate for the object's 3-D motion over time. When these three steps are performed one after another, image misalignments and other errors accumulate at each step and can lead to a loss of detail.
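As a rough illustration of the photometric stereo step, the following Python sketch implements the classic textbook formulation for a single pixel. It is not the PGSF method itself. It assumes a matte (Lambertian) surface, known distant light directions, no shadows and no motion between exposures, and the function name `solve_normal` is hypothetical. Each light direction l_k and measured brightness I_k give one equation, I_k = rho * (n · l_k). Three non-coplanar lights are enough to determine the surface normal n and albedo rho, and any additional lights turn the problem into a least-squares fit.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix (cofactor expansion along the first row)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve_normal(lights: Sequence[Vec3],
                 intensities: Sequence[float]) -> Tuple[Vec3, float]:
    """Textbook Lambertian photometric stereo for one pixel (hypothetical helper).

    Assumes: unit light directions, no shadows, a static surface point.
    Model: I_k = rho * dot(n, l_k). Solves the normal equations
    (L^T L) g = L^T I for g = rho * n, then splits g into rho = |g|
    and the unit normal n = g / |g|.
    """
    if len(lights) < 3 or len(lights) != len(intensities):
        raise ValueError("need at least 3 lights and one intensity per light")
    # A = L^T L (3x3) and b = L^T I (3-vector).
    a = [[sum(l[i] * l[j] for l in lights) for j in range(3)] for i in range(3)]
    b = [sum(l[i] * v for l, v in zip(lights, intensities)) for i in range(3)]
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("light directions are (nearly) coplanar")
    # Cramer's rule: component c of g uses A with column c replaced by b.
    g = []
    for c in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][c] = b[r]
        g.append(_det3(m) / det)
    rho = math.sqrt(sum(x * x for x in g))
    if rho < 1e-12:
        raise ValueError("pixel is black; normal is undefined")
    normal = (g[0] / rho, g[1] / rho, g[2] / rho)
    return normal, rho
```

For example, take lights along (0, 0, 1), (0.707, 0, 0.707) and (0, 0.707, 0.707), and a patch facing the camera with albedo 0.8. The observed brightnesses 0.8, 0.566 and 0.566 recover a normal of approximately (0, 0, 1) and an albedo of approximately 0.8. The equations assume every I_k comes from the same surface point, which is why motion between exposures degrades the result.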

"The key to PGSF is the fact that PS not only benefits from, but also facilitates the computation of MVS and OF," Simon said.

The researchers found that PGSF reconstructed facial details such as skin pores, eyes, brows, nostrils and lips better than other state-of-the-art techniques.

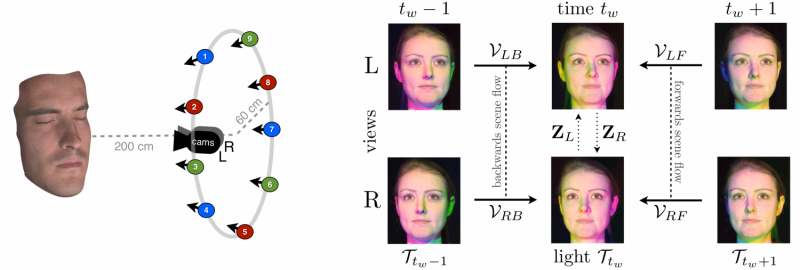

To perform PGSF, the researchers built an acquisition setup consisting of two cameras and nine directional lights in three different colors. The lights are multiplexed both in time and spectrally, so the appearance of an actor's face is sampled within a very short interval of just three video frames. This reduces the need for motion compensation and also minimizes self-shadowing, which can be problematic for 3-D reconstruction.
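The article does not spell out the lighting schedule, but the numbers fit a simple arrangement. Nine lights split across three colors leaves three lights per color. If each frame switches on one light of each color, all nine lights are sampled in three frames, and a color camera can tell the simultaneous lights apart by channel. The Python sketch below illustrates that assumption only. The function names `multiplex_schedule` and `demultiplex` are hypothetical, and so is the premise that each color channel sees only its own light, with no spectral crosstalk. None of these are details from the researchers.

```python
from typing import Dict, List, Sequence, Tuple

RGB = ("red", "green", "blue")


def multiplex_schedule(
    num_lights: int, colors: Sequence[str] = RGB
) -> Dict[int, List[Tuple[int, str]]]:
    """Hypothetical schedule: each frame switches on one light per color.

    Returns {frame index: [(light index, color), ...]}.
    """
    if num_lights % len(colors) != 0:
        raise ValueError("num_lights must be a multiple of the number of colors")
    schedule: Dict[int, List[Tuple[int, str]]] = {}
    for light in range(num_lights):
        # Consecutive lights share a frame; each gets a different color.
        frame, channel = divmod(light, len(colors))
        schedule.setdefault(frame, []).append((light, colors[channel]))
    return schedule


def demultiplex(
    frames: Sequence[Sequence[float]],
    schedule: Dict[int, List[Tuple[int, str]]],
    colors: Sequence[str] = RGB,
) -> Dict[int, float]:
    """Split one pixel's per-frame color samples into per-light intensities.

    Assumes each color channel responds only to the light of that color
    (no spectral crosstalk), which real cameras only approximate.
    """
    per_light: Dict[int, float] = {}
    for frame, lit in schedule.items():
        for light, color in lit:
            per_light[light] = frames[frame][colors.index(color)]
    return per_light
```

With `multiplex_schedule(9)`, frame 0 lights lights 0 to 2, frame 1 lights 3 to 5 and frame 2 lights 6 to 8. Three RGB samples of a pixel therefore yield nine single-light intensities, which is the kind of input photometric stereo needs. Packing the capture into three frames is what keeps the face nearly still across those samples.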

"The PGSF technique also can be applied to more complex acquisition setups with different numbers of cameras and light sources," Matthews said.

The researchers will present their findings on PGSF on Dec. 11 at ICCV 2015, the International Conference on Computer Vision, in Santiago, Chile.

More information: "Photogeometric Scene Flow for High-Detail Dynamic 3D Reconstruction" (PDF)

Provided by Disney Research