
Many of Shakespeare's plays depend on mistaken identity. In Twelfth Night, Viola disguises herself as a boy and is mistaken for her twin brother Sebastian, complicating an already complicated love triangle.

Don't forget, boys played girls back then. So we had a boy playing a girl pretending to be a boy mistaken for her twin brother. Have I lost you yet?

Not surprisingly, it usually ended in comedy or tragedy. Or both.

Shakespeare, of course, was only borrowing the dramatic idea of mistaken identity from the ancient Greeks.

But mistaken identity is set to trouble our digital lives. For this reason, I have an article coming out shortly in Communications of the ACM, the house magazine of the largest computing society, warning of some of the dangers ahead.

Who's behind the wheel?

One such danger arrives this weekend. Volvo will trial autonomous cars on the Southern Expressway in Adelaide, the first such trial in the southern hemisphere.

The law in South Australia was changed to permit the Transport Minister to approve such trials. But this change in the law doesn't require autonomous cars to identify themselves as distinct from cars driven by humans.

This wasn't much of a problem when the technology was big, bulky and easy to spot. But regular-looking cars can now be driven autonomously.

Indeed, if you have the latest Tesla S, you can simply update the software over the internet and turn it into an autonomous car, at least for highway driving.

But shouldn't an autonomous car have distinctive plates or flashing lights to stop it being mistaken for one driven by a human?

We already do this with learner drivers. And the fact that we are trialing driverless car technology means we are still in the learning phase.

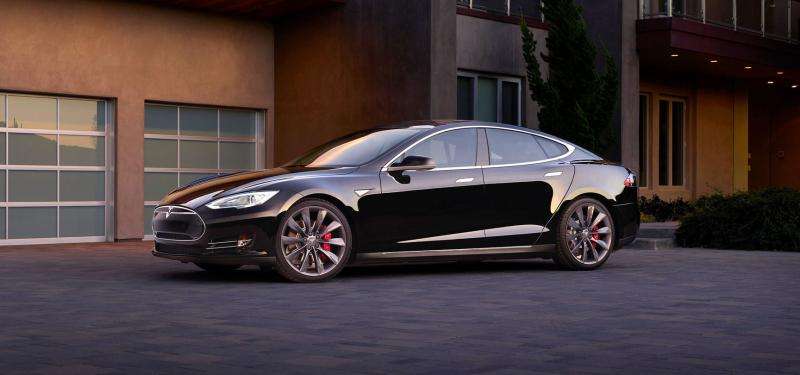

The Tesla S is capable of driving autonomously, but you’d never know just by looking at it. Credit: Tesla

There are a whole host of other reasons why the general public should know that the car in front is autonomous.

The Google cars driving autonomously around California have had around a dozen minor accidents. In almost every case, they were rear-ended by a human driver, who Google argues was at fault.

But I suspect some of those accidents were because the Google car stopped quickly, following the letter of the law too precisely when a human might just have driven on.

At a four-way intersection, autonomous cars struggle to follow the subtle body language and eye contact that human drivers use to decide who has priority.

And one day, autonomous cars will be far better drivers than humans, and we'll want to be able to tell them apart from cars driven by humans.

Red flag

There is, of course, useful historical precedent here. The UK Locomotive Act of 1865 required a person carrying a red flag to walk 60 yards in front of one of the newfangled "self-propelled machines".

This was perhaps a little too restrictive. Nevertheless, the intent was a good one: to protect society from a new technology, especially during a period of change.

Inspired by such historical precedents, I propose that we introduce laws to prevent autonomous systems from being mistaken for humans. In recognition of Alan Turing's contributions to AI, I am calling this the Turing Red Flag law.

One of Alan Turing's seminal contributions is the Turing Test. This asks whether a computer can be mistaken for a human. Alan Turing argued that when it can, we will have true artificial intelligence.

A Turing Red Flag law would require that any autonomous system be designed so that it cannot be mistaken for one controlled by a human.

Let's consider another area where a Turing Red Flag law would apply. Apple just reported record results. Minutes later, Associated Press put out a report that begins:

CUPERTINO, Calif. (AP) Apple Inc. (AAPL) on Tuesday reported fiscal first-quarter net income of $18.02 billion. The Cupertino, California-based company said it had profit of $3.06 per share. The results surpassed Wall Street expectations…

Read on, and you get to the kicker.

This story was generated by Automated Insights

Yes, a computer and not a journalist wrote the report. And indeed, a computer generates most of AP's financial reports.

A Turing Red Flag law would require that you be informed that the news article you are reading was generated by a computer and not by a person. And before you ask, this piece was written by a living, breathing human.

Similarly, a Turing Red Flag law would require that you be told that the poker bot taking all your money is a computer, or that the chat bot flirting with you on a dating site is not a person.

This way, we can leave cases of mistaken identity to the Bard and get on with our lives, safe in the knowledge of whether we're dealing with man or machine.

Source: The Conversation

This story is published courtesy of The Conversation (under Creative Commons-Attribution/No derivatives).
