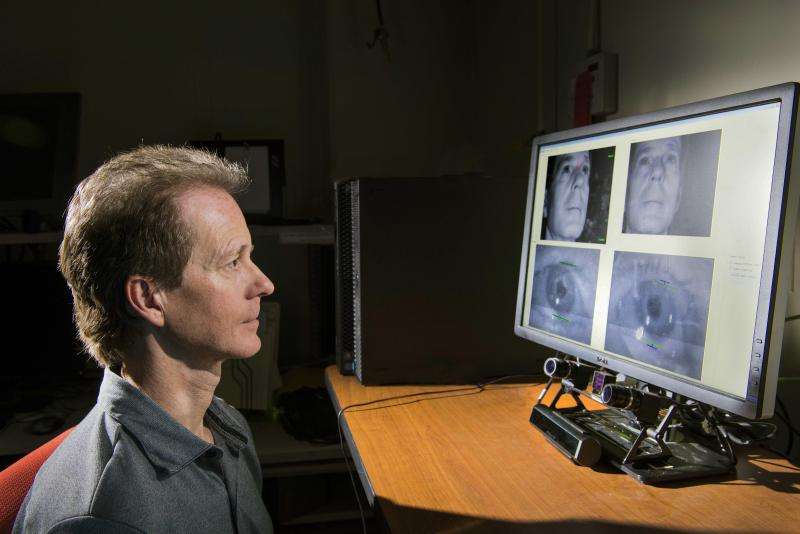

Sandia National Laboratories researcher Mike Haass demonstrates how an eye tracker under a computer monitor is calibrated to capture his eye movements on the screen. Haass and others are working with a San Diego-based small business, EyeTracking Inc., to figure out how to capture within tens of milliseconds the content beneath the point on the screen where the viewer is looking. Credit: Randy Montoya

Intelligence analysts working to identify national security threats in war zones, airports or elsewhere often flip through multiple images to create a video-like effect. They also may toggle between images at lightning speed, pan across images, zoom in and out or view videos or other moving records.

These dynamic images demand software and hardware tools that help intelligence analysts analyze the images more effectively and extract useful information more efficiently from vast amounts of quickly changing data, said Laura McNamara, an applied anthropologist at Sandia National Laboratories who has studied how certain analysts perform their jobs.

"Our core problem is designing computational information systems that make people better at getting meaningful information from those data sets, which are large and diverse and coming in quickly in high-stress environments," McNamara said.

A first step toward technological solutions for government agencies and industry grappling with this problem is a Cooperative Research and Development Agreement that Sandia has signed with EyeTracking Inc., a San Diego small business that specializes in eye tracking data collection and analysis.

"Both Sandia and EyeTracking are being helped by a direct link between each other," said EyeTracking president James Weatherhead. "The hope is for both sides to come out with these tools and feed solutions back to different government agencies."

Eye tracking monitors gazes, measures workload

In general, eye tracking measures the eyes' activity by monitoring a viewer's gaze on a computer screen, noting where viewers look and what they ignore and timing when they blink. Current tools work well for analyzing static images, such as pages from the children's picture book "Where's Waldo?", and for video in which researchers anticipate the content of interest, such as the placement of a product in a movie.

Sandia researcher Laura Matzen says such eye tracking data has been used in laboratory environments to study how people reason and how experts and novices differ in the ways they use information, but Sandia now needs to study real-world, dynamic environments.

If EyeTracking and Sandia can figure out ways to provide improved data analysis for dynamic images, Matzen said researchers can:

- design enhanced experiments or field studies using dynamic images;

- compare how people or groups of people interact with dynamic visual data;

- advance cognitive science research to explore how expertise affects visual cognition, which could be used to create more effective training programs; and

- inform new system designs, for example, to help scale up certain types of surveillance by partially automating some analyst steps or highlighting anomalies to help analysts notice them or make sense of them more quickly.

EyeTracking provides hardware and software; Sandia offers access to analysts

EyeTracking is the exclusive distributor of the FOVIO Eye Tracker, a camera about the size of a soda can that's placed under a computer monitor to track viewers' eye movements. The company was started by Sandra Marshall, a cognitive psychologist from San Diego State University, who has worked with colleagues to develop software packages for collecting and analyzing eye tracking data.

Under the agreement, Sandia researchers Dan Morrow and Mike Haass are working with EyeTracking to figure out how to capture within tens of milliseconds the content beneath the point on a screen where a viewer is looking.

"How soon does the analyst look at the target region? How long do they linger there? Do they ever get there?" Morrow asks. "If they are dwelling in another area, then we might go back after the fact to figure out why they are doing that."
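The questions Morrow poses correspond to simple measures that can be computed from time-stamped gaze samples. The Python sketch below is only an illustration under stated assumptions, not the method used by Sandia or EyeTracking: the `GazeSample` and `Region` types, the rectangular target region and the function name `target_metrics` are all hypothetical, and each sample is assumed to last until the next one arrives.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GazeSample:
    """One hypothetical gaze reading: a timestamp and a screen position."""
    t_ms: float
    x: float
    y: float


@dataclass
class Region:
    """A hypothetical rectangular target region in screen coordinates."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def target_metrics(samples: List[GazeSample],
                   region: Region) -> Tuple[Optional[float], float]:
    """Return (time to first look in ms, total dwell in ms).

    The first value is None if the gaze never enters the region.
    Assumes samples are sorted by time and that each sample lasts
    until the next one; the final sample contributes no dwell time.
    """
    first_look: Optional[float] = None
    dwell = 0.0
    for i, s in enumerate(samples):
        if not region.contains(s.x, s.y):
            continue
        if first_look is None:
            # "How soon does the analyst look at the target region?"
            first_look = s.t_ms - samples[0].t_ms
        if i + 1 < len(samples):
            # "How long do they linger there?"
            dwell += samples[i + 1].t_ms - s.t_ms
    return first_look, dwell


if __name__ == "__main__":
    demo = [
        GazeSample(0.0, 0.0, 0.0),
        GazeSample(10.0, 5.0, 5.0),
        GazeSample(20.0, 50.0, 50.0),
        GazeSample(30.0, 55.0, 55.0),
        GazeSample(40.0, 0.0, 0.0),
    ]
    first, dwell = target_metrics(demo, Region(40, 40, 60, 60))
    print("reached target:", first is not None)
    print("time to first look (ms):", first)
    print("dwell (ms):", dwell)
```

A result of `None` for the first value answers "Do they ever get there?" in the negative. A real analysis would likely group raw samples into fixations first; that step is omitted here.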

Until now, eye tracking research has shown how viewers react to stimuli on the screen. For example, a bare, black tree against a snow-covered scene will naturally attract attention. This type of bottom-up visual attention, where the viewer is reacting to stimuli, is well understood, Matzen said.

But what if the viewer is looking at the scene with a task in mind, like finding a golf ball in the snow? They might glance at the tree quickly, but then their gaze goes to the snow to search for the golf ball. This type of top-down visual cognition is not well understood and Sandia hopes to develop models that predict where analysts will look, she said.

Sandia researchers have worked with intelligence analysts to better understand how they do their jobs. In one experiment, the researchers filmed analysts at work and asked them to describe their thought processes at points in the video. But because the analysts' visual task strategies had become automatic over the course of their careers, they couldn't accurately describe how they did their jobs, McNamara said.

"We know a lot about information processing, the physiology and neuroscience of visual processing," she said. "How do we take that and apply it in these highly dynamic and real-world environments? The technologies are developed around a laboratory model as opposed to these real-world task environments."

Partnership could lead to software designs that keep end user in mind

McNamara says researchers need to anticipate analysts' decisions in real-world environments to create a model of top-down visual decision-making. "We want to understand how fixation on something leads to analyst decisions, such as detouring to get information from a different source," she said. "Right now, there's no way to do that kind of complex information foraging modeling and incorporate eye tracking. You can't do it, unless you want to go back and hand code every single fixation."

That's "incredibly tedious," Morrow said, so he and Haass are exploring how to match time-stamped data with the content the viewer is focused on as they toggle, zoom or pan through their work day.
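One way to picture the matching problem is as a lookup: for each time-stamped gaze sample, find the view that was on screen at that moment, then convert the screen position into a position in the underlying image. The Python sketch below is a minimal illustration under assumptions that do not come from Sandia or EyeTracking. The `ViewState` record, its fields and the function `content_under_gaze` are hypothetical, as is the convention that `offset_x` and `offset_y` give the image coordinate shown at the screen's top-left corner and that `zoom` is screen pixels per image pixel.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ViewState:
    """Hypothetical log entry written each time the analyst toggles, pans or zooms."""
    t_ms: float      # time at which this view became active
    image_id: str    # which image is on screen
    offset_x: float  # image x-coordinate shown at the screen's left edge
    offset_y: float  # image y-coordinate shown at the screen's top edge
    zoom: float      # screen pixels per image pixel


def content_under_gaze(views: List[ViewState], t_ms: float,
                       screen_x: float, screen_y: float
                       ) -> Optional[Tuple[str, float, float]]:
    """Map a time-stamped screen gaze point to (image_id, image_x, image_y).

    Assumes `views` is sorted by t_ms. Returns None if the gaze sample
    precedes the first logged view.
    """
    times = [v.t_ms for v in views]
    # Index of the latest view whose start time is <= the gaze timestamp.
    i = bisect_right(times, t_ms) - 1
    if i < 0:
        return None
    v = views[i]
    # Undo the zoom, then shift by the pan offset.
    image_x = v.offset_x + screen_x / v.zoom
    image_y = v.offset_y + screen_y / v.zoom
    return v.image_id, image_x, image_y


if __name__ == "__main__":
    log = [
        ViewState(0.0, "a", 0.0, 0.0, 1.0),
        ViewState(100.0, "b", 200.0, 100.0, 2.0),
    ]
    # The same screen position maps to different content before and
    # after the analyst switches images and zooms in.
    print(content_under_gaze(log, 50.0, 300.0, 400.0))
    print(content_under_gaze(log, 150.0, 300.0, 400.0))
```

The sketch rebuilds the list of timestamps on every call for clarity; a version meant for long recordings would build it once. It also ignores the delay between a view change and the display update, which matters when the goal is accuracy within tens of milliseconds.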

"You might build this great radar, for example, but if you haven't thought through how that data is interpreted, it's not going to be successful because it's the whole system including the human analyst that creates mission success," Morrow said.

The CRADA and several other projects at Sandia aim to strengthen the connections between humans and technology and to design systems with the end user in mind, McNamara said.

"Where this could end up going is ensuring that as we invest money on information and analysis environments for intelligence analysts who are facing this firehose of information, we don't give them software that increases their cognitive and perceptual load or that they just can't use," she said.

Provided by Sandia National Laboratories