Shooting "color" in the blackness of space

Why are so many pictures from spacecraft in black and white? It's a question I've heard, in one form or another, for almost as long as I've been talking with the public about space. And, to be fair, it's not a terrible inquiry. After all, the smartphone in my pocket can shoot something like ten high-resolution color images every second. It can automatically stitch them into a panorama, correct their color, and adjust their sharpness. All that for just a few hundred bucks. So why can't our billion-dollar robots do the same?

The answer, it turns out, brings us to the intersection of science and the laws of nature. Let's take a peek into what it takes to make a great space image…

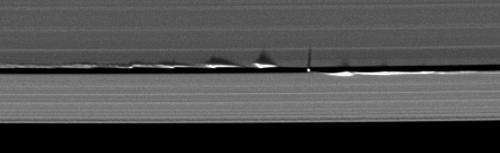

Perhaps the one thing that people most underestimate about space exploration is the time it takes to execute a mission. Take Cassini, for example. It arrived at Saturn back in 2004 for a planned four-year mission. The journey to Saturn, however, took about seven years, meaning that the spacecraft launched way back in 1997. And planning for it? Instrument designs were being developed in the mid-1980s! So, when you next see an astonishing image of Titan or the rings here at Universe Today, remember that the camera taking those shots is using technology that's almost 30 years old. That's pretty amazing, if you ask me.

But even back in the 1980s, the technology to create color cameras had been developed. Mission designers simply chose not to use it, and they had a couple of great reasons for making that decision.

Perhaps the most practical reason is that color cameras simply don't collect as much light. Each "pixel" on your smartphone sensor is really made up of four individual detectors, each sitting behind its own tiny color filter: one red, one blue, and two green (human eyes are more sensitive to green!). The camera's software combines the values of those detectors into the final color value for a given pixel. But what happens when a green photon hits a red detector? Nothing, and therein lies the problem. Color sensors only collect a fraction of the incoming light; the rest is simply lost information. That's fine here on Earth, where light is more or less spewing everywhere at all times. But the intensity of light follows one of those pesky inverse-square laws in physics, meaning that doubling your distance from a light source makes it look only a quarter as bright.

That means that a spacecraft orbiting Jupiter, which is about five times farther from the Sun than Earth is, sees only four percent as much light as we do. And Cassini, at Saturn, sees the Sun roughly one hundred times fainter than we do. To make a good, clear image, space cameras need to make use of every bit of the little light available to them, which means making do without those fancy color pixels.
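To put rough numbers on both effects, here is a minimal sketch under simplifying assumptions: an idealized 2×2 red-green-green-blue filter tile whose filters don't overlap, incoming light split evenly among red, green, and blue, and round average orbital distances of about 5.2 AU for Jupiter and 9.5 AU for Saturn. The function names are made up for illustration; real sensor filters overlap, so the "about a third" figure is only a ballpark.

```python
from fractions import Fraction

# Idealized 2x2 color-filter tile (an assumption): each detector passes only its own band.
BAYER_TILE = [["R", "G"],
              ["G", "B"]]

def bayer_fraction(band: str) -> Fraction:
    """Fraction of a tile's detectors that can register a photon in `band`."""
    sites = [color for row in BAYER_TILE for color in row]
    return Fraction(sites.count(band), len(sites))

def relative_brightness(distance_au: float) -> float:
    """Sunlight intensity relative to Earth's distance (1 AU), by the inverse-square law."""
    return 1.0 / distance_au ** 2

# Assume incoming light is split evenly across red, green, and blue.
color_share = sum(bayer_fraction(b) for b in "RGB") / 3   # Fraction(1, 3)

for name, d in [("Earth", 1.0), ("Jupiter", 5.2), ("Saturn", 9.5)]:
    mono = relative_brightness(d)          # a monochrome sensor keeps every photon
    color = mono * float(color_share)      # a color sensor keeps only about a third
    print(f"{name}: mono {mono:.1%}, color {color:.1%} of Earth's sunlight")
```

At those distances the inverse-square law gives about 3.7 percent of Earth's sunlight at Jupiter and about 1.1 percent at Saturn, in line with the rounded "four percent" and "one hundred times fainter" figures. A color sensor then throws away roughly two-thirds of even that.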

The darkness of the solar system isn't the only reason to avoid using a color camera. To the astronomer, light is everything. It's essentially our only tool for understanding vast tracts of the Universe, so we must treat it carefully and glean from it every possible scrap of information. A red-green-blue color scheme like the one used in most cameras today is a blunt tool, splitting light into just those three categories. What astronomers want is a scalpel, capable of discerning just how red, green, or blue the light is. But we can't build a camera that has red, orange, yellow, green, blue, and violet pixels; that would do even worse in low light!

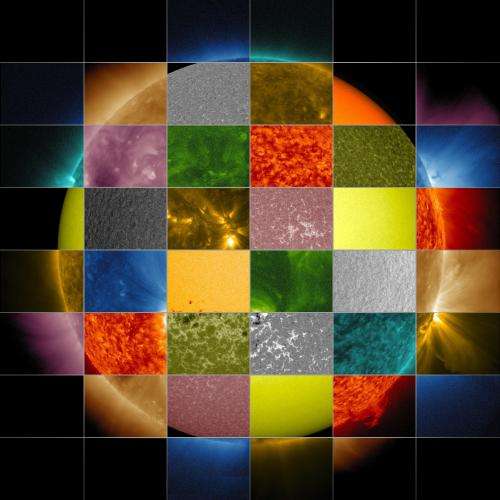

Instead, we use filters to test for light of very particular colors that are of scientific interest. Some colors are so important that astronomers have given them their own names; H-alpha, for example, is a brilliant hue of red that marks the location of hydrogen throughout the galaxy. By placing an H-alpha filter in front of the camera, we can see exactly where hydrogen is located in the image. Useful! With filters, we can really pack in the colors. The Hubble Space Telescope's Advanced Camera for Surveys, for example, carries 38 different filters for a vast array of tasks. But each image taken still looks grayscale, since each exposure records brightness in only one band of color.
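To see why a filtered exposure is still grayscale, here is a minimal sketch of an idealized bandpass filter. It assumes a filter that transmits everything within a fixed width of its center wavelength and nothing outside it. The 3 nm width and the photon wavelengths are hypothetical values chosen for illustration; only the H-alpha wavelength of about 656.3 nm is a physical constant. The detector behind the filter counts photons, so all that comes out is a brightness.

```python
H_ALPHA_NM = 656.3  # wavelength of the hydrogen-alpha line, in nanometres

def passes_filter(wavelength_nm: float, center_nm: float, width_nm: float) -> bool:
    """Idealized bandpass filter: transmits only light within width_nm/2 of center_nm."""
    return abs(wavelength_nm - center_nm) <= width_nm / 2

def filtered_exposure(photons_nm, center_nm: float, width_nm: float) -> int:
    """Count the photons that reach the detector behind the filter.

    The detector records only how many photons arrive, not their color,
    which is why every filtered exposure comes out as a grayscale value.
    """
    return sum(1 for w in photons_nm if passes_filter(w, center_nm, width_nm))

# Hypothetical photon wavelengths arriving from one patch of sky.
photons = [486.1, 500.7, 656.3, 656.0, 672.0]
print(filtered_exposure(photons, H_ALPHA_NM, width_nm=3.0))  # counts photons near 656.3 nm
```

Swapping in a different center wavelength is, in spirit, what turning a filter wheel to another filter does. Each choice yields one more grayscale image tuned to one more color.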

At this point, you're probably saying to yourself "but, but, I KNOW I have seen color images from Hubble before!" In fact, you've probably never seen a grayscale Hubble image, so what's up? It all comes from what's called post-processing. Just like a color camera can combine color information from three detectors to make the image look true-to-life, astronomers can take three (or more!) images through different filters and combine them later to make a color picture. There are two main approaches to doing this, known colloquially as "true color" and "false color."
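The post-processing step can be sketched in a few lines. This is a minimal illustration, not any mission's actual pipeline: it assumes three grayscale exposures of the same size that are already aligned, and it simply scales each one so its brightest pixel maps to 255 before stacking them into red, green, and blue channels. Real processing also calibrates and weights each filter. The names `normalize` and `combine_filters` are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]                     # one grayscale exposure: rows of brightness values
RGBImage = List[List[Tuple[int, int, int]]]   # rows of (red, green, blue) pixels

def normalize(img: Image) -> List[List[int]]:
    """Scale a grayscale exposure so its brightest pixel maps to 255."""
    peak = max(max(row) for row in img) or 1.0  # avoid dividing by zero for an all-black frame
    return [[round(255 * v / peak) for v in row] for row in img]

def combine_filters(red: Image, green: Image, blue: Image) -> RGBImage:
    """Stack three same-sized, aligned filtered exposures into one color image."""
    r, g, b = normalize(red), normalize(green), normalize(blue)
    return [list(zip(rr, gr, br)) for rr, gr, br in zip(r, g, b)]

# Two-pixel example: three grayscale exposures become one color strip.
print(combine_filters([[10.0, 0.0]], [[5.0, 5.0]], [[0.0, 2.0]]))
# [[(255, 255, 0), (0, 255, 255)]]
```

Scaling each channel to its own peak is the simplest possible choice. It makes the stacking step easy to follow, but it discards the relative brightness between filters, which a careful true-color rendering would need to preserve.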

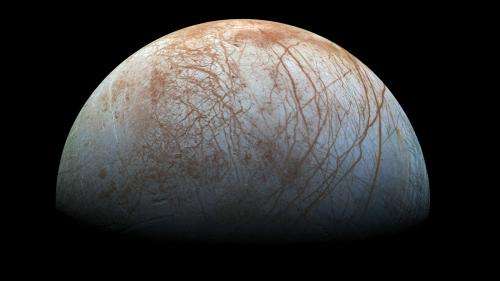

True color images strive to work just like your smartphone camera. The spacecraft captures images through filters that span the visible spectrum, so that, when combined, the result is similar to what you'd see with your own eyes. The recently released Galileo image of Europa is a gorgeous example of this.

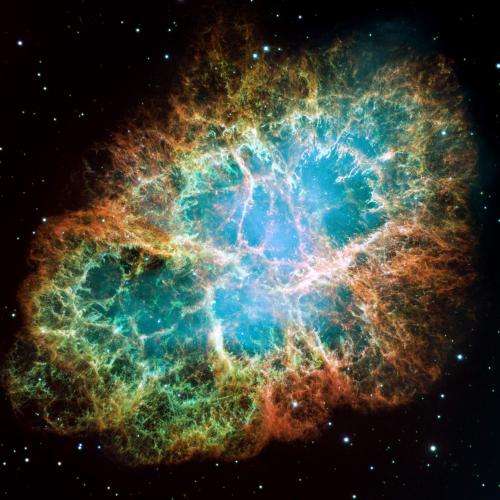

False color images aren't limited by what our human eyes can see. They assign different colors to different features within an image. Take this famous image of the Crab Nebula, for instance. The red in the image traces oxygen atoms that have had electrons stripped away. Blue traces normal oxygen and green indicates sulfur. The result is a gorgeous image, but not one that we could ever hope to see for ourselves.
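In false color, the only real difference is which filter gets routed to which display channel. Here is a minimal sketch that follows the Crab Nebula color key in the paragraph above. The filter labels and pixel values are hypothetical, and the exposures are assumed to be aligned and already scaled to the 0-255 range.

```python
from typing import Dict, List, Tuple

def false_color(exposures: Dict[str, List[List[int]]],
                assignment: Dict[str, str]) -> List[List[Tuple[int, int, int]]]:
    """Build a false-color image by routing each filter's exposure to a display channel.

    exposures:  filter label -> grayscale image (values already scaled to 0-255)
    assignment: display channel ("red", "green", "blue") -> filter label
    """
    r = exposures[assignment["red"]]
    g = exposures[assignment["green"]]
    b = exposures[assignment["blue"]]
    return [list(zip(rr, gr, br)) for rr, gr, br in zip(r, g, b)]

# Hypothetical filter labels, following the Crab Nebula key: red = ionized oxygen,
# green = sulfur, blue = neutral oxygen.
crab_key = {"red": "ionized_oxygen", "green": "sulfur", "blue": "neutral_oxygen"}
exposures = {
    "ionized_oxygen": [[200, 10]],
    "sulfur":         [[30, 180]],
    "neutral_oxygen": [[0, 90]],
}
print(false_color(exposures, crab_key))  # [[(200, 30, 0), (10, 180, 90)]]
```

Changing `crab_key` recolors the same data without touching the observations, which is exactly why a false-color image says nothing about what our eyes would see.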

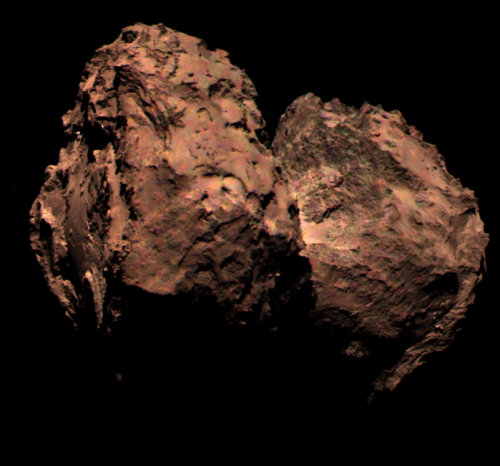

So, if we can make color images, why don't we always? Again, the laws of physics step in to spoil the fun. For one, things in space are constantly moving, usually really, really quickly. Perhaps you saw the first color image of comet 67P/Churyumov-Gerasimenko released recently. It's kind of blurry, isn't it? That's because both the Rosetta spacecraft and the comet moved in the time it took to capture the three separate images. When combined, they don't line up perfectly and the image blurs. Not great!
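A toy one-dimensional example shows why motion between exposures smears the colors apart. It is a minimal sketch that assumes a single bright point and a drift of exactly one pixel between each of the three filtered shots. The helper names are hypothetical.

```python
from typing import List, Tuple

def exposure(width: int, star_at: int) -> List[int]:
    """A 1-D grayscale strip containing one bright point source at index star_at."""
    return [255 if i == star_at else 0 for i in range(width)]

def combine(red: List[int], green: List[int], blue: List[int]) -> List[Tuple[int, int, int]]:
    """Stack three filtered strips, pixel by pixel, into (R, G, B) values."""
    return list(zip(red, green, blue))

# Target holds still: all three exposures line up and the point comes out white.
steady = combine(exposure(5, 2), exposure(5, 2), exposure(5, 2))

# Target drifts one pixel between exposures (assumed motion): the colors separate.
drifting = combine(exposure(5, 1), exposure(5, 2), exposure(5, 3))

print(steady)    # [(0, 0, 0), (0, 0, 0), (255, 255, 255), (0, 0, 0), (0, 0, 0)]
print(drifting)  # [(0, 0, 0), (255, 0, 0), (0, 255, 0), (0, 0, 255), (0, 0, 0)]
```

Instead of one white point, the drifting case produces a red, a green, and a blue point side by side: the colored fringing and blur you see when separate exposures don't quite line up.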

But it's the inverse-square law that is the ultimate challenge here. Radio waves, as a form of light, also rapidly become weaker with distance. When it takes 90 minutes to send back a single HiRISE image from the Mars Reconnaissance Orbiter, every shot counts, and spending three exposures on the same target doesn't always make sense.

Finally, images, even color ones, are only one piece of the space exploration puzzle. Other observations, from measuring the velocity of dust grains to the composition of gases, are no less important to understanding the mysteries of nature. So, next time you see an eye-opening image, don't mind that it's in shades of gray. Just imagine everything else that lack of color is letting us learn.

Source: Universe Today