Science graduates are not that hot at maths – but why?

Research suggests science graduates are struggling with essential quantitative skills, and science degree programs are to blame.

Quantitative skills are the bread and butter of science. They are about more than calculating the right answer: they involve applying mathematical and statistical reasoning to scientific and everyday problems.

They underpin national and international expectations for science graduates.

But at two Group of Eight universities, 40% of final-year science students reported low levels of confidence in quantitative skills. We know what students think, but what can they do?

Applying maths and stats reasoning

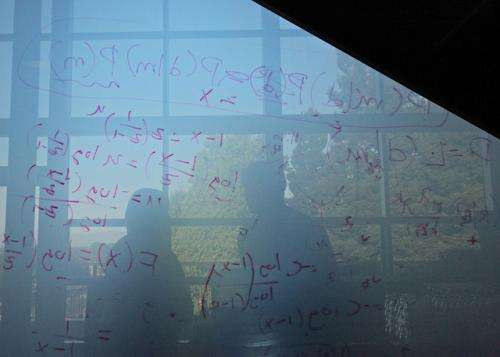

In the weeks before 210 biosciences students walked across the stage to receive their Bachelor of Science qualification, I asked them to answer 35 mathematical and statistical reasoning questions.

Below is a preview of results presented today at the Australian Conference on Science and Mathematics Education and previously at the First Year in Maths Forum.

The maths used in the biosciences is often little more than school-level maths. I used questions from online maths modules developed by university bioscientists, such as:

The diameter of ribosomes starts at about 11 nanometres. How many micrometres is this?

The intent was to assess application of mathematical reasoning, not a student's memory of metric units. So some additional information was provided:

The metric vocabulary includes TERA (trillions), GIGA (billions), MEGA (millions), KILO (thousands), MILLI (thousandths), MICRO (millionths), NANO (billionths) and PICO (trillionths). The easiest way to convert metric measurements is to move the decimal place. Every time you move to the right on this list, the unit gets smaller (by 3 decimal places), so you need more of them to compensate (move the decimal place 3 to the right).
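The hint's decimal-shifting rule can be written as a short Python sketch. This is an illustration only, not part of the original study: the `convert` helper and `PREFIX_EXPONENT` table are hypothetical names, and `Decimal` is used so the shifted result is exact rather than a floating-point approximation.

```python
from decimal import Decimal

# Powers of ten for the prefixes listed in the hint, largest to smallest.
# Each step to the right is a factor of 1,000 (three decimal places).
PREFIX_EXPONENT = {
    "tera": 12, "giga": 9, "mega": 6, "kilo": 3,
    "": 0,  # the base unit itself, e.g. metres
    "milli": -3, "micro": -6, "nano": -9, "pico": -12,
}

def convert(value: str, from_prefix: str, to_prefix: str) -> Decimal:
    """Convert between metric prefixes by shifting the decimal point.

    Moving to a smaller unit (rightwards in the list) shifts the decimal
    point right; moving to a larger unit shifts it left.
    """
    shift = PREFIX_EXPONENT[from_prefix] - PREFIX_EXPONENT[to_prefix]
    return Decimal(value).scaleb(shift)  # multiply by 10**shift exactly

print(convert("11", "nano", "micro"))  # prints 0.011
```

Going from nano to micro is one step to the left, so the unit gets larger and the decimal point moves three places left.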

This almost gave them the answer, but only 69% of the 210 students selected the correct one (try for yourself).

Students were then presented with another metric conversion question that showed five numbers (2×10³ cm; 0.3 cm; 4 mm; 7×10⁻⁴ km; 8 m) and asked to place them in ascending order.

Only 50% selected the correct answer.
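The ordering task comes down to expressing every measurement in one common unit before comparing. The Python sketch below does that in metres. It assumes the five values are 2×10³ cm, 0.3 cm, 4 mm, 7×10⁻⁴ km and 8 m (the exponents were typeset as superscripts in the original question), and the names `METRES_PER_UNIT` and `in_metres` are hypothetical.

```python
from decimal import Decimal

# Length of one unit in metres, kept as exact decimals.
METRES_PER_UNIT = {
    "mm": Decimal("0.001"),
    "cm": Decimal("0.01"),
    "m": Decimal("1"),
    "km": Decimal("1000"),
}

# The five measurements as (label, value, unit).
# Assumption: the exponents are 10**3 and 10**-4 as shown in the labels.
measurements = [
    ("2×10³ cm", "2e3", "cm"),
    ("0.3 cm", "0.3", "cm"),
    ("4 mm", "4", "mm"),
    ("7×10⁻⁴ km", "7e-4", "km"),
    ("8 m", "8", "m"),
]

def in_metres(item):
    """Convert one measurement to metres so all sizes compare directly."""
    _, value, unit = item
    return Decimal(value) * METRES_PER_UNIT[unit]

ascending = sorted(measurements, key=in_metres)
print(" < ".join(label for label, _, _ in ascending))
# prints 0.3 cm < 4 mm < 7×10⁻⁴ km < 8 m < 2×10³ cm
```

In metres the values are 0.003, 0.004, 0.7, 8 and 20, which is why the largest-looking unit (km) does not give the largest measurement.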

Across the 10 basic mathematical application questions, many of them underpinned by proportional reasoning, the average score was 74%.

Interpreting data and drawing sensible conclusions are central to science. I used statistical reasoning tasks developed by university statisticians, including this question on hypothesis testing:

An electrician uses an instrument to test whether or not an electrical circuit is defective. The instrument sometimes fails to detect that a circuit is good and working. The null hypothesis is that the circuit is good (not defective). The alternative hypothesis is that the circuit is not good (defective). If the electrician rejects the null hypothesis, which of the following statements is true?

The students were then given these options:

- The electrician decides that the circuit is defective, but it could be good

- The circuit is definitely not good and needs to be repaired

- The circuit is definitely good and does not need to be repaired

- The circuit is most likely good, but it could be defective

Only 45% of students selected the correct answer, with most demonstrating a fundamental misconception of statistical hypothesis testing.
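Rejecting the null hypothesis is a decision made under uncertainty, not proof. A small Python simulation makes this concrete under an invented set-up: assume that for a good circuit the instrument's reading behaves like a standard normal variable, and that the electrician rejects "the circuit is good" whenever the reading exceeds 1.645 (a one-sided 5% significance level). The threshold, the normal model and the function names are all assumptions for illustration.

```python
import random

# Hypothetical model (not from the question): for a GOOD circuit the
# instrument reading is a standard normal variable. The electrician rejects
# H0 ("the circuit is good") when the reading exceeds a 5% critical value.
CRITICAL_VALUE = 1.645  # one-sided 5% cut-off for a standard normal

def electrician_rejects(reading: float) -> bool:
    """Return True when the electrician declares the circuit defective."""
    return reading > CRITICAL_VALUE

def false_alarm_rate(trials: int = 100_000, seed: int = 1) -> float:
    """Fraction of good circuits that are nonetheless declared defective."""
    rng = random.Random(seed)
    rejections = sum(
        electrician_rejects(rng.gauss(0.0, 1.0)) for _ in range(trials)
    )
    return rejections / trials

print(f"Good circuits declared defective: {false_alarm_rate():.1%}")
```

Roughly one good circuit in twenty is still rejected, so after rejecting the null hypothesis the electrician has decided the circuit is defective, but it could be good.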

In another question, probing understanding of correlation and causation, students were asked:

Researchers surveyed 1,000 randomly selected adults in the United States. A statistically significant, strong positive correlation was found between income level and the number of containers of recycling they typically collect in a week. Please select the best interpretation of this result.

Again, the students were asked to choose from these options:

We cannot conclude whether earning more money causes more recycling among US adults because this type of design does not allow us to infer causation

- This sample is too small to draw any conclusions about the relationship between income level and amount of recycling for adults in the US

- This result indicates that earning more money influences people to recycle more than people who earn less money

One-third of students answered incorrectly.
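Why a strong, significant correlation does not establish causation can be shown with a toy Python model. In this hypothetical set-up (not based on the survey's data), an unnamed third variable drives both income and recycling, and recycling is generated without any reference to income, yet the two still come out strongly correlated. The model, noise levels and function names are assumptions chosen only for illustration.

```python
import math
import random

def simulate(n: int = 1000, seed: int = 2):
    """Toy observational data: a hidden factor drives BOTH variables.

    Income has no causal effect on recycling here; recycling is computed
    from the hidden factor and noise only.
    """
    rng = random.Random(seed)
    income, recycling = [], []
    for _ in range(n):
        hidden = rng.gauss(0.0, 1.0)
        income.append(hidden + rng.gauss(0.0, 0.3))
        recycling.append(hidden + rng.gauss(0.0, 0.3))  # ignores income
    return income, recycling

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

income, recycling = simulate()
r = pearson(income, recycling)
print(f"Pearson r = {r:.2f}")
```

A survey only records the two observed columns, so the same strong correlation could arise whether or not income causes recycling; that is why the design alone cannot support a causal conclusion.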

Across the 25 statistical reasoning questions, which explored topics such as graphical representation of data, tests of significance and probability, the average score was 56%.

So who's to blame?

We could easily blame the school sector, or even the students, for these alarming results. But ultimately, if universities award students degree qualifications, they have to take responsibility for the quality of those graduates.

As I have argued before, science students can tell us a lot about the quality of science degree programs.

I interviewed several students about the quantitative skills questions discussed above. A maths student (she answered 23 of the 35 questions correctly) said:

I have never been asked questions like this in my degree program.

A biosciences student (he answered 27 of the 35 questions correctly) added:

I would have done better if I took this in first year.

He then explained how rarely science subjects required quantitative skills. Empirical research indicates he is not alone, with 400 science students reporting that quantitative skills were not widely assessed across the curriculum.

The student interviews echo the findings of a recent study of bioscience degree programs, which asked 46 academics from 13 universities to identify where students learn quantitative skills.

The results showed that quantitative skills were rarely taught and that academics seldom talked collectively about teaching quantitative skills across the degree program.

If we expect students to graduate with quantitative skills, or any complex learning outcome for that matter, the real problems to address in science higher education are curriculum leadership across the whole degree program and the development of teaching.

Most science academics have no training in teaching or learning, and they do not have a picture of the curriculum beyond the modules they teach. Science departments typically do not have curriculum leaders who bring academics together to plan for student learning across subjects or year levels.

We need to start discussing this nationally so that, when science students walk across the stage to receive their qualifications, we can trust the quality of those qualifications.

Source: The Conversation

This story is published courtesy of The Conversation (under Creative Commons-Attribution/No derivatives).
