August 5, 2013 weblog

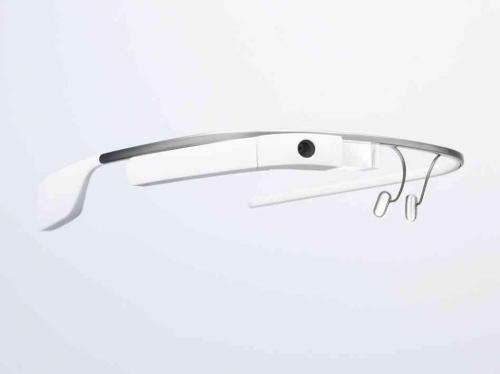

OpenGlass apps show support for visually impaired (w/ Video)

A two-person team behind a company called Dapper Vision is pioneering the OpenGlass Project, which could change perceptions of Google Glass. Rather than being seen as a marketing and infotainment luxury item, Google's hands-free wearable could become known as an information vehicle for the visually impaired. The two doctoral students behind Dapper Vision have been releasing a series of videos showing OpenGlass apps at work. The latest, posted last month, explores two ways Google Glass might serve as a support system that identifies objects for the visually impaired.

The video first shows a demo of a Question-Answer app, which turns to Amazon's Mechanical Turk and Twitter for help in identifying objects. The user takes a picture with a question attached, and the picture is sent to one of those services for answers. In this scenario, the visually impaired user asks Google Glass what an object is ("What is this a can of?") and gets a reply.

The other app, called Memento, carries images and annotations that a sighted user creates first so that the visually impaired user can hear the information later. The sighted person records and describes the scene. Once that is done, the visually impaired person, wearing Glass, can learn what items are in the room: Glass recognizes the scene and reads back the sighted person's earlier commentary. Memento can warn users about dangerous equipment, for example. These are test demos in preparation for further user testing down the road.

In introducing themselves as the two students behind the OpenGlass project, Dapper Vision's Brandyn White and Andrew Miller said they have extensive experience working with MapReduce/Hadoop and computer vision. (Hadoop MapReduce is a software framework for writing applications that process large amounts of data in parallel on large clusters of commodity hardware.) The two developed Hadoopy (a Python wrapper for Hadoop Streaming written in Cython) along with Picarus, a library of web-scale computer vision algorithms and a REST API for computer vision, annotation, crawling, and machine learning. (REST stands for Representational State Transfer, an architectural style using HTTP.)
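For readers unfamiliar with the MapReduce pattern mentioned above, the following is a minimal sketch in plain Python that runs on a single machine. It is not Hadoopy's or Picarus's API; the function names (`mapper`, `reducer`, `run_local`) and the sample annotation records are hypothetical and chosen only for illustration. The sketch counts how often each label appears across a set of image annotations, which is the kind of per-record work a cluster would spread over many machines.

```python
from collections import defaultdict


def mapper(key, value):
    """Map step: emit (label, 1) for every label in one annotation string."""
    for label in value.split():
        yield label.lower(), 1


def reducer(key, values):
    """Reduce step: sum all the counts emitted for one label."""
    yield key, sum(values)


def run_local(records):
    """Run map, group-by-key (shuffle), and reduce in a single process.

    A real Hadoop job would distribute these phases across a cluster;
    this helper is a hypothetical stand-in for illustration only.
    """
    groups = defaultdict(list)
    for key, value in records:
        for out_key, out_value in mapper(key, value):
            groups[out_key].append(out_value)

    results = {}
    for key in sorted(groups):
        for out_key, out_value in reducer(key, groups[key]):
            results[out_key] = out_value
    return results


if __name__ == "__main__":
    # Hypothetical annotation records: (image id, space-separated labels).
    sample = [("img1", "can table"), ("img2", "Can chair")]
    print(run_local(sample))
```

The map and reduce functions never share state, which is what allows a framework such as Hadoop to run many copies of them in parallel on different slices of the data.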

More information:

www.openglass.us/

www.cultofandroid.com/37917/op … e-visually-impaired/

© 2013 Phys.org