Google launches next phase of voice-recognition project

Google on Tuesday switched on a new program that will dramatically improve the accuracy of its speech recognition service, which allows people to use verbal commands to search the Internet, send an e-mail or post a Facebook update.

That's of growing importance to the Mountain View, Calif., search giant, which sees Internet searches on smart phones as a significant part of its business. While the company doesn't disclose specific numbers, one in four searches on Android devices is now done by voice, and the search volume on Android phones climbed by 50 percent in the first six months of 2010.

"A lot of the world's information is spoken, and if Google's mission is to organize the world's information, it needs to include the world's spoken information," said Mike Cohen, who heads the company's speech efforts.

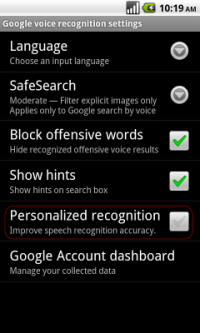

Users of the latest Android-powered smart phones can now allow Google to recognize the unique pattern of their speech by downloading a new app from the Android Market. The service gradually learns the patterns of a person's speech and eventually will more accurately understand their voice commands.

Google's ambitions don't stop at improving voice recognition. Its recent purchase of Phonetic Arts, a British company that specializes in speech output, highlights Google's plans to allow your computer or smart phone to speak back to you, in a "voice" that will sound increasingly natural, and even human.

Google earns the vast majority of its revenue through search advertising, and expects a majority of its Internet business to flow through smart phones and other wireless devices in the future, so high-quality voice services are of critical importance.

The linguistic models that Cohen's team has helped develop over the past six years at Google, based on more than 230 billion searches typed into google.com and speech inflections recorded from millions of people who used voice search, are now so vast and complex that it would literally take several centuries for a single PC to create Google's digital model of spoken English.

For Google, said Al Hilwa, an analyst with the research firm IDC, "voice is a critical strategic competence."

Google's Dec. 3 announcement of the Phonetic Arts acquisition - terms were not disclosed - is "complementary to what Google is doing in social networking, video and mobile where it should be possible for people on the go to talk to their mobile devices, search engines or social networks as an alternative mechanism of interaction," Hilwa said.

Speech also is another key area where Google competes with Microsoft, which purchased Mountain View-based Tellme Networks, Inc., in 2007 to bulk up its speech services, and which also offers voice search through its Bing search engine.

Before joining Google, Cohen co-founded Nuance Communications, a Menlo Park, Calif., speech technology company, in 1994. Cohen, a part-time jazz guitarist whose first memory was the sound of the toy piano he received for Hanukkah in the Brooklyn apartment where he grew up, has been a research scientist in the field of computer speech technology for more than a quarter century.

A bespectacled man with red hair who once worked as a piano tuner and whose sextet once played the Montreux Jazz Festival in Switzerland, Cohen likes to laugh at the irony of Google placing a native Brooklynite - given the New York borough's famously stretched and tangled dialect - in charge of speech recognition.

"I'm from Brooklyn," Cohen said. "I've never parked my car; I only 'paahhk' my car."

A person's accent, Cohen said, is one of the most difficult challenges for speech recognition services, and is one problem that the new personal voice recognition service should help overcome.

Still, much more than accent is involved in human speech. A wide range of variables - including the shape of a person's mouth, teeth and throat, and the cadence and pitch of their sentences - are elements the human ear has evolved to differentiate, but which computers have not.

"It's all different from one person to another, and that all affects the sounds that come out," Cohen said. "There is tremendous variation between individuals. It's been a known thing that you can do better (at speech recognition) if you can do something to try to adapt to an individual's speech patterns."

More information: googlemobile.blogspot.com/2010 … h-gets-personal.html

(c) 2010, San Jose Mercury News (San Jose, Calif.).

Distributed by McClatchy-Tribune Information Services.