Scientist Omar Hurricane discusses LLNL fusion program

LLNL Distinguished Scientist Omar Hurricane, chief scientist for the Laboratory's Inertial Confinement Fusion (ICF) program, is at the forefront of the drive to achieve nuclear fusion with energy gain for the first time in a laboratory. In a wide-ranging interview with NIF & Photon Science News, Hurricane outlines the NIF strategy for moving toward ignition and describes the challenges standing in the way of meeting that historic goal.

Q: To begin with the basics: Why is it important to achieve ignition, and what will be accomplished as a result of having achieved it?

It is a "grand challenge," scientifically. People have been trying to do this in experimental facilities since the 1950s. I think it's important because once we do it, it shows you exactly what conditions are required to achieve it. What also comes with it are very high neutron flux levels, which open up a variety of applications for stockpile stewardship, high energy density physics and nuclear physics. It would be a heck of a neutron source. We're hovering right below ten-quadrillion DT (deuterium-tritium) neutrons, and ignition proper will be a couple of orders of magnitude more neutrons. And then we have to see where we go from there with respect to applications like inertial fusion energy.
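As a rough illustration of the neutron numbers quoted above, the sketch below converts a DT neutron count into released fusion energy. It assumes one neutron per DT reaction and the standard figure of 17.6 MeV released per reaction; the function name and the factor of 100 used for "a couple of orders of magnitude" are illustrative choices, not figures from the interview.

```python
# Rough energy equivalent of a DT neutron yield.
# Assumptions (not from the interview): one neutron per DT fusion reaction,
# and 17.6 MeV of total energy released per reaction.

MEV_TO_JOULES = 1.602176634e-13  # joules per MeV
DT_ENERGY_MEV = 17.6             # energy per DT reaction (neutron plus alpha)


def fusion_energy_joules(neutron_count: float) -> float:
    """Return the total fusion energy in joules for a given DT neutron count."""
    return neutron_count * DT_ENERGY_MEV * MEV_TO_JOULES


current_yield = 1e16                  # "ten-quadrillion" neutrons
ignition_yield = current_yield * 100  # illustrative "couple of orders of magnitude"

print(f"{fusion_energy_joules(current_yield) / 1e3:.1f} kJ")
print(f"{fusion_energy_joules(ignition_yield) / 1e6:.2f} MJ")
```

Under these assumptions, ten quadrillion neutrons corresponds to roughly 28 kilojoules of fusion energy, and a hundredfold increase to roughly 2.8 megajoules.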

Q: How would you describe NIF's current strategy for achieving ignition?

It is research—it's not just a matter of hitting the target harder with the laser and it's going to work. There are uncertainties in the physics, although we do know the basic parameter that we're after at the center of our implosion. We know that we have to have sufficiently high pressure for a sufficiently long time, and we call that condition Lawson's criterion—pressure times time. We know we have to have that at least at a certain level.
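Written as a formula, the condition described above might look like the following. The symbols are notation introduced here for illustration: P is the pressure at the center of the implosion, τ is the time that pressure is held, and the subscript "ign" marks the minimum level needed. The interview gives no numerical value for that threshold.

```latex
P \, \tau \;\geq\; (P \, \tau)_{\mathrm{ign}}
```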

But to get to that level at the center of the implosion, there are all these elements that go into it. We have some idea of how they work, but not a perfect picture of how they work. How well we do depends on the details of each one of those elements. As we try to push the implosions to achieve that level of pressure and time, we run into obstacles. Some of them can be anticipated and some of them cannot be. That's where it becomes research and development: you run into an obstacle during the research, you learn about the parameter space around that obstacle, and then you develop some way around it so you can go further.

I like to use the metaphor of climbing Mount Everest. You know you want to get up to the top of the mountain—in this case the mountain is pressure times time—but the exact path of how you're going to get there is not clear. It depends on the conditions on the ground as you're trying to get yourself and your group up the mountain, and the path you take might actually change, depending on the conditions. Because we haven't been up this mountain before, it's not like you just pull out a map and you head in a certain direction. There is a lot of switching back, a lot of changing course.

The strategy that we're following now is what we call the basecamp strategy. Because we're not certain of the path, we have basecamps more or less littered around the base of the mountain. They're each in a different type of terrain, and the groups who are manning those basecamps are each trying to find their best way up the mountain. The data tell you what the terrain is a certain distance around your basecamp, and you can choose which direction to go based on what you see in the data.

But there's a distance beyond that that's somewhat dark, it has a fog around it, and that's where we start to rely on the models. The models let us extrapolate a little bit beyond where the data are to help us take a little bit of a larger step and help strategize how to get further up the mountain. That part takes some judgment, because if you take a model based on data about the locale and extrapolate it too far, you can get nonsense.

There's another metaphor that goes with that. Everyone is familiar with weather predictions. Part of the weatherman's prediction is based on data, such as satellite imagery of nearby weather systems, and they can project where those weather systems are going to go. But to make a prediction a little further out they use a computer model. They take that data, run it through some physics calculations, and they can maybe predict the weather a week out, or two weeks out.

But it's just common sense that you don't go to the weatherman and ask him what the weather's going to be like six months from now. You'd be forced to take a model that's based on today's data and extrapolate too far into the future to be of any value.

We have the same problem with the design physics for our ICF implosions. We have models based on data that allow us to make steps forward that are maybe a little bit beyond where the data are; but if you take them too far, you potentially throw yourself off a cliff. It takes experience and some judgment to gauge how far you can believe the models and make a step based on that extrapolation.

So we're here in our basecamp, we have the data, we move a little bit to the edge of what we can see, we get more data, we see what our models say about that, and we try to establish a new basecamp. And by doing that successively over and over again in steps, we are working our way up the mountain. We have several groups looking at different approaches up the mountain, so that as a team we have a better chance of success getting to the very top.

The metaphor is not just true for us. A lot of research science follows that same sort of metaphor. Whenever you're trying to achieve something that no one's ever done before, that is at the boundary of the science of what people know and what they don't know, it has to work like that.

Q: One of the issues that came up during the National Ignition Campaign (NIC) was the discrepancy between the models and the experimental results. Has there been some progress in bringing those more in agreement?

Yes, there has been. Actually that was an example of taking a model and trying to extrapolate too far. The models that were used in the National Ignition Campaign are essentially the same, at their guts, as the models we're using now—but in that case there was an extrapolation using the model that went too far, like a weatherman's model trying to predict the weather six months from now rather than just next week.

The other part of it is what the National Ignition Campaign (NIC) was targeting. The NIC implosion was the most difficult implosion you could do. It was a very stressing implosion. It pushed the boundaries of our predictive capability. It pushed the boundaries of the physics and our engineering capabilities extremely far. What we're doing now is improving the models and at the same time we're testing them against implosions that are "easier." We essentially lowered the bar, and we have been able to develop an implosion that is less stressing and therefore much easier for the models to find some sort of overlap.

At low velocities and what we call low convergence ratios, our models match the data pretty well. The convergence ratio is the initial radius of the implosion divided by the final radius; it's basically a measure of how far the implosion has collapsed on itself. If you don't have to move an implosion quite as far it's easier to predict, but if you have to move it a long distance, which is what a high convergence ratio implosion does, it's harder to predict.
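The definition above can be sketched in a few lines of code. The radii below are hypothetical values chosen only to show the arithmetic, and the cubic relation is the purely geometric scaling for a uniform sphere, not a model of a real implosion.

```python
def convergence_ratio(initial_radius_um: float, final_radius_um: float) -> float:
    """Initial radius divided by final radius, as defined in the text."""
    if initial_radius_um <= 0 or final_radius_um <= 0:
        raise ValueError("radii must be positive")
    return initial_radius_um / final_radius_um


def volume_compression(cr: float) -> float:
    """A sphere's volume scales as radius cubed, so compression goes as CR**3."""
    return cr ** 3


# Hypothetical radii in micrometers, for illustration only.
cases = [("lower convergence", 1000.0, 50.0),
         ("higher convergence", 1000.0, 25.0)]

for label, r0, r1 in cases:
    cr = convergence_ratio(r0, r1)
    print(f"{label}: CR = {cr:.0f}, volume compression = {volume_compression(cr):.0f}x")
```

Doubling the convergence ratio in this toy example raises the geometric volume compression eightfold, which gives some feel for why small irregularities matter more as the implosion has farther to travel.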

This is because implosions by their nature are amplifiers. In their ideal form, they amplify pressure, and that gets back to the goal that we're after. We're trying to get more than 300 billion atmospheres of pressure in the center of the implosion. An ideal implosion squeezes down from a large radius to a small radius in response to how we drive it with the laser, how we designed the target and so forth. But in addition to being a pressure amplifier, any irregularity in the implosion that is undesirable also becomes amplified. So it's a pressure amplifier in the best sense, but it can be a problem amplifier in the worst sense.

That's what we're struggling with. We want to get the pressures up, we want to get the convergence ratios up, but at the same time anything that's a problem gets amplified and gets worse. So we have to tackle these problems one at a time to try to make progress.

Our first attack on that was trying to address fluid instability—what's known as the Rayleigh-Taylor instability. It's basically a buoyancy-driven instability that tends to tear apart the shell of the implosion as it progresses inward. You have high pressure outside, developed by the laser interacting with the hohlraum plasma, on the order of 100 million atmospheres. That pressure is trying to squeeze the capsule in uniformly, but imperfections or modulations in the surface tend to get amplified. So any weak spot gets pushed on by that pressure and it tends to move faster and break through, whereas the thick spots tend not to be pushed as hard, and the irregularities are amplified at higher and higher convergence ratios.

We addressed that by changing the pulse shape from what the NIC was using to the high-foot pulse shape—increasing the energy in the initial laser pulse. This is basically a tactic to reduce convergence ratio, but also to smooth out the sensitivity to ablation front instability, so the implosion starts to look a little more one-dimensional and behaves more like the ideal implosion that we're after.

Now that doesn't solve everything, because there's more than just the instabilities going on. Also, to address the instabilities in that first round, we traded away something. To get high pressures we need high compression, and we gave away some of that compression to get the stability benefit.

But it does give us an implosion that now becomes a diagnostic for all the other things that are going wrong, and that type of implosion is a basecamp of sorts. Now we can see that we've fixed this one issue, we've gotten a little further up the mountain, and we can see what other problems we have to address, what paths we need to take. It has allowed us to see some of the things that were suspected previously, but were mixed in with the instability part and weren't obvious.

One of the things that we're seeing now that has to be addressed is the in-flight control of the shape of the implosion. Instead of just being a round shell that's going to smaller and smaller radius, it's irregular in shape as a function of time. It's wiggling around wasting kinetic energy, and again because convergence is an amplifier, the wiggling gets worse as you get to a smaller and smaller radius. So it's harder to get the pressures you need. It's like taking a water balloon and squeezing it—it's going to pop out between your fingers.

Q: Getting back to the pulse shape, we're also doing what's called adiabat-shaping experiments. Is that like a compromise between the high-foot and the low-foot?

It's actually trying to take the best characteristics from both. You might say it's a strategy to have your cake and eat it too, as one of my colleagues likes to say. We demonstrated with the high-foot pulse shape a certain degree of stability control that we now believe is good enough to go to very high velocity in these implosions and not be hit by catastrophic instability, which we sometimes call "mix." The ultimate consequence of instability when it gets really bad is mix. That means your ablator has been torn to pieces and it's now in the fusion fuel that you're trying to get hot, and it prevents it from getting hot.

The adiabat-shaping team is taking the element of the first part of the pulse that is responsible for the stabilization, but then throwing away the part that makes it stiff against compression. They're trying to get the stability benefits of the high-foot and the compression of the low-foot. And they have been successful to some degree, but adiabat-shaping still only addresses instability.

Q: You've also tried three or four different capsule compositions. What are the pros and cons of each?

That in fact is the way you look at the capsules: they have pros and cons. The program is actually looking at three right now. As with the NIC, the high-foot team and the adiabat-shaping team use the plastic capsule. Those have the advantage of being easier to fabricate. They are amorphous, it's plastic, so it's easier to add the dopants, which are the high-Z (high atomic number) materials that are put in different layers to help screen unwanted high-energy X-rays from the inside of the capsule.

But the plastic capsule has a lower ablation pressure-generation capability. The ablation pressure is the pressure that the ablator makes in response to the X-ray flux that hits it. Plastic's a pretty good ablator, but it's not the best if you just look at ablation pressure. The two other materials that actually have a far superior ablation pressure are diamond—or high-density carbon, which is why we sometimes call it HDC—and beryllium.

Los Alamos National Laboratory is taking the lead on beryllium. Beryllium has a good ablation pressure, and also because it's low on the periodic table, it tends to be more transparent to X-rays. The X-rays have an easier time penetrating into a larger thickness of beryllium, causing it to turn into a plasma, and you get an extra boost from that when it explodes in response to the X-rays.

Those other ablators have downsides, so again there's a tradeoff. Both the diamond and beryllium ablators are crystalline in the room-temperature state. The crystalline structure can be a problem for seeding instability growth, and to bypass that problem the crystalline ablators require a strong initial shock to ensure that they are melted very early in the implosion. But if it's too strong, the shock makes the implosions "stiff" and it's harder to get the required compression.

For the beryllium, because it's such an aggressive ablator, it tends to take all that plasma off the ablator and fill the hohlraum up very quickly. That's a problem because you're trying to get the laser energy into the hohlraum, and the ablator material tends to get in the way. So this is the tension: what's good for the capsule may not be good for the hohlraum. It also impacts the shape control of the implosion, because the laser beams are meant to go to certain places in the hohlraum to produce a symmetrical implosion. But if there's plasma from the ablator in the way, the beams have trouble getting there.

The diamond is neat because it's such an aggressive ablator with high ablation pressure, so the implosion tends to go very quickly. It's roughly two and a half times the density of plastic, and as a result of that they can make the shell of the ablator thinner; so it's about the same mass but it's a good deal thinner. That gave us some worries early on, because as you make it thinner, the instabilities might have an easier time tearing through. Actually that team's been very successful at avoiding any indication of mixing. Their pulse shape is similar to the high-foot—they learned from that and built in resistance to instability. And they've gotten very fast implosions, 400 kilometers per second, I believe, and maybe a little more with that ablator.
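The link between higher density and a thinner shell at the same mass can be sketched with a thin-shell estimate. This assumes the shell mass is approximately 4πR² times thickness times density and that both shells have the same radius; the 200-micrometer plastic thickness is a hypothetical number used only for the arithmetic, while the density ratio of 2.5 comes from the answer above.

```python
def equal_mass_thickness(reference_thickness_um: float, density_ratio: float) -> float:
    """Thin-shell estimate of the thickness that keeps shell mass unchanged.

    With mass ~ 4*pi*R**2 * thickness * density at fixed radius R,
    thickness scales as 1 / density.
    """
    if reference_thickness_um <= 0 or density_ratio <= 0:
        raise ValueError("thickness and density ratio must be positive")
    return reference_thickness_um / density_ratio


plastic_thickness_um = 200.0      # hypothetical value, for illustration only
diamond_to_plastic_density = 2.5  # "roughly two and a half times" from the text

diamond_thickness_um = equal_mass_thickness(plastic_thickness_um,
                                            diamond_to_plastic_density)
print(f"Equal-mass diamond shell: {diamond_thickness_um:.0f} um "
      f"({diamond_thickness_um / plastic_thickness_um:.0%} of the plastic thickness)")
```

In this approximation the diamond shell ends up at about 40 percent of the plastic thickness, consistent with the description of it as "a good deal thinner."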

Q: What is the goal for the implosion velocity?

It's not a hard and fast goal, in terms of a specific number, but we generally want velocities in the high 300 kilometers per second to 400 kilometers per second. The high velocity is necessary, but not sufficient by itself. We would take higher, actually, if we could go higher, but there's a limit because of the energy available. The high-foot has gotten about 380 kilometers per second. Diamond has gotten faster. So we're getting to the right ballpark velocity-wise.

But again, like the high-foot, the diamond team is using this pulse shape that makes the compression more difficult in order to keep the instabilities at bay. Even though the velocities are high, eventually they'll have to back away from a stiff implosion to allow it to compress more. And that's the kind of delicate balance between giving up some of your instability control to get the compression—finding the optimum level.

Q: Are there other challenges that need to be addressed?

We do have other issues, and the question is: Which one do we tackle next? Part of the judgment of which problem to tackle next is based on, well, do we know enough about the physics that underlies the problem to address it, or is it going to be another exploration?

Unfortunately, when it comes to the hohlraum, which controls the shape of the implosion, that is more of a research effort. We have to do some exploring to figure out the best way to address that. Some basecamp teams, like the diamond-capsule team, are actually using completely different hohlraums, called the vacuum- and near-vacuum hohlraums, as opposed to the high-foot team, which uses a high fill-gas hohlraum. And they can do that because their diamond ablator allows them to use that hohlraum in a way that we would have more difficulty with using the plastic ablator.

The advantage that the diamond is giving is it allows the pulse shape to be very short. That allows them to use these vacuum- or near-vacuum hohlraums, which gives them better control of the shape of the implosion—certainly theoretically, and I believe the data are starting to show that as well. (For a further discussion of this issue, see "Rugby Hohlraums Join the Ignition Scrum.")

There also is less anomalous energy loss in those near-vacuum hohlraums. Right now with the higher gas-filled hohlraums that are being used for the plastic implosions and beryllium implosions, some of the laser energy can't be accounted for when it enters the hohlraums. It's probably caught up in the laser-plasma instability process or a coronal plasma that is bottling up energy in a place that the models don't capture. So it's not being seen by the capsule, which is really where you want the energy to go.

What we're doing with the hohlraums and the shape control is a research effort. The plastic ablator team also is moving into new hohlraums—we're making that transition now.

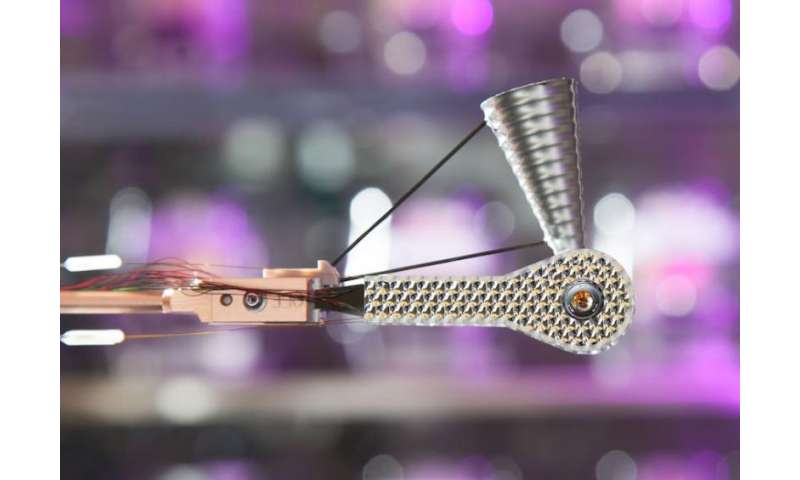

Other issues are more mundane, which have to do with the fact that the target is an engineered object. The capsule has to be held in the hohlraum. It's held there with two thin diaphragms called the tent—a drumhead that fits between the hohlraum walls and captures the capsule. We're seeing—and it was suspected that this was affecting the NIC implosion too, but now it's clear it's affecting everything—this tent is tearing the implosion where it's in contact with the capsule. So that contact point rips up the capsule.

Our efforts to control ablation-front Rayleigh-Taylor instability help to mitigate this problem somewhat, and at first we thought we completely eliminated the problem. As we've done more work we see that the problem has been reduced, but it's still present.

Then there's an engineering feature that's needed to put the fuel into the capsule called the fill-tube. We're accumulating some evidence that also has an impact. Again it was suspected before, but now it's something that clearly we have to address to go further.

The tent and the fill-tube are not exactly physics problems. It's really a research challenge for the engineering team: Can they figure out another way of holding the capsule that satisfies all the requirements of keeping it where it needs to be, being compatible with the cryogenic system, not vibrating, and not breaking when you put the target in the chamber, but not causing the damage to the implosion? So they are researching this engineering aspect of how to hold the capsule in there in different ways. They propose fixes and the physics team has to say, "Well, that's great, but it's going to cause some other problem," or, "That looks like it will be fine, maybe we should try it."

Q: Like making the tents thinner?

Yes, making the tents thinner is less impactful with respect to the instability, but we believe there's a nuance there. As we make the tent thinner, the angle at which it contacts the capsule changes. And there's some debate by some of the people who tend to focus on those issues as to whether the angle change counteracts the thinness of the tent.

Ideally, if the tent were gossamer-thin—and it's pretty thin, the thinnest tents they've made are 15 nanometers, although as a matter of practice we tend to shoot more of the 45-nanometer tents—we believe as it gets thinner it should be less impactful, but this angle issue becomes a problem.

There's also a practical impact to making the tents thinner—the targets become more fragile. Last year when we first started getting really high-yield high-foot implosions, we had a set of 15-nanometer-tent targets. We lost all but two of them because the tents broke. That's the challenge when you're trying to do research at the cutting edge—these mundane "gotchas" always come in to screw up all your grand plans. So we had to retreat from doing the 15-nanometer tents, because even though we like them from the physics point of view, we couldn't trust that the target would survive to the shot.

That's why there's a certain pace to these things—it's hard to go much faster because of these practical limitations. Every issue and every fix potentially creates another issue, and that's kind of what's going on now.

Q: Last year you said we were about halfway to ignition. Where would you say you are now?

The key metric is what people call the normalized Lawson criterion. It's a measure of the ratio of where you are compared to where you need to be on a normalized scale of zero to one. The metric says we're at seven-tenths. But that's really not much different from where we were last year.

We essentially need to double our performance, especially since even though we treat the boundary to ignition as this well-known target—technically speaking, when the power from alpha-particle self-heating, which we call "bootstrapping," outstrips the rate at which X-ray losses and electron conduction cool the implosion—it's actually a little fuzzy. Because of that you have to build in extra margin against that fuzz. We know we need to move in that direction, but there's still some uncertainty about exactly what conditions we need. We just know it has to be a fair bit higher than where we are, and likely about a factor of two higher.

Q: Alpha heating is where the alpha particles produced by the fusion reactions in the central hotspot begin to heat the outer layers of fuel—and that is in fact occurring, correct?

Yes, and alpha-particle self-heating is a big deal. The first time it was done in a laboratory at significant levels, it was done here. It's an accomplishment. It's not ignition, but we're getting an alpha-dominated plasma now, and from a scientific point of view for someone who works in plasma physics, that's really exciting.

It's actually a unique region, and I believe it was sort of overlooked before as well. People were maybe a little over-optimistic about quickly getting to ignition and just skipped right over it, but actually it's a very interesting regime to be in, where the heating from the alpha particles is competing with all the energy losses in the center of the implosion, and that actually is quite useful for understanding our models. Essentially it's a competition between large numbers, heating and losses, and we can examine it using these implosions as a microscope, in this regime right now. Not that we don't want to get further quickly, but it's still an interesting regime.

Q: Has the alpha heating gone up at all in the recent DT experiments?

The best ones have basically been pegged at right above 2 to 2.3—an alpha heating yield multiplier of 2 to 2.3 over the yield expected from the implosion just due to the energy we put in. That's pretty much been the same for the best shots since last May. We're learning these other things, trying things and hopefully we'll have another step coming up.
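One way to read the multiplier is as the total yield divided by the yield the implosion would have produced without alpha heating. Under that reading, which is an interpretation of the answer above and not a formula given in the interview, the share of the yield attributable to alpha heating follows directly. The function name is illustrative.

```python
def alpha_heating_fraction(yield_multiplier: float) -> float:
    """Share of total yield attributable to alpha heating.

    Assumes total_yield = yield_multiplier * yield_without_alpha_heating,
    so the extra share is 1 - 1 / yield_multiplier.
    """
    if yield_multiplier < 1.0:
        raise ValueError("a yield multiplier below 1 is not meaningful here")
    return 1.0 - 1.0 / yield_multiplier


# Multipliers quoted in the text for the best shots.
for multiplier in (2.0, 2.3):
    share = alpha_heating_fraction(multiplier)
    print(f"multiplier {multiplier}: {share:.1%} of the yield from alpha heating")
```

With multipliers of 2 to 2.3, about half or slightly more of the yield in these shots would come from alpha heating under this assumption.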

Q: Going forward, which strategies seem to be the most promising?

As a part of the strategy, we learn from Mother Nature what works and what doesn't, as opposed to just using the models. We take the elements of what works and try to put them together and build on that.

The adiabat shaping is an example of trying to take something that works, the stability control from the high-foot, and combine it with the next step, getting the higher compression. We see the diamond campaign having success with these lower gas-filled hohlraums, so combining these alternate pulse shapes that control instability with these different hohlraums, especially the lower gas-filled, low LPI—or laser-plasma instability—hohlraums, is a way to take the next step further.

What's nice about having these multiple basecamps is that they're not independent, competing basecamps. Instead, we all leverage from one another. As one team learns something, it's communicated to the other teams, and they see if they can use it or not and vice versa. In a sense, we're working as one larger team, but because people can only process so much, everyone is focusing on his or her one effort and it's contributing to the work of the larger unit.

We want to get the good instability control, higher convergence and good shape control at the same time. That's probably going to be most of our concentration of effort for the upcoming fiscal year, to combine those elements into one while also doing science experiments to better understand what's going on in the hohlraums. The engineering teams are working with the physics teams to try to address those more mundane issues of how to hold the capsules in without getting these perturbations that tear it up. That's the near-term tactic, and we'll have to see where we stand at the end of next year.

In addition, because the levels of performance that have been achieved are starting to get useful for high energy density physics purposes, we're starting to spin off, for example, the high-foot implosion for applications for reactions-in-flight radiochemistry and other nuclear research that can take advantage of the level of neutrons that are being produced with NIF.

But it is all an evolutionary process. The idea that you just design something and that fixes all the problems and it's just going to work as soon as you blast it with the laser is just not in line with experience or reality. We have designs that are meant to be stepping stones, and we evolve those designs toward where we know we need to be. The whole process, the whole strategy of basecamps, is working in steps and finding the path up the mountain.

Provided by Lawrence Livermore National Laboratory