November 12, 2014 report

Virtual reality study shows echolocation in humans not just about the ears

(Phys.org) A pair of researchers at Ludwig-Maximilians-Universität München in Germany has found that echolocation in humans involves more than just the ears. In their paper published in the journal Royal Society Open Science, Ludwig Wallmeier and Lutz Wiegrebe describe how echolocation is thought to work in humans compared with other animals, and report the results of a study they conducted with volunteers and a virtual reality system.

Echolocation is a means of locating nearby objects by emitting sounds and listening for the echoes that bounce back from them. Bats are perhaps the most famous echolocators, but many other animals, including humans, have some degree of the ability as well. Wallmeier and Wiegrebe note that several recent studies have examined how well humans can use sound to navigate when they are unable to see. They also note, however, that none of these studies has been able to formally quantify how echolocation interacts with the listener's own movement, which tends to muddy the results. Their study set out to do just that.
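The basic timing relation behind echo ranging can be sketched as follows. This is a minimal illustration, not the researchers' method: it assumes a speed of sound of about 343 m/s (dry air at roughly 20 °C) and an idealized echo whose arrival time can be measured exactly, and the function name `echo_distance_m` is hypothetical. Because a click travels to the wall and back, the one-way distance is half the speed of sound multiplied by the delay.

```python
# Assumed speed of sound in dry air at about 20 °C, in metres per second.
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_distance_m(round_trip_delay_s: float) -> float:
    """Hypothetical helper: distance to a reflecting surface from the
    delay between emitting a click and hearing its echo.

    The sound covers the distance twice (out and back), so the one-way
    distance is half of speed multiplied by delay.
    """
    if round_trip_delay_s < 0:
        raise ValueError("delay must be non-negative")
    return SPEED_OF_SOUND_M_PER_S * round_trip_delay_s / 2


# A wall about 1 m away returns the echo after roughly 5.8 ms.
print(round(echo_distance_m(0.00583), 2))  # 1.0
```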

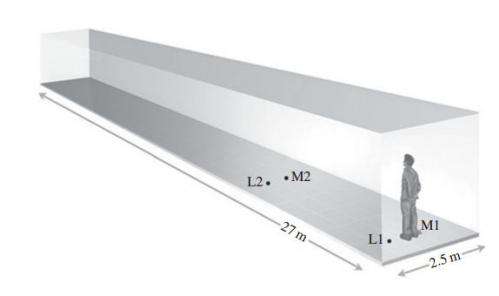

To find out how good people are at echolocation and which parts of the body are involved, they enlisted eight sighted students. Each was asked to wear a blindfold and to make clicking noises while making their way through a long corridor. Over several weeks, each learned to distinguish between the echoes of their clicks, which allowed them to judge the distance to the walls and eventually to walk through the corridor easily without any other assistance.

Once they had mastered the real corridor, each volunteer was asked to sit at a virtual reality workstation that simulated a walk through the same corridor and to use the same clicks as before. In the simulation, the researchers varied the conditions: they tested how well the volunteers performed when they could change the orientation of the corridor, and how well they could continue their virtual walk when their head or body was held still, which prevented them from sampling the echoes from different angles.

After analyzing the data they had collected, the two researchers found that the volunteers lost most of their echolocation ability when they were prevented from moving: they ran into walls that they easily avoided when allowed to move freely. By moving echolocation experiments into a simulated environment, the researchers believe they have finally found a way to quantify echolocation ability in humans.

More information: Self-motion facilitates echo-acoustic orientation in humans, Royal Society Open Science, DOI: 10.1098/rsos.140185, rsos.royalsocietypublishing.org/content/1/3/140185

ABSTRACT

The ability of blind humans to navigate complex environments through echolocation has received rapidly increasing scientific interest. However, technical limitations have precluded a formal quantification of the interplay between echolocation and self-motion. Here, we use a novel virtual echo-acoustic space technique to formally quantify the influence of self-motion on echo-acoustic orientation. We show that both the vestibular and proprioceptive components of self-motion contribute significantly to successful echo-acoustic orientation in humans: specifically, our results show that vestibular input induced by whole-body self-motion resolves orientation-dependent biases in echo-acoustic cues. Fast head motions, relative to the body, provide additional proprioceptive cues which allow subjects to effectively assess echo-acoustic space referenced against the body orientation. These psychophysical findings clearly demonstrate that human echolocation is well suited to drive precise locomotor adjustments. Our data shed new light on the sensory–motor interactions, and on possible optimization strategies underlying echolocation in humans.

Journal information: Royal Society Open Science

© 2014 Phys.org