Computer scientists to develop smart vision machines

Five years ago, Laurent Itti of the University of Southern California presented groundbreaking research on how humans see the world. Now he is heading a $16-million Defense Advanced Research Projects Agency (DARPA) effort to build machines that see the world the way he discovered humans do.

But to do this, Itti and fellow scientists from eight other institutions first plan to learn more about how humans see, and then immediately use this more detailed knowledge to build new machines.

The "neuromorphic visual system for intelligent unmanned sensors" project builds on a previous effort called Neovision, which attempted the same goal, but relied on existing software systems that were not completely compatible with the neural systems that Itti had discovered were crucial.

The new project will try to build its eyes from scratch: first finding out more precisely how these neural systems function, then building new software and hardware that embed the new understanding, so that research feeds directly into development in a continuing reciprocal process.

The goal is clear: "The modern battlefield requires that soldiers rapidly integrate information from a multitude of sensors," says Itti's project description. "A bottleneck with existing sensors is that they lack intelligence to parse and summarize the data. This results in information overload … Our goal is to create intelligent general-purpose cognitive vision sensors inspired from the primate brain, to alleviate the limitations of such human-based analysis."

To do this will require applying existing understandings as well as new fundamental research: "At the core of the present project is the belief that new basic science is crucial," the project description continues.

This is because living creatures' vision involves much more than just images. Rather, it was honed by evolution to seek out the specific visual signals critical to a seeing creature's survival. This involves complex circuitry in the retina, where the outputs from light detector cells are processed to give rise to 12 different types of visual "images" of the world (as opposed to the red, green and blue images of a standard camera). These images are further processed by complex neural circuits in the visual cortex and in deep-brain areas including the superior colliculus, with feedback loops driving eye movements.

Itti's 2006 paper "Bayesian Surprise Attracts Human Attention," co-authored by Pierre Baldi of UC Irvine, argued that a mathematical algorithm that scans incoming visual data for a precisely characterized element the authors called "surprise" seemed to fit experimental eye-movement data. The theory was the basis of the earlier Neovision work, and has since been extensively revised and developed.
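For readers who want a concrete sense of the idea, Bayesian surprise is generally defined as the Kullback-Leibler divergence between an observer's beliefs after seeing new data and its beliefs before. The short Python sketch below illustrates that definition on a toy set of discrete hypotheses. It is only an illustration under simplifying assumptions, not the project's software: the function names, the two-hypothesis example and the use of bits as the unit are all chosen here for clarity.

```python
import math


def posterior(prior, likelihood):
    """Update beliefs over discrete hypotheses with Bayes' rule.

    prior[i]      -- belief in hypothesis i before the observation
    likelihood[i] -- probability of the observation under hypothesis i
    """
    unnormalized = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnormalized)
    if total == 0:
        raise ValueError("observation is impossible under every hypothesis")
    return [u / total for u in unnormalized]


def bayesian_surprise(prior, likelihood):
    """KL divergence, in bits, between the posterior and the prior.

    Data that leave beliefs unchanged carry zero surprise; data that
    force a large shift in beliefs carry high surprise.
    """
    post = posterior(prior, likelihood)
    # Terms with zero posterior mass contribute nothing to the sum.
    return sum(q * math.log2(q / p) for q, p in zip(post, prior) if q > 0)


if __name__ == "__main__":
    prior = [0.5, 0.5]  # hypothetical: two equally likely explanations
    print(bayesian_surprise(prior, [0.5, 0.5]))  # uninformative observation
    print(bayesian_surprise(prior, [1.0, 0.0]))  # observation rules one out
```

In this toy case, an observation that is equally likely under both hypotheses changes nothing and scores zero, while one that eliminates a hypothesis scores one bit. A vision model built on the idea would, presumably, compute such a score at many image locations and direct attention toward the highest values.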

The new plan is to go much farther than Neovision. The researchers will model the entire complex interactive system from the ground up to understand the exact messages transmitted from the retina to the cortex and further to the colliculus, and how the brain cells understand them. Then they will attempt to embed parallel transactions, using the same perception algorithms, into working silicon systems.

The execution of this strategy will be unusual. Typically, such projects aim at a finished system at the end of the project, reporting progress toward such a system at regular intervals.

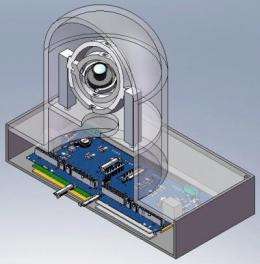

Instead, Itti's plan is to create a whole series of prototypes, complete with breadboard hardware, at a rate of one every six months, as understandings emerge from the project's basic science side.

Researchers will regularly convene at USC for intensive workshops, to learn the findings of a "core team of engineers at USC [interacting] with Ph.D. students and postdocs, performing the basic science directly in an academic setting. Because the engineering core will work directly with the researchers, we expect that the technology transition will be swift and efficient," said Itti.

Itti is an associate professor in the Viterbi School Department of Computer Science. The other institutions that will be part of the effort he is leading include UC Berkeley, Caltech, MIT, Queen's University (Canada), Brown, Arizona State, and Penn State, along with a company, Imagize, that specializes in the field.

Provided by University of Southern California