June 10, 2010 report

Entropy study suggests Pictish symbols likely were part of a written language

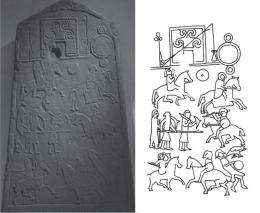

(PhysOrg.com) -- How can you tell the difference between random pictures and an ancient, symbol-based language? A new study has shown that concepts in entropy can be used to measure the degree of repetitiveness in Pictish symbols from the Dark Ages, with the results suggesting that the inscriptions appear to be much closer to a modern written language than to random symbols. The Picts, a group of Celtic tribes that lived in Scotland from around the 4th to the 9th centuries AD, left behind only a few hundred stones expertly carved with symbols. Although the symbols appear to convey information, it has so far been impossible to prove that this small sample of symbols represents a written language.

As the team of UK researchers including Rob Lee of the University of Exeter explains, a fundamental characteristic of any communication system is a degree of uncertainty about which character will occur next. This uncertainty can also be thought of as entropy or information, and its level distinguishes a communication system from characters arranged randomly or in a simple repetitive pattern.

In their study, the UK researchers analyzed different texts in terms of the average uncertainty of a character’s appearance when the preceding character is known. The greater this uncertainty (that is, the more difficult it is to predict the second character), the greater the entropy of the character pair. Randomly arranged characters give the highest values and simple repetitive patterns the lowest, so values that fall between these extremes point toward a written language. The researchers call this measurement the Shannon di-gram entropy, where the term “di-gram” refers to two characters.
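The “average uncertainty of a character when the preceding character is known” corresponds to what information theory calls conditional entropy. The sketch below computes that textbook quantity in bits for any sequence of symbols. It is an illustration only: the function name is hypothetical, and the exact formula used in the paper may differ in detail.

```python
import math
from collections import Counter


def digram_conditional_entropy(symbols):
    """Average uncertainty, in bits, of a symbol given the symbol before it.

    Textbook conditional entropy: H = -sum p(a, b) * log2 p(b | a),
    summed over every adjacent pair (a, b) observed in the sequence.
    """
    pairs = list(zip(symbols, symbols[1:]))  # adjacent pairs (di-grams)
    if not pairs:
        return 0.0

    pair_counts = Counter(pairs)
    first_counts = Counter(first for first, _ in pairs)
    total = len(pairs)

    entropy = 0.0
    for (first, _second), count in pair_counts.items():
        p_pair = count / total                          # p(a, b)
        p_second_given_first = count / first_counts[first]  # p(b | a)
        entropy -= p_pair * math.log2(p_second_given_first)
    return entropy


# A strictly alternating pattern is fully predictable.
print(digram_conditional_entropy("abababab"))
# Here each symbol is followed by either symbol equally often.
print(digram_conditional_entropy("aabbaabba"))
```

A perfectly repetitive sequence scores zero because the next symbol is always known, while sequences with more varied successors score higher.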

However, when applying this measurement to more than 400 datasets of known written languages, the researchers found that it didn’t work for small sample sizes due to their insufficient representation of characters, or “incompleteness.” This shortcoming posed a problem for the small sample sizes of Pictish symbols.

To confront this challenge, the researchers had to modify the entropy formula. They proposed that a sample’s degree of completeness could be determined by the ratio of the number of different di-grams to the number of different uni-grams (single characters) in the sample. In other words, a sample with more ways of pairing its characters has a greater completeness than samples with relatively few ways of pairing them. Since the Shannon di-gram entropy depends upon this degree of completeness, the measurement offers a way to normalize the Shannon di-gram entropy. The researchers also accounted for the fact that some languages (e.g., Morse code) have more repetitious di-grams than others by proposing a di-gram repetition factor (the ratio of the number of di-grams that appear only once in a sample to the total number of di-grams in the sample).
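The two ratios described above can be counted directly from a symbol sequence. The following is a minimal sketch under the definitions given in this article; the function name is hypothetical, and how the researchers combine the two values to normalize the entropy is not specified here, so the sketch stops at computing them.

```python
from collections import Counter


def digram_statistics(symbols):
    """Return (completeness_ratio, repetition_factor) for a sequence.

    completeness_ratio: distinct di-grams / distinct uni-grams
    repetition_factor:  di-grams seen exactly once / total di-grams
    """
    pairs = list(zip(symbols, symbols[1:]))  # adjacent pairs (di-grams)
    pair_counts = Counter(pairs)

    distinct_unigrams = len(set(symbols))
    distinct_digrams = len(pair_counts)
    total_digrams = len(pairs)
    seen_once = sum(1 for count in pair_counts.values() if count == 1)

    completeness = distinct_digrams / distinct_unigrams if distinct_unigrams else 0.0
    repetition = seen_once / total_digrams if total_digrams else 0.0
    return completeness, repetition


# "abcab" has di-grams ab, bc, ca, ab: 3 distinct out of 4 in total,
# built from 3 distinct characters, with bc and ca occurring once each.
print(digram_statistics("abcab"))  # (1.0, 0.5)
```

Both values are defined for any sample length of at least two symbols, which is what makes them usable on inscriptions as short as the Pictish ones.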

The researchers showed that the degree of completeness and the di-gram repetition factor could be calculated for any sample size. They demonstrated how to use the two parameters to identify characters as words, syllables, letters, and other elements of language. For the small set of Pictish symbols, the researchers concluded that the symbols likely correspond to words, based on their Shannon di-gram entropy as modified by these two parameters. In addition to showing that it’s very unlikely that the Pictish symbols are simply random pictures, these methods could be applied to verbal datasets to investigate the level of information communicated by animal languages, a field in which studies are often hampered by small sample datasets.

More information:

Rob Lee, Philip Jonathan, and Pauline Ziman. “Pictish symbols revealed as a written language through application of Shannon entropy.” Proc. R. Soc. A, doi:10.1098/rspa.2010.0041

via: CERN Courier

© 2010 PhysOrg.com