Futuristic 48-Core Intel Chip Could Reshape How Computers Are Built

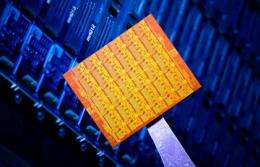

(PhysOrg.com) -- Researchers from Intel Labs demonstrated an experimental, 48-core Intel processor, or "single-chip cloud computer," that rethinks many of the approaches used in today's designs for laptops, PCs and servers.

This futuristic chip has about 10 to 20 times as many processing engines as today's most popular Intel Core-branded processors.

The long-term research goal is to give future computers dramatic new scaling capabilities that could spur entirely new software applications and human-machine interfaces. The company plans to engage industry and academia next year by sharing 100 or more of these experimental chips for hands-on research in developing new software applications and programming models.

While Intel will integrate key features into a new line of Core-branded chips early next year and introduce six- and eight-core processors later in 2010, this prototype contains 48 fully programmable Intel processing cores, the most ever on a single silicon chip. It also includes a high-speed on-chip network for sharing information, along with newly invented power management techniques that let all 48 cores run with high energy efficiency, drawing as little as 25 watts, or 125 watts at maximum performance (about as much as today's Intel processors, or just two standard household light bulbs).
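A quick back-of-the-envelope calculation puts these power figures in per-core terms. It assumes, for simplicity, that power is divided evenly among the 48 cores, which overstates each core's share because the on-chip network and other circuitry also draw power:

```latex
\frac{25\ \text{W}}{48\ \text{cores}} \approx 0.52\ \text{W per core},
\qquad
\frac{125\ \text{W}}{48\ \text{cores}} \approx 2.6\ \text{W per core}
```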

Intel plans to use this experimental chip to better understand how to schedule and coordinate the many cores of its future mainstream chips. For example, future laptops with this much processing capability could have "vision," perceiving objects and motion as they happen and with high accuracy, much as a human does.

Imagine someday taking a virtual dance lesson, or shopping online with a future laptop's 3-D camera and display showing you a "mirror" image of yourself wearing the clothes you are interested in. Twirl and turn, and watch how the fabric drapes and how the color complements your skin tone.

This kind of interaction could eliminate the need for keyboards, remote controls or joysticks for gaming. Some researchers believe computers may even be able to read brain waves, so that simply thinking of a command, such as dictating words, would be enough without speaking aloud.

Intel Labs has nicknamed this test chip a "single-chip cloud computer" because it resembles the organization of the datacenters used to create a "cloud" of computing resources over the Internet, which deliver services such as online banking, social networking and online stores to millions of users.

Cloud datacenters consist of tens to thousands of computers connected by a physically cabled network, which distribute large tasks and massive datasets in parallel. Intel's new experimental research chip uses a similar approach, yet all the computers and networks are integrated on a single piece of Intel 45nm, high-k metal-gate silicon about the size of a postage stamp, dramatically reducing the number of physical computers needed to build a cloud datacenter.

"With a chip like this, you could imagine a cloud datacenter of the future which will be an order of magnitude more energy efficient than what exists today, saving significant resources on space and power costs," said Justin Rattner, head of Intel Labs and Intel's Chief Technology Officer. "Over time, I expect these advanced concepts to find their way into mainstream devices, just as advanced automotive technology such as electronic engine control, air bags and anti-lock braking eventually found their way into all cars."

Cores Allow Software to Intelligently Direct Data for Efficiency

The concept chip features a high-speed network between cores to share information and data efficiently. This approach significantly improves communication performance and energy efficiency over today's datacenter model, since data packets have to travel only millimeters across the chip instead of tens of meters to another computer system.

Application software can use this network to pass information directly between cooperating cores in a few microseconds, reducing the need to access data in slower off-chip system memory. Applications can also dynamically choose exactly which cores to use for a given task at a given time, matching performance and energy use to the demands of each task.
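To make the idea of cores passing data directly to one another concrete, here is a minimal Python sketch under loudly stated assumptions: each "core" is modeled as a thread and each on-chip link as a `queue.Queue` mailbox. The names `stage` and `run_pipeline` are hypothetical; this is not Intel's programming interface for the chip, and Python threads illustrate the data flow rather than real parallel speedup. The stages are chained so that each result moves from one core to the next, as in an assembly line:

```python
import queue
import threading

# Hypothetical model, NOT the chip's actual programming interface:
# each "core" is a thread and each on-chip link is a FIFO mailbox.
SENTINEL = object()  # unique end-of-stream marker passed down the pipeline


def stage(func, inbox, outbox):
    """One pipeline stage: receive an item, transform it, pass it on."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # tell the next stage to shut down too
            return
        outbox.put(func(item))


def run_pipeline(data, funcs):
    """Chain one thread per function so results flow stage to stage in order."""
    mailboxes = [queue.Queue() for _ in range(len(funcs) + 1)]
    workers = [
        threading.Thread(target=stage, args=(f, mailboxes[i], mailboxes[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for w in workers:
        w.start()
    for item in data:
        mailboxes[0].put(item)  # feed the first stage
    mailboxes[0].put(SENTINEL)
    results = []
    while (item := mailboxes[-1].get()) is not SENTINEL:
        results.append(item)  # collect from the last stage
    for w in workers:
        w.join()
    return results


if __name__ == "__main__":
    # Three stages: double, add one, square.
    print(run_pipeline(range(5), [lambda x: x * 2, lambda x: x + 1, lambda x: x * x]))
```

Running it prints `[1, 9, 25, 49, 81]`: each value is doubled by the first stage, incremented by the second and squared by the third. On real many-core hardware each stage would run on its own core, ideally a nearby one, so messages cross as little of the on-chip network as possible.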

Related tasks can be executed on nearby cores, even passing results directly from one to the next as in an assembly line to maximize overall performance. Software control also extends to voltage and clock speed: cores can be turned on and off or change their performance levels, continuously adapting to use the minimum energy needed at any given moment.
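The payoff from managing voltage and clock speed together follows from the standard first-order model of dynamic (switching) power in CMOS chips, where $\alpha$ is the activity factor, $C$ the switched capacitance, $V$ the supply voltage and $f$ the clock frequency. This is a general textbook approximation that ignores leakage power, not a formula taken from Intel's design, and the settings below (voltage lowered to 70% and frequency to 50%) are purely illustrative:

```latex
P_{\text{dyn}} \approx \alpha\, C\, V^{2} f
\qquad\Rightarrow\qquad
\frac{P'_{\text{dyn}}}{P_{\text{dyn}}}
  = \left(\frac{V'}{V}\right)^{2} \frac{f'}{f}
  = (0.7)^{2} \times 0.5 \approx 0.25
```

Because a task at half the clock speed takes about twice as long, its dynamic energy falls to roughly $(0.7)^2 \approx 0.49$ of the original. This is why lowering voltage along with frequency, rather than frequency alone, saves energy.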

Overcoming Software Challenges

Programming processors with multiple cores is a well-known challenge for the industry as computer and software makers move toward many cores on a single silicon chip. The prototype allows popular and efficient parallel programming approaches used in cloud datacenter software to be applied on the chip. Researchers from Open Cirrus, a research collaboration of Intel, HP and Yahoo, have already begun porting cloud applications to this 48-core IA (Intel architecture) chip using Hadoop, a Java software framework for data-intensive distributed applications, as Rattner demonstrated today.
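Hadoop is an implementation of the MapReduce programming model, in which a job is split into independent "map" tasks whose outputs are grouped by key ("shuffle") and combined by "reduce" tasks. The minimal Python sketch below illustrates that model with the classic word-count example. It does not use Hadoop's API; the function names are hypothetical, and a thread pool merely stands in for the cores or machines that would run the tasks in parallel:

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(mapped_lists):
    """Shuffle: group all emitted values by their key."""
    groups = defaultdict(list)
    for pairs in mapped_lists:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values emitted for one key."""
    return key, sum(values)


def word_count(documents, workers=4):
    # Threads stand in for cores here; each map task handles one split.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, documents))
        grouped = shuffle(mapped)
        reduced = pool.map(lambda kv: reduce_phase(*kv), grouped.items())
        return dict(reduced)


if __name__ == "__main__":
    print(word_count(["the cat sat", "The dog sat"]))
```

Because map tasks share no state and reduce tasks each own one key, the same program can be spread across many cores or many machines without changing its logic, which is what makes the model a natural fit for a chip organized like a small datacenter.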

Intel plans to build 100 or more experimental chips for use by dozens of industrial and academic research collaborators around the world with the goal of developing new software applications and programming models for future many-core processors.

"Microsoft is partnering with Intel to explore new hardware and software architectures supporting next-generation client plus cloud applications," said Dan Reed, Microsoft's corporate vice president of Extreme Computing. "Our early research with the single chip cloud computer prototype has already identified many opportunities in intelligent resource management, system software design, programming models and tools, and future application scenarios."

This milestone is the latest achievement of Intel's Tera-scale Computing Research Program, which aims to break the barriers to scaling future chips to tens or hundreds of cores. The chip was co-developed by Intel Labs at its Bangalore (India), Braunschweig (Germany) and Hillsboro, Ore. (U.S.) research centers. Details on the chip's architecture and circuits are scheduled to be published in a paper at the International Solid-State Circuits Conference in February.

Provided by Intel