Researchers report finer lines for microchips: Advance could lead to next-generation computer chips, solar cells

MIT researchers have significantly advanced nanoscale lithography, the patterning technology used to manufacture computer chips and other electronic devices, producing finer patterns of lines over larger areas than other methods have achieved.

Their new technique could pave the way for next-generation computer memory and integrated-circuit chips, as well as advanced solar cells and other devices.

The team has created lines about 25 nanometers (billionths of a meter) wide separated by 25 nm spaces, a 50 nm period. For comparison, the most advanced commercially available computer chips today have a minimum feature size of 65 nm. Intel recently announced that it will start manufacturing at the 32 nm minimum line-width scale in 2009, and the industry roadmap calls for 25 nm features in the 2013-2015 time frame.

The MIT technique could also be economically attractive because it does not require the chemically amplified resists, immersion lithography techniques and expensive lithography tools that are widely considered essential for optical lithography at this scale. Periodic patterns at the nanoscale, while having many important scientific and commercial applications, are notoriously difficult to produce with low cost and high yield. The new method could make possible the commercialization of many new nanotechnology inventions that have languished in laboratories due to the lack of a viable manufacturing method.

The MIT team includes Mark Schattenburg and Ralf Heilmann of the MIT Kavli Institute of Astrophysics and Space Research and graduate students Chih-Hao Chang and Yong Zhao of the Department of Mechanical Engineering. Their results have been accepted for publication in the journal Optics Letters and were recently presented at the 52nd International Conference on Electron, Ion and Photon Beam Technology and Nanofabrication in Portland, Ore.

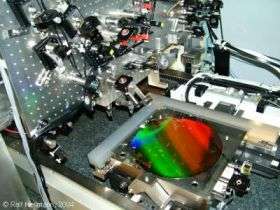

Schattenburg and colleagues generated the patterns with interference lithography (IL), in which two laser beams cross to form a periodic pattern of bright and dark fringes that is recorded in a light-sensitive resist. They used a tool called the nanoruler, built by MIT graduate students to perform a particularly high-precision variant of IL called scanning-beam interference lithography, or SBIL. This recently developed technique uses 100 MHz sound waves, controlled by custom high-speed electronics, to diffract and frequency-shift the laser light, which allows large areas to be patterned rapidly with unprecedented control over feature geometry.
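Interference lithography rests on a textbook relation: two plane waves of wavelength λ crossing at a half-angle θ form fringes of period Λ = λ / (2 sin θ), and if the two beams differ in optical frequency by Δf, the fringes drift across the substrate at a speed of Λ·Δf. The short sketch below evaluates those relations. It is a generic illustration, not the nanoruler's control software, and it assumes symmetric beams in air; the 351.1 nm wavelength and 1 kHz frequency offset are hypothetical values chosen for illustration, not figures from the article.

```python
import math

# Generic two-beam interference relations (illustrative sketch).
# Assumptions: symmetric plane-wave beams in air (refractive index 1);
# all numeric inputs below are hypothetical, not from the article.

def fringe_period(wavelength_nm: float, half_angle_deg: float) -> float:
    """Fringe period in nm: wavelength / (2 * sin(half-angle))."""
    if not 0 < half_angle_deg <= 90:
        raise ValueError("half-angle must be in (0, 90] degrees")
    return wavelength_nm / (2 * math.sin(math.radians(half_angle_deg)))

def half_angle_for_period(wavelength_nm: float, period_nm: float) -> float:
    """Half-angle in degrees needed for a target period.

    Raises ValueError if the period is below wavelength / 2, the
    smallest period a single two-beam exposure in air can produce.
    """
    ratio = wavelength_nm / (2 * period_nm)
    if ratio > 1:
        raise ValueError(f"period below lambda/2 = {wavelength_nm / 2:.1f} nm")
    return math.degrees(math.asin(ratio))

def fringe_velocity_m_per_s(period_nm: float, delta_f_hz: float) -> float:
    """Fringe drift speed: period times the beams' frequency difference."""
    return period_nm * 1e-9 * delta_f_hz

if __name__ == "__main__":
    lam = 351.1  # hypothetical UV laser wavelength, nm
    print(f"period at 30 deg: {fringe_period(lam, 30):.1f} nm")
    print(f"smallest single-exposure period: {fringe_period(lam, 90):.1f} nm")
    print(f"angle for a 400 nm period: {half_angle_for_period(lam, 400):.1f} deg")
    # hypothetical 1 kHz frequency offset between the two beams
    print(f"fringe drift: {fringe_velocity_m_per_s(400, 1e3) * 1e3:.2f} mm/s")
```

Because the period depends only on λ and θ, a single two-beam exposure in air cannot print a period finer than λ/2, which is why `half_angle_for_period` rejects smaller targets.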

IL has been around for a long time, but SBIL has made precise, repeatable pattern registration and overlay over large areas possible for the first time, thanks to a new high-precision phase detection algorithm developed by Zhao and a novel image reversal process developed by Chang.

According to Schattenburg, "What we're finding is that control of the lithographic imaging process is no longer the limiting step. Material issues such as line sidewall roughness are now a major barrier to still-finer length scales. However, there are several new technologies on the horizon that have the potential for alleviating these problems. These results demonstrate that there's still a lot of room left for scale shrinkage in optical lithography. We don't see any insurmountable roadblocks just yet."

Source: MIT, by David Chandler