February 6, 2017 feature

The thermodynamics of learning

(Phys.org)—While investigating how efficiently the brain can learn new information, physicists have found that, at the neuronal level, learning efficiency is ultimately limited by the laws of thermodynamics—the same principles that limit the efficiency of many other familiar processes.

"The greatest significance of our work is that we bring the second law of thermodynamics to the analysis of neural networks," Sebastian Goldt at the University of Stuttgart, Germany, told Phys.org. "The second law is a very powerful statement about which transformations are possible—and learning is just a transformation of a neural network at the expense of energy. This makes our results quite general and takes us one step towards understanding the ultimate limits of the efficiency of neural networks."

Goldt and coauthor Udo Seifert have published a paper on their work in a recent issue of Physical Review Letters.

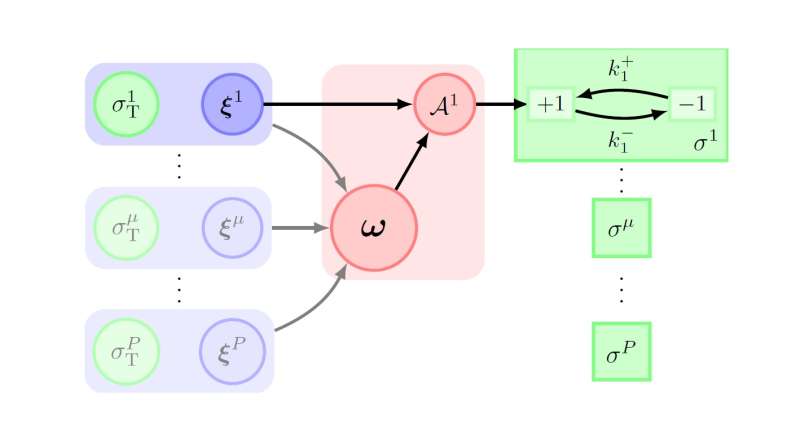

Since all brain activity is tied to the firing of billions of neurons, at the neuronal level the question "how efficiently can we learn?" becomes "how efficiently can a neuron adjust its output signal in response to the patterns of input signals it receives from other neurons?" As neurons get better at firing in response to certain patterns, the corresponding thoughts are reinforced in our brains, as captured by the adage "neurons that fire together, wire together."
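As a rough illustration of that adage, and not the model Goldt and Seifert analyze, the textbook Hebbian rule strengthens each connection in proportion to the product of its input signal and the neuron's output. The minimal Python sketch below assumes a single neuron with +1/-1 inputs and an output clamped to +1. The helper names `hebbian_update` and `response`, the learning rate, and the example patterns are hypothetical choices made only for illustration.

```python
# Illustrative textbook Hebbian rule (hypothetical helper names and values;
# NOT the model from the Goldt-Seifert paper): dw_i = rate * x_i * y.

def hebbian_update(weights, inputs, output, rate=0.1):
    """Return new weights after one Hebbian step: w_i += rate * x_i * y."""
    return [w + rate * x * output for w, x in zip(weights, inputs)]


def response(weights, inputs):
    """Weighted sum of the inputs, i.e. the neuron's net input."""
    return sum(w * x for w, x in zip(weights, inputs))


# An assumed input pattern of +1/-1 "firing" signals, plus an unrelated one.
pattern = [1, -1, 1, 1, -1]
other = [1, 1, 1, -1, 1]

weights = [0.0] * len(pattern)
for _ in range(10):
    # Inputs that fire together with the clamped output y = +1 are "wired
    # together": their weights move toward the sign of the input.
    weights = hebbian_update(weights, pattern, output=1)

print("trained pattern:", response(weights, pattern))
print("other pattern:  ", response(weights, other))
```

After ten repetitions the weights roughly mirror the trained pattern, so the neuron's net input to that pattern is much larger than its response to the unrelated one.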

In the new study, the scientists showed that learning efficiency is bounded by the total entropy production of a neural network. They demonstrated that the slower a neuron learns, the less heat and entropy it produces, which increases its efficiency. In light of this finding, the scientists introduced a new measure of learning efficiency based on energy requirements and thermodynamics.

As the results are very general, they can be applied to any learning algorithm that does not use feedback, such as those used in artificial neural networks.

"Having a thermodynamic perspective on neural networks gives us a new tool to think about their efficiency and gives us a new way to rate their performance," Goldt said. "Finding the optimal artificial neural network with respect to that rating is an exciting possibility, and also quite a challenge."

In the future, the researchers plan to analyze the efficiency of learning algorithms that do employ feedback, as well as investigate the possibility of experimentally testing the new model.

"On the one hand, we are currently researching what thermodynamics can teach us about other learning problems," Goldt said. "At the same time, we are looking at ways to make our models and hence our results more general. It's an exciting time to work on neural networks!"

More information: Sebastian Goldt and Udo Seifert. "Stochastic Thermodynamics of Learning." Physical Review Letters 118, 010601 (2017). DOI: 10.1103/PhysRevLett.118.010601. Also at arXiv:1611.09428 [cond-mat.stat-mech]

Journal information: Physical Review Letters

© 2017 Phys.org