Physicists prove energy input predicts molecular behavior

The world within a cell is a chaotic space, where the quantity and movement of molecules and proteins are in constant flux. Trying to predict how widely a protein or process may fluctuate is essential to knowing how well a cell is performing. But such predictions are hard to pin down in a cell's open system, where everything can look hopelessly random.

Now physicists at MIT have proved that at least one factor can set a limit, or bound, on the fluctuations of a given protein or process: energy. Given the amount of energy that a cell is spending, or dissipating, the fluctuations in a particular protein's quantity, for example, must fall within a specific range; fluctuations outside this range would be deemed impossible, according to the laws of thermodynamics.

This idea also works in the opposite direction: Given a range of fluctuations in, say, the rate of a motor protein's rotation, the researchers can determine the minimum amount of energy that the cell must be expending to drive that rotation.
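The two directions described above can be sketched with the thermodynamic uncertainty relation. This line of work establishes that inequality for steady-state currents, such as the net number of rotations J a motor makes during a time window: Var(J)/⟨J⟩² ≥ 2k_BT/Q, where Q is the heat dissipated over the same window and T is the temperature. The following is a minimal sketch that assumes an isothermal steady state. The function names `min_relative_variance` and `min_heat` and the example numbers are hypothetical.

```python
"""Sketch of the thermodynamic uncertainty relation (TUR) used in both directions.

Assumes a steady-state system at a single temperature and a time-integrated
current J (e.g. net clockwise-minus-counterclockwise rotations counted over a
fixed window). The bound is  Var(J) / <J>^2  >=  2 k_B T / Q,  where Q is the
heat dissipated during that same window. Function names are illustrative only.
"""

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)


def min_relative_variance(heat_joules: float, temperature_k: float) -> float:
    """Smallest allowed Var(J)/<J>^2 given the heat dissipated (energy -> fluctuations)."""
    if heat_joules <= 0:
        raise ValueError("a nonzero mean current requires positive dissipation")
    return 2.0 * K_B * temperature_k / heat_joules


def min_heat(mean: float, variance: float, temperature_k: float) -> float:
    """Smallest heat (J) compatible with an observed mean and variance (fluctuations -> energy)."""
    if variance <= 0:
        raise ValueError("variance must be positive")
    return 2.0 * K_B * temperature_k * mean**2 / variance


if __name__ == "__main__":
    T = 300.0  # room temperature, K
    # Hypothetical motor: 100 net rotations on average, variance 400, over the window.
    q_min = min_heat(mean=100.0, variance=400.0, temperature_k=T)
    print(f"minimum dissipation: {q_min:.3e} J = {q_min / (K_B * T):.1f} k_B T")
    # Dissipating exactly that much heat makes the observed relative variance the floor.
    print(f"relative variance floor: {min_relative_variance(q_min, T):.4f}")
```

With these illustrative numbers the floor is 50 k_BT, roughly 2.1 × 10⁻¹⁹ J at 300 K, so a motor this regular could not be running on less. The bound only involves the ratio Q/(k_BT), which is why working in units of k_BT keeps the arithmetic readable.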

"This ends up being a very powerful, general statement about what is physically possible, or what is not physically possible, in a microscopic system," says Jeremy England, the Thomas D. and Virginia W. Cabot Assistant Professor of Physics at MIT. "It's also a generally applicable design constraint for the architecture of anything you want to make at the nanoscale."

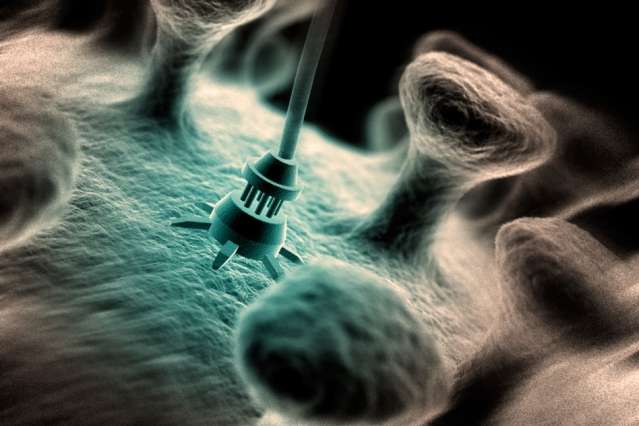

For instance, knowing how energy and microscopic fluctuations relate will help scientists design more reliable nanomachines, for applications ranging from drug delivery to fuel cell technology. These tiny synthetic machines are designed to mimic a molecule's motor-like behavior, but getting them to perform reliably at the nanoscale has proven extremely difficult.

"This is a general proof that shows that how much energy you feed the system is related in a quantitative way to how reliable you've made it," England says. "Having this constraint immediately gives you intuition, and a sort of road-ready yardstick to hold up to whatever it is you're trying to design, to see if it's feasible, and to direct it toward things that are feasible."

England and his colleagues, including Physics of Living Systems Fellow Todd Gingrich, postdoc Jordan Horowitz, and graduate student Nikolay Perunov, have published their results this week in Physical Review Letters.

Making sense of microscopic motions

The researchers' paper was inspired by another study published last summer by scientists in Germany, who speculated that a cell's energy dissipation might shape the fluctuations in certain microscopic processes. That paper addressed only typical fluctuations. England and his colleagues wondered whether the same results could be extended to include rare, "freak" instances, such as a sudden, temporary spike in a cell's protein quantity.

The team started with a general master equation, a model that describes how a small system changes over time, whether in the quantity of a given protein or in its direction of rotation. The researchers then employed large deviation theory, a mathematical technique for determining the probability distributions of processes that play out over long periods of time, to evaluate how a microscopic system such as a rotating protein would behave. Essentially, they calculated how the system fluctuated over a long period, for instance how often a protein rotated clockwise versus counterclockwise, and then developed a probability distribution for those fluctuations.
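The following sketch gives a concrete picture of what a master equation combined with large deviation theory yields. It assumes the simplest possible rotor, a toy model chosen for illustration rather than the general systems treated in the paper: the rotor steps clockwise at rate k₊ and counterclockwise at rate k₋, independently. Over a long time t, the net rotation rate j = J/t obeys P(j) ≈ exp(−t·I(j)). For this model the rate function I(j) follows in closed form from a Legendre transform of the scaled cumulant generating function λ(s) = k₊(eˢ − 1) + k₋(e⁻ˢ − 1). The rates used are made up.

```python
"""Large-deviation rate function for a toy two-direction rotor (biased random walk).

Assumption: clockwise steps occur at rate k_plus and counterclockwise steps at
rate k_minus, as independent Poisson processes. For the long-time net rotation
rate j = J/t, P(j) ~ exp(-t * I(j)); I(j) is the Legendre transform of the
scaled cumulant generating function lambda(s) = k_plus(e^s - 1) + k_minus(e^-s - 1).
"""
import math


def rate_function(j: float, k_plus: float, k_minus: float) -> float:
    """Closed-form I(j) = sup_s [s*j - lambda(s)] for the biased random walk."""
    root = math.sqrt(j * j + 4.0 * k_plus * k_minus)
    return k_plus + k_minus - root + j * math.log((j + root) / (2.0 * k_plus))


def rate_function_numeric(j: float, k_plus: float, k_minus: float,
                          s_max: float = 10.0, n: int = 20001) -> float:
    """Brute-force Legendre transform over a grid of s values, as a cross-check."""
    best = -math.inf
    for i in range(n):
        s = -s_max + 2.0 * s_max * i / (n - 1)
        lam = k_plus * (math.exp(s) - 1.0) + k_minus * (math.exp(-s) - 1.0)
        best = max(best, s * j - lam)
    return best


if __name__ == "__main__":
    k_plus, k_minus = 3.0, 1.0        # illustrative rates, steps per second
    mean_rate = k_plus - k_minus      # typical net rotation rate
    for j in (-1.0, 0.0, 1.0, mean_rate, 3.0):
        exact = rate_function(j, k_plus, k_minus)
        grid = rate_function_numeric(j, k_plus, k_minus)
        print(f"j = {j:+.1f}/s  I(j) = {exact:.5f}  (grid: {grid:.5f})")
```

I(j) is zero at the typical rate k₊ − k₋ and positive elsewhere, so any other long-time average becomes exponentially unlikely as t grows. For this model, rotating backward at a given speed is also penalized relative to rotating forward at that speed: I(−j) = I(j) + j·ln(k₊/k₋). Here ln(k₊/k₋) is the energy, in units of k_BT, that the toy rotor dissipates per net step.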

That distribution turns out to have a general form, which the team found could be bounded, or limited, by a simple mathematical expression. When they translated this expression into thermodynamic terms, to apply to the fluctuations in cells and other microscopic systems, they found that the bound boiled down to energy dissipation. In other words, how a microscopic system fluctuates is constrained by the energy put into the system.
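In symbols, the bound can be sketched as follows for a system in contact with a single heat bath at temperature T. Here I(j) is the large-deviation rate function of a time-averaged current j, ⟨j⟩ is its typical value, and σ is the entropy production rate in units of k_B (heat dissipated per unit time divided by k_BT). The notation is chosen for this sketch rather than copied from the paper. Expanding I(j) to second order around ⟨j⟩ recovers the thermodynamic uncertainty relation, where D is the long-time growth rate of the variance of the time-integrated current J_t.

```latex
P\!\left(\tfrac{J_t}{t} \approx j\right) \asymp e^{-t\,I(j)},
\qquad
I(j) \;\le\; \frac{\sigma}{4\langle j\rangle^{2}}\,\bigl(j-\langle j\rangle\bigr)^{2},
\qquad
\sigma = \frac{\dot Q}{k_B T}

I(j) \approx \frac{\bigl(j-\langle j\rangle\bigr)^{2}}{2D},
\quad D = \lim_{t\to\infty}\frac{\operatorname{Var}(J_t)}{t}
\;\;\Longrightarrow\;\;
D \ge \frac{2\langle j\rangle^{2}}{\sigma}
\;\;\Longrightarrow\;\;
\frac{\operatorname{Var}(J_t)}{\langle J_t\rangle^{2}} \;\ge\; \frac{2}{\sigma t} = \frac{2k_BT}{Q_t}
```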

"We have in mind trying to make some sense of molecular systems," Gingrich says. "What this proof tells us is, even without observing every single feature, by measuring the amount of energy lost from the system to the environment, it teaches us and limits the set of possibilities of what could be going on with the microscopic motions."

Pushing out of equilibrium

The team found that the minimum amount of energy required to produce a given distribution of fluctuations is related to a state that is "near-equilibrium." Systems that are at equilibrium are essentially at rest, with no energy coming in or out of the system. Any movement within the system is entirely due to the effect of the surrounding temperature, and therefore, fluctuations in whether a protein turns clockwise or counterclockwise, for example, are completely random, with an equal chance of rotating in either direction. Near-equilibrium systems are close to this state of rest; directional motion is generated by a small input of energy, but many features of the motion still appear as they do in equilibrium.

Most living systems, however, operate far from equilibrium, with so much energy constantly flowing into and out of a cell that the fluctuations of its proteins and processes do not resemble anything seen at equilibrium. Because such systems bear so little resemblance to equilibrium, it has been hard for scientists to uncover general features of their fluctuations. England and his colleagues have shown that a comparison can nevertheless be made: for a given rate of energy dissipation, fluctuations occurring far from equilibrium must be at least as large as those that occur near equilibrium.
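The comparison can be checked in a minimal sketch, assuming the simplest toy rotor rather than any system from the paper. The rotor takes clockwise steps at rate k₊ and counterclockwise steps at rate k₋, and each net step dissipates A = ln(k₊/k₋) in units of k_BT. Over time t, the mean net rotation is (k₊ − k₋)t, its variance is (k₊ + k₋)t, and the heat dissipated is A times the mean. The product of relative variance and dissipation then works out to A·coth(A/2). This product approaches 2, the smallest value the bound allows, only in the near-equilibrium limit A → 0, and it grows as the rotor is driven further from equilibrium. The function name `fluctuation_dissipation_product` is hypothetical.

```python
"""Fluctuation-dissipation product for a toy driven rotor.

Assumption: clockwise steps at rate k_plus, counterclockwise at rate k_minus.
Each net clockwise step dissipates A = ln(k_plus / k_minus) in units of k_B T.
Over time t:  <J> = (k_plus - k_minus) t,  Var(J) = (k_plus + k_minus) t,
heat Q = <J> * A  (in k_B T). The bound requires Var/<J>^2 * Q >= 2.
"""
import math


def fluctuation_dissipation_product(k_plus: float, k_minus: float, t: float = 1.0) -> float:
    """Return (Var(J) / <J>^2) * Q / (k_B T) for the rotor; the bound says it is >= 2."""
    if k_plus == k_minus:
        raise ValueError("at equilibrium the mean current is zero and the ratio is undefined")
    mean = (k_plus - k_minus) * t
    var = (k_plus + k_minus) * t
    affinity = math.log(k_plus / k_minus)  # dissipation per net step, in k_B T
    heat = mean * affinity                 # total heat over time t, in k_B T
    return var / mean**2 * heat            # t cancels out of this product


if __name__ == "__main__":
    k_minus = 1.0
    for affinity in (0.01, 0.5, 2.0, 5.0, 10.0):  # near equilibrium -> far from it
        k_plus = k_minus * math.exp(affinity)
        product = fluctuation_dissipation_product(k_plus, k_minus)
        closed = affinity / math.tanh(affinity / 2.0)  # A * coth(A / 2) for this model
        print(f"A = {affinity:5.2f} k_B T  product = {product:8.4f}  (A*coth(A/2) = {closed:8.4f})")
```

In this toy model, raising the drive from 0.01 to 10 k_BT per step raises the product from essentially 2 to about 10. The further the rotor is pushed from equilibrium, the more it fluctuates relative to the minimum its dissipation would allow.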

The team says scientists can use the relationships established in its proof to understand the energy requirements in certain cellular systems, as well as to design reliable synthetic molecular machines.

"One of the things that's confusing about life is, it happens on a microscopic scale where there are a lot of processes that look pretty random," Gingrich says. "We view this proof as a signpost: Here is one thing that at least must be true, even in those extreme, far-from-equilibrium situations where life is operating."

Journal information: Physical Review Letters

Provided by Massachusetts Institute of Technology

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.