Scientists compose complex math equations to replicate behaviors of Earth systems

Whenever news breaks about what Earth's climate is expected to be like decades into the future or how much rainfall various regions around the country or the world are likely to receive, those educated estimates are generated by a global climate model.

But what exactly is a climate model? And how does it work?

At its most basic, a global climate model (GCM) is a computer program that solves complex equations that mathematically describe how Earth's various systems, processes and cycles work, interact and react under certain conditions. It's math in action.

A global model depends on submodels

Submodels can be broken into two classes: dynamics and physics. Dynamics refers to fluid dynamics; the atmosphere and the ocean are both treated mathematically as fluids. The physics class includes natural processes such as soil respiration in the organic carbon cycle and sunlight as it passes through and heats the atmosphere.

Just as Earth's major systems and spheres (the atmosphere, the biosphere, the hydrosphere and the cryosphere) interact with and influence each other, so too must the subprograms in a climate model that represent them. This is accomplished through a technique called coupling, in which scientists develop additional equations and subprograms that knit together disparate submodels. That's what climate researcher Rob Jacob does at the U.S. Department of Energy's (DOE) Argonne National Laboratory.

"It's like a handshake," he said. "If you have two submodels that don't know each other, a software program has to be created that allows these disparate components to communicate and interact."

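The handshake idea can be illustrated with a toy sketch. Everything in it is hypothetical: the two classes, their update rules and the rate constants are invented for illustration and are not taken from any real climate model. The only point is the role of the coupler, which translates between two components that use different units and do not know about each other.

```python
from dataclasses import dataclass


@dataclass
class ToyAtmosphere:
    """Hypothetical submodel that tracks air temperature in kelvin."""
    air_temp_k: float

    def step(self, surface_temp_k: float, rate: float = 0.1) -> None:
        # Relax the air temperature toward the surface temperature it is given.
        self.air_temp_k += rate * (surface_temp_k - self.air_temp_k)


@dataclass
class ToyOcean:
    """Hypothetical submodel that tracks sea-surface temperature in Celsius."""
    sst_c: float

    def step(self, air_temp_c: float, rate: float = 0.01) -> None:
        # The ocean responds more slowly than the air (assumed rate).
        self.sst_c += rate * (air_temp_c - self.sst_c)


def couple_step(atm: ToyAtmosphere, ocn: ToyOcean) -> None:
    """The 'handshake': convert units, then hand each model the other's state."""
    sst_k = ocn.sst_c + 273.15       # Celsius -> kelvin for the atmosphere
    air_c = atm.air_temp_k - 273.15  # kelvin -> Celsius for the ocean
    atm.step(sst_k)
    ocn.step(air_c)


atm = ToyAtmosphere(air_temp_k=290.0)
ocn = ToyOcean(sst_c=10.0)

initial_gap = abs(atm.air_temp_k - (ocn.sst_c + 273.15))
for _ in range(50):
    couple_step(atm, ocn)
final_gap = abs(atm.air_temp_k - (ocn.sst_c + 273.15))

print(f"air-sea temperature gap before: {initial_gap:.2f} K, after: {final_gap:.2f} K")
```

Neither toy class imports or calls the other; only `couple_step` knows both interfaces. Real couplers also have to reconcile different grids and time steps, which this sketch leaves out.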
All submodel equations are based on real-world field observations, measurements and certain immutable laws of physics. Developing such formulas is no simple feat; neither is the collection of field data, which is essential for equation development.

"Science is looking for general equations that apply in many cases," said Jacob, who works in Argonne's Mathematics and Computer Science Division. "So collecting field data from the widest possible parts of the globe is critically important if you're looking to have one set of equations that describe how a system behaves, and if you want it to cover all cases so that you can describe everything that happens to soil, for example, no matter where on the globe that soil is."

Field data are needed, too

As important as that is, there is a real need for more field data; the more data, the more accurate the submodel can be, he said. But getting that information is not as easy as it may seem.

Modeling clouds, for example, is an especially challenging task. Field research is always planned out a year or more in advance for a specific location and time period. So if there's not a cloud in the sky during that allotted time, then no data can be collected.

"Clouds are one of the greatest uncertain elements in climate models because they involve very small-scale physics that are too small to ever represent explicitly," Jacob said. "It's also very difficult to observe the entire life cycle of a cloud, and if you can't observe it closely, you certainly can't model it accurately."

When data are incomplete, climate modelers approximate the cycle of a system or process until the program can be updated with more hard data from the field.

"We compare model predictions with observations," Jacob said. "A model gets better when its output matches recorded, observed phenomena, so reaching 'best' is constrained by the data. And we're done as long as it matches all the observed phenomena, and we'll consider it done until we have observed phenomena that the model can't account for. It's the nature of science to expect that to happen. It's never done-done. It's done for now."

That does not mean that climate model projections are inaccurate. It simply means that newer projections are more finely tuned than earlier ones.

"For example, we have been predicting for many years that if we double carbon dioxide emissions into the atmosphere, we are going to increase the temperature by two to four degrees," Jacob said. "That calculation has stood the test of time. You can refine details of that calculation, but you're not suddenly going to change the swing in temperature to minus 10 degrees. What climate modeling is trying to do right now is understand the details behind that very basic 2- to 4-degree estimate."

Higher resolution means more accurate results

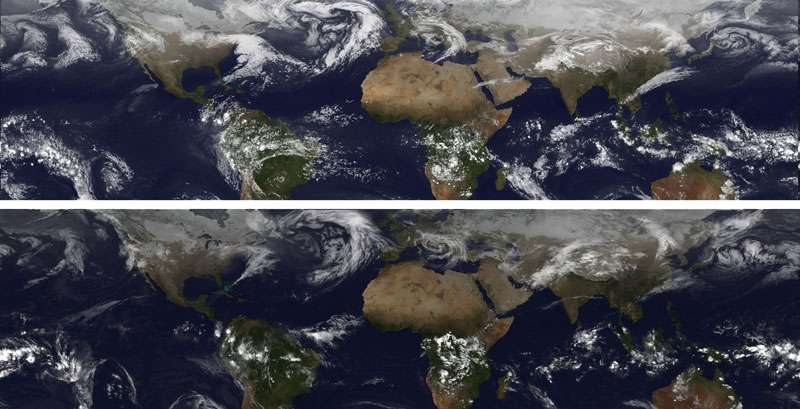

Fine-tuning a model also includes improving its resolution. A model's resolution is determined by the number of cells contained in the grid that covers a computational representation of Earth. Jacob equated the number of cells in a model to the number of pixels in a digital picture. The more cells a model has, the higher its resolution will be and the more potentially precise its results.

The higher a model's resolution is, the shorter the distance between grid points and the more localized its results will be. The current standard resolution is 50 kilometers (km) between grid points, which is the highest resolution that can be run efficiently on the fastest supercomputers in use today.
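To get a feel for how quickly the cell count grows as grid points move closer together, here is a rough back-of-the-envelope sketch. It assumes uniform square cells covering a sphere with Earth's mean radius, which real model grids do not use exactly, and it ignores vertical levels. The cost function encodes a common rule of thumb (an assumption here, not a figure from the article): cell count grows as one over the spacing squared, and the time step must shrink in proportion to the spacing. The 25 km and 12 km spacings are included for comparison.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, a standard reference value


def approx_columns(spacing_km: float) -> int:
    """Rough number of grid columns if cells were uniform squares."""
    surface_area_km2 = 4.0 * math.pi * EARTH_RADIUS_KM ** 2
    return round(surface_area_km2 / spacing_km ** 2)


def relative_cost(spacing_km: float, baseline_km: float = 50.0) -> float:
    """Assumed rule of thumb: cost scales as (baseline / spacing) cubed.

    Two powers come from the extra cells, one from the shorter time step.
    """
    return (baseline_km / spacing_km) ** 3


for spacing in (50.0, 25.0, 12.0):
    print(
        f"{spacing:>4.0f} km: ~{approx_columns(spacing):,} columns, "
        f"~{relative_cost(spacing):.0f}x the cost of a 50 km run"
    )
```

Under these assumptions a 50 km grid has on the order of 200,000 columns, and halving the spacing to 25 km quadruples that count while making each simulated year roughly eight times more expensive to compute.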

"That can eventually be pushed down to 25 km between grid points and even down to 12 km, but more powerful supercomputers than exist today are needed to run such high-resolution models," Jacob said.

High-resolution climate models can only run on supercomputers, such as Mira, the Blue Gene/Q supercomputer at the Argonne Leadership Computing Facility (ALCF). Supercomputers are ideally suited to handle the complex sets of equations contained in a GCM.

Climate model results at higher resolution than is possible today are only a few years away as the speed of supercomputers continues to leap forward. By the close of 2018, for example, DOE will debut Aurora at Argonne. Aurora will be at least five times faster than any of today's supercomputers and will put the United States one step closer to exascale computing. An exascale computer will be able to perform a billion billion (10^18) calculations per second.

There are currently about 20 to 30 GCMs—also called general circulation models—in active use by scientists around the world. While many of these models have different architectures, they all tend to generate the same results.

Three of these models were developed in the United States. DOE and the National Center for Atmospheric Research developed a model starting about 20 years ago that is still in use today.

Provided by Argonne National Laboratory