What neuroscience can learn from computer science

What do computers and brains have in common? Computers are made to solve the same problems that brains solve. Computers, however, rely on drastically different hardware, which makes them good at different kinds of problem solving. For example, computers do much better than brains at chess, while brains do much better than computers at object recognition. A study published in PLOS ONE found that even bumblebee brains are amazingly good at selecting visual images with color, symmetry and spatial frequency properties suggestive of flowers. Despite their differences, computer science and neuroscience often inform each other.

In this post, I will explain how computer scientists and neuroscientists learn from each other's research, and I will review the current applications of neuroscience-inspired computing.

Brains are good at object recognition

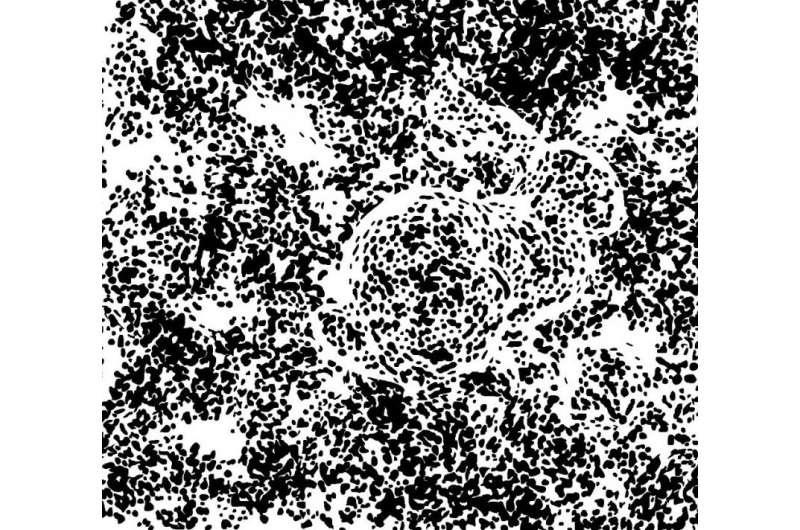

Look at the figure (image 1), which is composed of seemingly random dots. Initially, the object in the image blends into the background. Within seconds, however, you will see a curved line, and soon after the full figure. What is it? (The spoiler is at the end of this post.)

Computer vision algorithms, which are computer programs designed to identify objects in images and video, fail to recognize images like image 1. Humans also need time to recognize a hidden object, but they start looking at the right location in the image very soon, long before they are able to identify it. That does not mean that people somehow know which object they are seeing but cannot report the correct answer. Rather, people experience sudden perception of the object after continued observation. A 2014 study published in PLOS ONE found that this may be because the human visual system is amazingly good at picking out statistical irregularities in images.

Neuroscience-inspired computer applications

Like any experimental science, neuroscience research starts by listing all possible hypotheses that could explain the results of experiments. Researchers probe questions such as "How can a magician hide movement in plain sight?" or "What causes optical illusions?"

By comparison, computer science researchers begin by listing alternative methods of implementing a behavior, such as vision, in a computer. Instead of discovering how vision works, computer scientists develop software to solve problems such as: "How should a self-driving car respond to an apparent obstacle?" or "Are these two photographs of the same person?"

An acceptable computer-vision solution for artificial intelligence (AI), just like a living organism, must process information quickly and with limited knowledge. For example, a slow reaction by a self-driving car might result in the death of a child, or in traffic stopping because of a pothole. The processing speed of current computer vision algorithms is far behind the speed of the visual processes humans employ daily. However, the technical solutions that computer scientists develop may be relevant to neuroscience, sometimes acting as a source of hypotheses about how biological vision might actually work.

Likewise, most AI, such as computer vision, speech recognition or even robotic navigation, addresses problems already solved in biology. Thus, computer scientists often face a choice between inventing a new way for a computer to see and modeling it on a biological approach. A solution that is biologically plausible has the advantage of being resource-efficient and tested by evolution. Probably the best-known example of a biomimetic technology is Velcro, an artificial fabric recreating an attachment mechanism used by plants. Biomimetic computing, that is, recreating functions of biological brains in software, is just as ingenious, but much less well known outside the specialized community.

The interrelated components of neuroscience and computer science compelled me to explore how computer scientists and neuroscientists learn from each other. After visiting the International Conference on Perceptual Organization (ICPO) in June 2015, I made a list of trends in neuroscience-inspired computer applications that I will explore in more detail in this post:

- Computer vision based on features of early vision

- Gestalt-based image segmentation (Levinshtein, Sminchisescu, Dickinson, 2012)

- Shape from shading and highlights—which is described in more detail in a recent PLOS Student Blog post

- Foveated displays (Jacobs et al. 2015)

- Perceptually-plausible formal shape representations

My favorite example of the interlocking components of neuroscience and computer science is computer vision based on features of early vision. (Note that there are also many other approaches to computer vision that are not biologically inspired.) I particularly like this example because the discovery of seemingly simple principles by which the visual cortex processes information started a whole new trend in computer science research. To explain how computer vision borrows from biology, let's begin by reviewing the basics of human vision.

Inside the visual cortex

Let me present a hypothetical situation. Suppose you are walking along a beach with palm trees, tropical flowers and brightly colored birds. As new objects enter your field of vision, they seem to enter your awareness instantly. But in reality, shape perception emerges in the first 80-150 milliseconds of exposure (Wagemans, 2015). So how long is 150 ms? For comparison, in 150 ms a car travels four meters at highway speed, and a human walking along a beach travels about 20 centimeters in the time it takes to form a mental representation of an object, such as a tree. Thus, as you observe the palm trees, the flowers and the birds, your brain gradually assembles familiar percepts. During the first 80-150 ms, before awareness of the object has emerged, your brain is hard at work assembling shapes from short and long edges in various orientations, which are coded by location-specific neurons in the primary visual area, V1.
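To make the comparison concrete, here is a minimal arithmetic sketch. The two speeds are my own illustrative assumptions (roughly 96 km/h for a highway and 1.3 m/s for a stroll); they are simply values that reproduce the distances quoted above.

```python
# Distance covered during the ~150 ms it takes a shape percept to form.
# Both speeds are illustrative assumptions, not measured values.
PERCEPTION_TIME_S = 0.150   # upper end of the 80-150 ms range

HIGHWAY_SPEED_KMH = 96.0    # assumed highway speed
WALKING_SPEED_MS = 1.3      # assumed walking speed in meters per second


def distance_m(speed_ms, time_s):
    """Distance in meters travelled at a constant speed."""
    return speed_ms * time_s


car_m = distance_m(HIGHWAY_SPEED_KMH * 1000 / 3600, PERCEPTION_TIME_S)
walk_m = distance_m(WALKING_SPEED_MS, PERCEPTION_TIME_S)

print(f"car:    {car_m:.1f} m")
print(f"walker: {walk_m * 100:.1f} cm")
```

With these assumed speeds, the car covers about four meters and the walker a little under 20 centimeters.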

Today, we know a lot about the primary visual area V1 thanks to the pioneering research of Hubel and Wiesel, who discovered scale- and orientation-specific neurons in the cat visual cortex in the late 1950s and later won the Nobel Prize for this work. As an aside, if you have not yet seen the original videos of their experiments demonstrating how a neuron in a cat's visual cortex responds to a bar of light, I highly recommend viewing them!

Inside the computer

At about the time that Hubel and Wiesel made their breakthrough, mathematicians were looking for new signal-processing tools to separate data from noise in a signal. For a mathematician, a signal may be a voice recording, encoding a change of sound pressure over time. It may also be an image, encoding a change of pixel brightness over two dimensions of space.

When the signal is an image, signal processing is called image processing. Scientists care about image processing because it enables a computer "to see" a clear percept while ignoring the noise in sensors and in the environment, which is exactly what the brain does!

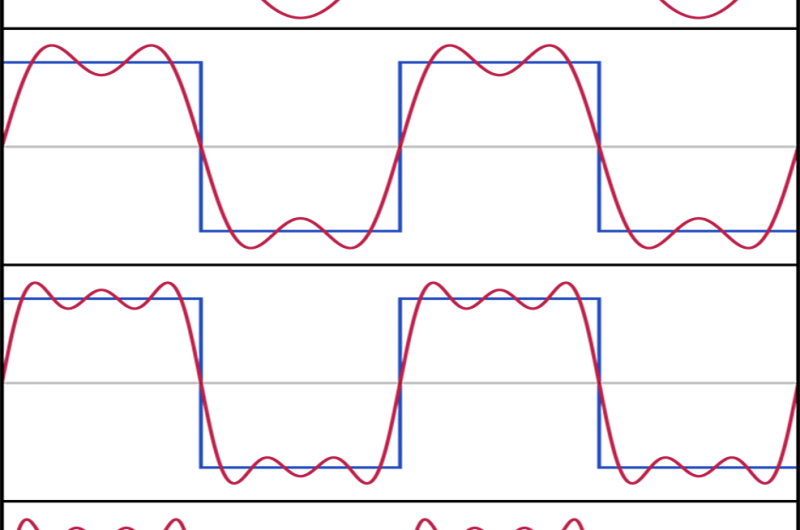

The classic tools of signal processing, Fourier transforms, were introduced by Joseph Fourier in the nineteenth century. A Fourier transform represents data as a weighted sum of sines and cosines. For example, it represents the sound of your voice as a sum of single-frequency components! As illustrated in the figure above, the more frequency components are used, the better the approximation. Unfortunately, unlike brain encodings, Fourier transforms do not explicitly encode the edges that define objects.
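The idea of approximating a signal with more and more frequency components can be sketched in a few lines. The example below is my own illustration, not taken from the figure: it assumes a square wave as the target signal, builds it up from its well-known sine series, and measures how the error shrinks as components are added. The function names are hypothetical.

```python
import math


def square_wave(t):
    """Target signal: +1 on the first half of each period, -1 on the second."""
    return 1.0 if math.sin(t) >= 0 else -1.0


def partial_sum(t, n_terms):
    """Sum of the first n_terms sine components of the square wave's Fourier series."""
    total = 0.0
    for i in range(n_terms):
        k = 2 * i + 1                     # only odd harmonics contribute
        total += (4 / math.pi) * math.sin(k * t) / k
    return total


def mean_squared_error(n_terms, samples=1000):
    """Average squared gap between partial sum and square wave over one period."""
    err = 0.0
    for s in range(samples):
        t = 2 * math.pi * (s + 0.5) / samples
        err += (square_wave(t) - partial_sum(t, n_terms)) ** 2
    return err / samples


errors = {n: mean_squared_error(n) for n in (1, 3, 10, 50)}
for n, e in errors.items():
    print(f"{n:3d} components -> mean squared error {e:.4f}")
```

The error falls steadily as components are added, but it falls slowly, because smooth sines need many terms to follow the sharp jump in the square wave. That jump is the one-dimensional analogue of an edge.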

To solve this problem, scientists experimented with sets of arbitrary basis functions encoding images for specific applications. Square waves, for example, encode low-resolution previews downloaded before a full-resolution image transfer is complete. Wavelet transforms of other shapes are used for compressing images, detecting edges and filtering out lens scratches captured on camera.
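Here is a minimal sketch of how a square-wave basis can yield a low-resolution preview. I am assuming the simplest such scheme, one level of an unnormalized Haar transform applied to a single row of pixels; the function names and the pixel values are made up for illustration.

```python
def haar_step(signal):
    """One level of an unnormalized Haar transform on an even-length signal.

    Returns pairwise averages (a half-resolution preview) and pairwise
    half-differences (the detail needed to restore the original).
    """
    averages = [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    details = [(signal[i] - signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    return averages, details


def haar_inverse(averages, details):
    """Rebuild the full-resolution signal from averages and details."""
    restored = []
    for a, d in zip(averages, details):
        restored.extend([a + d, a - d])
    return restored


# One row of pixel brightness values with a sharp edge in the middle.
row = [10.0, 12.0, 11.0, 13.0, 80.0, 82.0, 81.0, 79.0]

preview, detail = haar_step(row)
print("preview: ", preview)
print("detail:  ", detail)
print("restored:", haar_inverse(preview, detail))
```

The averages can be sent first as a coarse preview, and the details later to restore the full row. Note that the edge in the middle of the row survives in the preview, which is one reason such bases suit images.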

What do computers see?

It turns out that a specially selected set of image transforms can model the scale- and orientation-specific neurons in the primary visual area, V1. The procedure can be visualized as follows. First, we process the image with progressively lower spatial-frequency filters. The result is a pyramid of image layers, equivalent to seeing the image from further and further away. Then, each layer is filtered by several edge orientations in turn. The result is a computational model of the initial stage in early visual processing, which assumes that the useful data (the signal in the image) are edges within a frequency interval. Of course, such a model represents only a tiny aspect of biological vision. Nevertheless, it is a first step towards modeling more complex features and it answers an important theoretical question: If a brain could only see simple edges, how much would it see?
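The two-stage procedure can be sketched as follows. This is a deliberately crude illustration under my own assumptions: 2x2 block averaging stands in for the low-pass filters, and simple neighbor differences in two orientations stand in for the oriented edge filters that real models use. All function names are hypothetical.

```python
def downsample(image):
    """Halve resolution by averaging each 2x2 block (a crude low-pass filter)."""
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [
        [
            (image[2 * r][2 * c] + image[2 * r][2 * c + 1]
             + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def build_pyramid(image, levels):
    """Stack of progressively coarser copies of the image."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


def edge_energy(image, orientation):
    """Summed absolute brightness difference between neighboring pixels.

    'vertical' compares left/right neighbors, so it responds to vertical edges;
    'horizontal' compares up/down neighbors, so it responds to horizontal edges.
    """
    total = 0.0
    for r in range(len(image)):
        for c in range(len(image[0])):
            if orientation == "vertical" and c + 1 < len(image[0]):
                total += abs(image[r][c + 1] - image[r][c])
            elif orientation == "horizontal" and r + 1 < len(image):
                total += abs(image[r + 1][c] - image[r][c])
    return total


# Toy 8x8 image: dark left half, bright right half (one vertical edge).
image = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]

responses = [
    {o: edge_energy(layer, o) for o in ("vertical", "horizontal")}
    for layer in build_pyramid(image, levels=3)
]
for level, response in enumerate(responses):
    print(level, response)
```

In this toy image, only the vertical-edge filter responds, and it keeps responding at every layer of the pyramid, so the edge is visible both up close and "from further away". The table of responses per scale and orientation is the kind of description that the applications below build on.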

To sample a few applications, our computational brain could tell:

- Whether a photograph is taken indoors or outdoors (Guérin-Dugué & Oliva, 2000)

- Whether the material is glossy, matte or textured

- Whether a painting is a forgery, by recognizing individual artist brushstrokes.

Moreover, a computer brain can also do something that a real brain cannot; it can analyze a three-dimensional signal, a video. You can think of video frames as slices perpendicular to time in a three-dimensional space-time volume. A computer interprets moving bright and dark patches in the time-space volume as edges in three dimensions.
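To illustrate the space-time volume, here is a small sketch that treats a video as a list of frames and measures brightness changes along all three axes. The toy clip and the function names are my own assumptions, chosen only to show that motion appears as an edge tilted in space and time.

```python
def spacetime_gradient(video, t, y, x):
    """Forward differences of brightness along time, vertical and horizontal axes."""
    here = video[t][y][x]
    return (
        video[t + 1][y][x] - here,   # change over time
        video[t][y + 1][x] - here,   # change down the image
        video[t][y][x + 1] - here,   # change across the image
    )


def make_video(frames=4, size=6):
    """Toy clip: a bright vertical bar that moves one pixel right per frame."""
    return [
        [[1.0 if x == t + 1 else 0.0 for x in range(size)] for y in range(size)]
        for t in range(frames)
    ]


video = make_video()

# A pixel the bar is about to leave: bright now, dark in the next frame.
dt, dy, dx = spacetime_gradient(video, t=0, y=2, x=1)
print(dt, dy, dx)
```

At this pixel, brightness changes across the image and over time but not down the image. An edge that is slanted between the horizontal axis and the time axis is exactly what a moving vertical bar looks like in the volume.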

Using this technique, MIT researchers discovered and amplified imperceptible motions and color changes captured by a video camera, making them visible to a human observer. The so-called motion microscope reveals changes as subtle as face color changing with heartbeat, a baby's breath, and a crane swaying in the wind. Probably the most striking demonstration presented at ICPO 2015 last month showed a pipe vibrating into different shapes when struck by a hammer. Visit the project webpage for a demo and technical details.
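The core intuition of amplifying an imperceptible change can be shown with a single pixel. This is not the MIT researchers' algorithm, only a much simplified sketch under my own assumptions: I take the brightness of one pixel over time, treat its average as the stable part, and scale up whatever deviates from it. The pixel values and the `magnify` helper are hypothetical.

```python
def magnify(series, gain):
    """Amplify each sample's deviation from the series mean by `gain`."""
    mean = sum(series) / len(series)
    return [mean + gain * (value - mean) for value in series]


# Hypothetical brightness of one skin pixel over eight video frames:
# a tiny periodic flicker around 100, far too small to notice.
pixel = [100.0, 100.2, 100.0, 99.8, 100.0, 100.2, 100.0, 99.8]

amplified = magnify(pixel, gain=50)
print([round(v, 1) for v in amplified])
```

A flicker of 0.2 brightness units becomes a swing of about 10 units, which a viewer could easily see. A practical system would also have to separate the change of interest from sensor noise, which this sketch ignores.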

So, how far are computer scientists from modeling the brain? In 1950, early AI researchers expected computing technology to pass the Turing test by 2000. Today, computers are still used as tools for solving technically specific problems; a computer program can behave like a human only to the extent that human behavior is understood. The motivation for computer models based on biology, however, is twofold.

First, computer scientists and computer users alike are much more likely to see the output of a computer program as valid if its decisions are based on biologically plausible steps. A computer AI recognizing objects using the same rules as humans will likely see the same categories and come to the same conclusions. Second, computer applications are a test bed for neuroscience hypotheses. A computer implementation can tell us not only whether a particular theoretical model is feasible; it may also, unexpectedly, reveal alternative ways in which evolution could work.

Answer to image one riddle:

A rabbit

More information: "Unsupervised Neural Network Quantifies the Cost of Visual Information Processing." PLoS ONE 10(7): e0132218. DOI: 10.1371/journal.pone.0132218

"Stochastic Process Underlying Emergent Recognition of Visual Objects Hidden in Degraded Images." PLoS ONE 9(12): e115658. DOI: 10.1371/journal.pone.0115658

Journal information: PLoS ONE

Provided by Public Library of Science

This story is republished courtesy of PLOS Blogs: blogs.plos.org.