Human-computer interactions could be improved by a new efficient and accurate hand-gesture-recognition model.

Virtual reality, gaming and robotics rely on computers using depth images from cameras to recognize, understand and replicate actual body movements. Programming a computer to accurately identify hand gestures is particularly challenging because of the speed, complexity and dexterity of human hand movements.

Devices such as the popular Microsoft Kinect provide both video images and depth information. Processing software must combine these data to build up a three-dimensional (3D) representation of hand gestures, but this can be computationally expensive and is not always accurate.

Now, Li Cheng and Chi Xu at the A*STAR Bioinformatics Institute in Singapore have successfully trialed a new model that estimates hand movements and poses using only raw depth data, which allows for faster processing. The method appears robust and is at times more accurate than existing high-end programs.

"Hand pose estimation is a difficult problem," states Cheng. "For example, fingers are often clustered together and tend to obstruct each other. Recent programs are still not sufficiently reliable—3Gear, for example, only holds six gestures in its database and ignores all other possibilities. Our new method provides quick results for a large number of different poses."

The researchers trained their model using synthetic images of hand gestures before applying it to realistic low-resolution, noisy depth images of the kind typically produced by Kinect-type systems. They applied a voting-based classification technique derived from the Hough transform, known as a Hough 'forest', to locate the hand and estimate the likely orientation of the palm and fingers.
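
The voting idea behind Hough-style localization can be illustrated with a minimal Python sketch. It is not the researchers' implementation: in their system the offsets would be predicted from depth features, whereas here they are simply passed in as inputs, and the names `hough_vote_center`, `pixels`, `offsets` and `cell` are hypothetical.

```python
from collections import Counter


def hough_vote_center(pixels, offsets, cell=1):
    """Return the most-voted grid cell and its vote count.

    pixels  -- list of (x, y) integer pixel positions (assumed foreground)
    offsets -- list of (dx, dy) integer offsets, one per pixel; in a real
               system these would come from a trained predictor (assumption)
    cell    -- side length of the square accumulator cells, in pixels
    """
    votes = Counter()
    for (x, y), (dx, dy) in zip(pixels, offsets):
        # Each pixel votes for where it believes the hand centre lies.
        votes[((x + dx) // cell, (y + dy) // cell)] += 1
    (cx, cy), count = votes.most_common(1)[0]
    # Report the origin corner of the winning cell in pixel coordinates.
    return (cx * cell, cy * cell), count
```

Because every pixel votes independently, a few wrong votes from noisy pixels are outvoted by the consistent majority, which is one reason voting schemes tend to cope well with noisy depth data.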

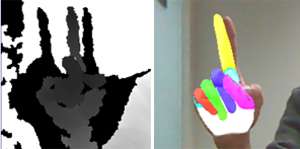

"As the hand can easily roll sideways—a movement not seen in other parts of the body—we used two further steps in the process to improve 3D accuracy," explains Cheng. Rotating a whole image is computationally costly; instead, the researchers applied a second Hough 'forest' with specially modified depth features to account for possible hand rotation. This step produced a list of probable hand poses from which the best fit was chosen (see image).
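
To see why rotating the features can be cheaper than rotating the image, consider the following Python sketch of a depth-difference feature whose two probe offsets are rotated by an angle. This is an illustration under stated assumptions, not the features used in the paper: the function names, the nearest-pixel rounding and the `background` value for out-of-range probes are all hypothetical.

```python
import math


def rotate(offset, theta):
    """Rotate a 2D offset (dx, dy) by theta radians in image coordinates."""
    dx, dy = offset
    c, s = math.cos(theta), math.sin(theta)
    return (c * dx - s * dy, s * dx + c * dy)


def depth_feature(depth, pixel, u, v, theta, background=10.0):
    """Depth difference between two probes around `pixel`.

    depth      -- 2D list indexed as depth[y][x]
    pixel      -- (x, y) position at which the feature is evaluated
    u, v       -- probe offsets, rotated by theta before sampling
    background -- value returned for probes outside the image (assumption)
    """
    def probe(offset):
        rx, ry = rotate(offset, theta)
        x = pixel[0] + round(rx)
        y = pixel[1] + round(ry)
        if 0 <= y < len(depth) and 0 <= x < len(depth[0]):
            return depth[y][x]
        return background

    return probe(u) - probe(v)
```

Only two offsets are rotated per feature evaluation, rather than every pixel of the image, so the cost of handling an in-plane rotation stays small.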

Unlike previous programs, which assume that the palm of the hand is flat, the new model can simulate the arch of the palm. This significantly improves how accurately gestures are represented. Tests using real hand movements demonstrated that the model could deliver fast and precise results.

The researchers hope their model will provide more efficient and accurate hand pose estimations in computer software. In the future, they also aim to include scenarios that involve hands interacting with physical objects.

More information: Xu, C. & Cheng, L. Efficient hand pose estimation from a single depth image. International Conference on Computer Vision, 3–6 December 2013. www.cv-foundation.org/openacce … _2013_ICCV_paper.pdf