November 13, 2013 weblog

Cycle Computing uses Amazon computing services to do work of supercomputer

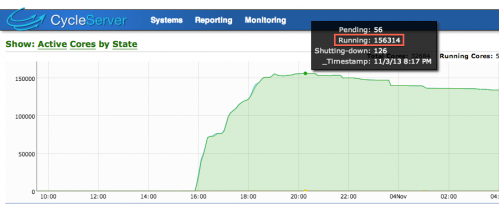

(Phys.org) —Computer services company Cycle Computing has announced that it has used Amazon's servers to run software for a client that simulated the properties of 205,000 molecules over an 18 hour period using 156,000 Amazon cores to get the job done. The cost to the client, the University of Southern California, was $33,000.

Supercomputers are big, fast and extremely expensive. For that reason, researchers have begun to look for other ways to process huge amounts of data for less money. Rushing in to fill that void are companies that match clients with distributed computing services such as those offered by Google, Microsoft or Amazon. Cycle Computing is one such company. In this latest endeavor, Mark Thompson, of USC wanted to find a faster way to crunch the mammoth amount of data needed to analyze molecules that might be useful for creating photovoltaic cells—in the past, it was done by grad students, one molecule at a time. More recently, software has been developed that can do the crunching—in this case, it was Schrödinger's Materials Science software suite. Unfortunately, crunching the data for a lot of molecules takes more computer resources than USC had to offer. That's where Cycle Computing came in—they were able to connect Thompson and his software with Amazon servers running all over the world—all at the same time. The result was an analysis of the suitability of 205,000 molecules in just 18 hours—a task that would have taken 264 years if run on a conventional computer.

The idea of using distributed server systems offered by big name companies hyping cloud services has become very enticing for big businesses looking to crunch massive amounts of data without having to fork over the huge amounts of cash normally associated with buying a supercomputer or renting time on one owned by someone else. And as with many business models, there has arisen a need for companies with expertise in connecting applications with such services—no small feat. To get the job done for USC, Cycle Computing had to secure the resources from Amazon, provide a pipeline between the client data and the Amazon servers and reallocate resources if there were outages—all while making sure the budget wasn't overrun. Cycle Computer managed the job using custom software it calls Jupiter. Company reps noted also that jobs such as the one they performed for USC are particularly suited for the type of server processing offered by cloud servers, noting that it was "pleasantly parallel"—the different parts of the project could be very easily broken into separate jobs and handled separately.

© 2013 Phys.org