March 1, 2013 report

MIT group shows unseen motion captured in video

(Phys.org) A baby lies in a crib, apparently motionless, a sight that worries many new parents, who wonder whether the baby is still breathing. When the video is run through an algorithm designed for amplification, it shows that the baby is indeed breathing, with movements that are invisible to the naked eye. That algorithm is at the heart of a project called motion magnification, run by a group of scientists at MIT. "Our goal is to reveal temporal variations in videos that are difficult or impossible to see with the naked eye," they have said. Their process breaks each frame of a video into its visual components, amplifies selected aspects of them, and then reconstructs the video.

The team, from MIT's Computer Science and Artificial Intelligence Laboratory, is developing a program that analyzes videos to pick up subtle movements. The program was originally developed to monitor newborns, but the researchers believe the algorithm can reveal imperceptible changes in other settings as well, such as monitoring hospital patients. In magnified video, a person's face can be seen flushing as the heart pumps blood, and a baby's pulse can be read. The video also reveals a spatial pattern of when and where the blood flows, so scientists could examine blood flow across the body as well as in the face. "There is a big world of small motions out there," said a team member.

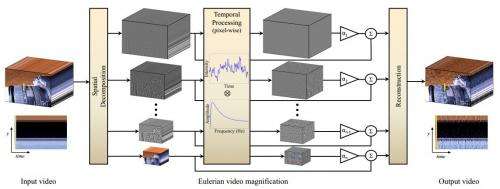

"Our method, which we call Eulerian Video Magnification, takes a standard video sequence as input, and applies spatial decomposition, followed by temporal filtering to the frames. The resulting signal is then amplified to reveal hidden information," the researchers wrote. They noted that the technique can run in real time, showing phenomena that occur at temporal frequencies selected by the user.
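The three steps in that description (spatial decomposition, temporal filtering, amplification) can be illustrated with a deliberately tiny sketch. It is not the team's released code. It assumes each frame is a single scanline of grayscale intensities, replaces the multi-scale spatial decomposition with one box-blur low-pass band, and uses an ideal DFT band-pass as the temporal filter. The names `box_blur`, `ideal_bandpass` and `magnify`, and the parameters `alpha`, `f_lo` and `f_hi`, are hypothetical.

```python
import cmath
import math


def box_blur(row, radius=1):
    """Spatial low-pass: replace each pixel by the mean of its neighbourhood
    (window clamped at the edges). A crude stand-in for a multi-scale pyramid."""
    n = len(row)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


def ideal_bandpass(signal, fps, f_lo, f_hi):
    """Temporal filter: keep only DFT bins whose frequency lies in [f_lo, f_hi] Hz."""
    n = len(signal)
    # Naive forward DFT (O(n^2)); acceptable for a short illustrative clip.
    spectrum = [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in range(n)]
    for k in range(n):
        freq = min(k, n - k) * fps / n  # bins k and n-k share the same |frequency|
        if not (f_lo <= freq <= f_hi):
            spectrum[k] = 0
    # Inverse DFT; the kept bins are conjugate-symmetric, so the result is real.
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]


def magnify(video, fps, f_lo, f_hi, alpha, radius=1):
    """video: list of frames, each a list of pixel intensities (one scanline).
    Returns new frames with temporal variations in [f_lo, f_hi] Hz amplified."""
    # 1. Spatial decomposition (simplified here to a single low-pass band).
    lowpass = [box_blur(frame, radius) for frame in video]
    n_frames, width = len(video), len(video[0])
    out = [list(frame) for frame in video]
    for x in range(width):
        # 2. Temporal filtering of each pixel's time series at a fixed position.
        series = [lowpass[t][x] for t in range(n_frames)]
        band = ideal_bandpass(series, fps, f_lo, f_hi)
        # 3. Amplify the filtered signal and add it back to the original frames.
        for t in range(n_frames):
            out[t][x] += alpha * band[t]
    return out


if __name__ == "__main__":
    fps, n_frames = 30, 60
    # Synthetic 5-pixel clip: a faint 1 Hz brightness "pulse" on a grey background.
    clip = [[0.5 + 0.01 * math.sin(2 * math.pi * t / fps)] * 5 for t in range(n_frames)]
    result = magnify(clip, fps, f_lo=0.5, f_hi=2.0, alpha=10)
    print(max(abs(f[2] - 0.5) for f in clip), max(abs(f[2] - 0.5) for f in result))
```

In a spatially uniform clip like the one above, an oscillation that falls inside the pass band has its amplitude scaled by 1 + `alpha`, while the static background and any components outside the band pass through unchanged. A practical version would use a real multi-level pyramid and an FFT or IIR filter instead of the naive DFT.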

"We are inspired by the Eulerian perspective," according to the scientists, "where properties of a voxel of fluid, such as pressure and velocity, evolve over time, in a spatially multiscale manner." In fluid dynamics, the Eulerian view watches fixed locations as the fluid moves through them, rather than following individual particles. In the same spirit, the researchers said, their approach does not explicitly estimate motion; instead, it exaggerates motion by amplifying temporal color changes at fixed positions.
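Why amplifying color changes at fixed pixels can look like magnified motion can be seen from a first-order sketch. It assumes a one-dimensional intensity profile f(x) that shifts by a small displacement δ(t); the symbols I, B, α and δ are introduced here for illustration.

$$
\begin{aligned}
I(x,t) &= f\big(x + \delta(t)\big) \approx f(x) + \delta(t)\,\frac{\partial f}{\partial x}, \\
B(x,t) &= \delta(t)\,\frac{\partial f}{\partial x} \quad \text{(temporal band-pass of } I \text{, assuming } \delta(t) \text{ lies in the pass band)}, \\
\tilde{I}(x,t) &= I(x,t) + \alpha\,B(x,t) \approx f(x) + (1+\alpha)\,\delta(t)\,\frac{\partial f}{\partial x} \approx f\big(x + (1+\alpha)\,\delta(t)\big).
\end{aligned}
$$

The last step is only valid while the amplified displacement (1 + α)δ(t) stays small relative to how quickly f varies in space, so very large amplification factors produce artifacts rather than cleanly larger motion.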

This is not the first time the work has been publicized: the team presented it last year at Siggraph, the annual computer graphics conference. What is new is that the team has revamped the work and posted code online for anyone interested in revealing motion that would otherwise be invisible to the naked eye. "Our team is still actively working on this direction, so people can expect more to come," said a team member. "We hope that it will motivate people to look deeper into this type of processing and different applications it can support."

More information:

people.csail.mit.edu/mrub/papers/vidmag.pdf

web.mit.edu/newsoffice/2013/cs … visible-changes.html

© 2013 Phys.org