Asimov's robots live on twenty years after his death

Renowned author Isaac Asimov died 20 years ago today. Although he wrote more than 500 books, the robot stories he began writing at age 19 are possibly his greatest accomplishment. They have become the starting point for any discussion about how smart robots will behave around humans.

The issue is no longer theoretical. Today, autonomous robots work in warehouses and factories. South Korea plans to make them jailers. To date, Google's fleet of autonomous cars has driven individuals, including a legally blind man, more than 200,000 miles through cities and along highways with little human intervention.

Several nations, notably the United States, South Korea, and Israel, operate military drones and autonomous land robots. Many are armed. The day is fast approaching when semiconductor circuits may make life-and-death decisions based on mathematical algorithms.

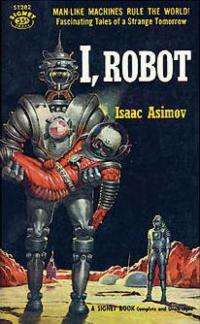

Robots were still new when Asimov began writing about them in 1939. The word was first used in a play by Czech playwright Karel Čapek in 1920, the same year Asimov was born. The teenaged Asimov saw them in pulp magazines sold in his family's candy store in Brooklyn. Lurid drawings depicted them turning on their creators, usually while threatening a scantily clad woman.

Asimov preferred stories that portrayed robots sympathetically.

"I didn't think a robot should be sympathetic just because it happened to be nice," he wrote in a 1980 essay. "It should be engineered to meet certain safety standards as any other machine should in any right-thinking technological society. I therefore began to write stories about robots that were not only sympathetic, but were sympathetic because they couldn't help it."

That idea infused Asimov's first robot stories. His editor, John Campbell of "Astounding Science-Fiction," wrote down a list of rules Asimov's robots obeyed. They became Asimov's Three Laws of Robotics:

• A robot must not injure a human being or, through inaction, allow a human being to come to harm.

• A robot must obey the orders given it by human beings except where those orders would conflict with the First Law.

• A robot must protect its own existence, except where such protection would conflict with the First or Second Law.

Like most things Asimov wrote, the Three Laws were clear, direct, and logical. Asimov's stories, on the other hand, showed how easily they could fail.

In "Liar!," a telepathic robot lies rather than hurt people's feelings, because it treats emotional pain as harm under the First Law. Ultimately, the lies create havoc and break the heroine's heart.

In "Runaround," a robot must risk danger to follow an order. When it nears the threat, it pulls back to protect itself. Once safe, it starts to follow the order again. The robot keeps repeating this loop. The hero finally breaks it by deliberately putting himself in danger, forcing the robot to default to the First Law and save his life.

Asimov's robots are adaptive and sometimes reprogram themselves. One develops an emotional attachment to its creator. Another finds a logical reason to turn on humans. A robot isolated on a space station decides humans do not exist and develops its own religion. Another robot sues to be declared a person.

The contradictions in Asimov's laws encouraged others to propose new rules. One proposed that human-looking robots always identify themselves as robots. Another argued that robots must always know they are robots. A third, tongue in cheek, proposed that robots only kill enemy soldiers.

Michael Anissimov, of the Singularity Institute for Artificial Intelligence, a Silicon Valley think tank founded to develop safe AI software, argued that any set of rules will always have conflicts and grey areas.

In a 2004 essay, he wrote, "it's not so straightforward to convert a set of statements into a mind that follows or believes in those statements."

A robot might misapply laws in complex situations, especially if it did not understand why a given law was created, he said. Robots might also modify rules in unexpected ways as they reprogram themselves to adapt to new circumstances.

Instead of rules, Anissimov believes we must create "friendly AI" that loves humanity.

While truly intelligent robots are decades away, autonomous robots are already making decisions independently. They require a different set of rules, according to Texas A&M computer scientist Robin Murphy and Ohio State Cognitive Systems Engineering Lab director David Woods.

In a 2009 paper, they proposed three laws to govern autonomous robots. The first holds that because humans deploy robots, human-robot systems must meet high safety and ethical standards.

The second asserts that robots must obey appropriate commands, but only from a limited number of people. The third says that robots must protect themselves, but only after they transfer control of whatever they are doing (like driving a bus or running a machine) to humans.

The debate continues. In 2007, South Korea announced plans to publish a charter of human-robot ethics. It is likely to address issues that experts have identified, such as human addiction to robots (which could mimic how humans respond to video games or smartphones), human-robot sex, and safety. The European Robotics Research Network is considering similar issues.

This discussion would have happened with or without Isaac Asimov. Yet his Three Laws, and their limits, have certainly shaped the debate.

Source: Inside Science News Service